
Data observability has emerged as a critical discipline for ensuring reliable, trustworthy data in complex environments. But what exactly is data observability? At its core, data observability is the ability to holistically understand and monitor the health of your data across its entire lifecycle – from the data content itself to the pipelines that move it, the infrastructure that houses it, how it’s used, and even the costs associated with it.

It is a strategic, 360° approach that goes beyond traditional data monitoring or quality checks, enabling data teams to detect and fix issues before they disrupt business, inflate costs, or derail AI initiatives. In practice, data observability continuously tracks a wide array of signals (data quality metrics, pipeline metadata, system logs, user behavior, cost metrics, etc.) across distributed systems, using automation and AI to spot anomalies, discover unknown problems, and trigger timely alerts or corrective actions. The result is end-to-end visibility into your data ecosystem’s health, allowing your teams to be proactive instead of reactive.

Why does this matter now? Traditional, siloed monitoring tools can’t keep up with the complexity of modern data architectures – they typically flag only predefined issues or focus on a single area. The consequence is that critical data issues (like a silent schema change upstream or a subtle data drift) can go undetected until they wreak havoc on dashboards, machine learning models, or business processes. Data observability addresses this gap by providing a comprehensive lens over everything that happens to your data, so you’re the first to know when something goes wrong, what broke, and how to fix it.

In this guide, we’ll delve into what data observability means for data engineers and technical teams, how it differs from traditional monitoring or data quality efforts, and how to implement it effectively. We’ll break down the five key pillars of observability, explore DQLabs’ multi-layered approach and maturity model, and provide actionable steps and best practices for deploying data observability in your own stack (including integration with tools like Airflow, dbt, Snowflake, and Databricks). We’ll also examine specific use cases such as AI/ML readiness and FinOps (cost) observability and discuss how to scale observability across hybrid environments. By the end, you should have a clear understanding of data observability and a roadmap to leverage it for more resilient, efficient, and cost-effective data operations.

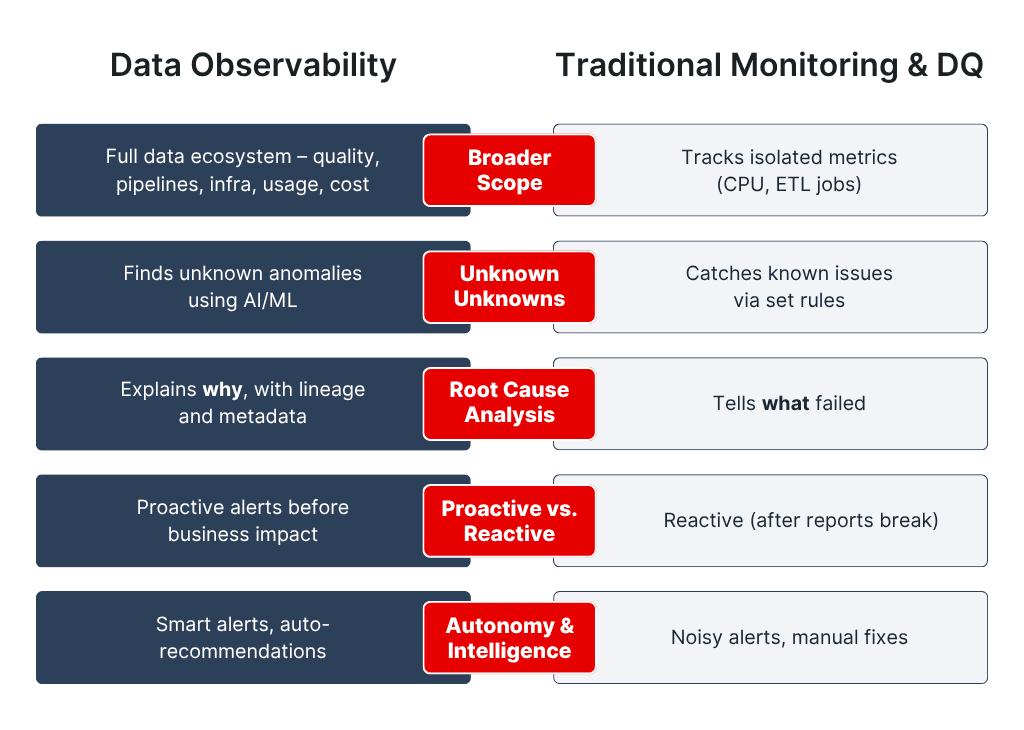

Data Observability vs. Traditional Data Monitoring and Data Quality

It’s important to clarify how data observability differs from traditional monitoring or basic data quality management, as the terms can be confusingly intertwined:

- Broader Scope: Traditional data monitoring tools (or infrastructure monitoring systems) typically track specific events or metrics in isolation – for example, CPU usage on a server, or whether an ETL job succeeded. Similarly, classic data quality tools focus on the content of data, checking for issues like missing values or invalid entries against predefined rules. Data observability, on the other hand, encompasses the full spectrum of data health. It monitors not only data quality metrics but also pipeline performance, system resource utilization, data usage patterns, and even financial aspects of data operations. In essence, observability connects the dots between data content, the processes that handle data, and the environment it lives in, giving a holistic view of the entire data ecosystem rather than isolated snapshots.

- Unknown Unknowns: Traditional monitoring usually relies on known failure modes or preset thresholds. For instance, you might set an alert if a pipeline runtime exceeds X minutes or if null values go above Y%. This is effective for known issues but often misses novel or subtle problems. Data observability is designed to handle “unknown unknowns.” Observability platforms leverage intelligent anomaly detection and machine learning to learn baseline behaviors and flag deviations that weren’t explicitly anticipated. For example, if a normally consistent daily data load suddenly drops by 30% with no rule defined for that scenario, a good observability solution would catch it. In short, while monitoring answers “Is this specific metric okay?”, observability asks “Is everything about my data okay, and if not, where and why?”

- Depth of Insight and Root Cause Analysis: When a traditional monitor triggers an alert (say a pipeline failed), it often tells you what happened, but not much about why. Data observability tools are typically built with rich context to enable faster troubleshooting. They automatically collect metadata like data lineage (where the data came from and where it’s going), recent changes in upstream systems, schema modifications, and so on. This means that when an issue arises, engineers can quickly pinpoint the root cause (e.g., a broken upstream source, a code change in a dbt model, or a sudden surge in usage that overwhelmed the system) instead of manually combing through logs. In essence, observability doesn’t just monitor raw metrics – it correlates events and provides actionable intelligence to fix problems.

- Proactive vs. Reactive: Data observability flips the approach from reactive firefighting to proactive prevention. In a traditional setup, data teams often learn about issues only after a report breaks or a business user complains (“this dashboard looks wrong!”). With observability, the goal is for the system to notify you of anomalies in data or pipelines before they escalate into business-impacting incidents. This early warning system is crucial for maintaining trust. For example, observability might alert the team that today’s sales data in Snowflake hasn’t been updated by its usual time, allowing them to investigate and resolve the delay before the end-of-day revenue report goes out. It’s a shift from passively monitoring to actively observing and maintaining data health in real time.

- Autonomy and Intelligence: Modern data observability platforms (like DQLabs) bring an autonomous, AI-driven layer on top of monitoring. They not only identify issues but can also recommend fixes. For instance, if a data quality check fails due to a known data format issue, an observability platform might suggest a transformation fix or automatically quarantine the bad data. This goes beyond the scope of traditional tools. Additionally, observability solutions reduce “noise” by learning which anomalies are truly important. Instead of flooding engineers with hundreds of alerts (as naive monitoring might), a smart observability tool uses semantic understanding and pattern recognition to cut down false positives and alert fatigue, focusing your attention on the anomalies that matter most.
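To make the “unknown unknowns” idea concrete, here is a minimal Python sketch of baseline learning: instead of a hand-set threshold, it derives the expected daily row count from recent history and flags values that fall far outside it. The function name, the seven-day history, and the 3-sigma cutoff are illustrative assumptions, not how DQLabs or any specific platform implements anomaly detection; production systems typically also account for seasonality and trend.

```python
import math
from statistics import mean, stdev


def detect_volume_anomaly(history, today, z_threshold=3.0):
    """Flag today's row count if it deviates strongly from a learned baseline.

    history: recent daily row counts (at least two) that define "normal".
    Returns (is_anomaly, z_score).
    """
    if len(history) < 2:
        raise ValueError("need at least two historical points to learn a baseline")
    baseline = mean(history)
    spread = stdev(history)  # sample standard deviation of the history
    if spread == 0:
        # A perfectly flat history: any change at all counts as a deviation.
        changed = today != baseline
        return changed, (math.inf if changed else 0.0)
    z = (today - baseline) / spread
    return abs(z) > z_threshold, z


if __name__ == "__main__":
    # Hypothetical week of daily loads, then a ~30% drop that no rule anticipated.
    history = [10_000, 10_200, 9_900, 10_100, 9_800, 10_050, 9_950]
    flagged, z = detect_volume_anomaly(history, 7_000)
    print(flagged, round(z, 1))  # True -22.7
```

No rule mentions “30%” anywhere; the drop is caught only because it sits more than 22 standard deviations below what the history says is normal.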

In summary, data observability is a superset and evolution of monitoring and data quality practices. Monitoring and quality checks are still essential pieces, but observability unifies them, layers on intelligence, and aligns the whole process with both technical and business outcomes. Rather than just measuring data against static rules, it continually asks if your data ecosystem is behaving as expected, and if not, shines a light on where to look. This comprehensive approach is what makes data observability so powerful for modern data engineering teams.

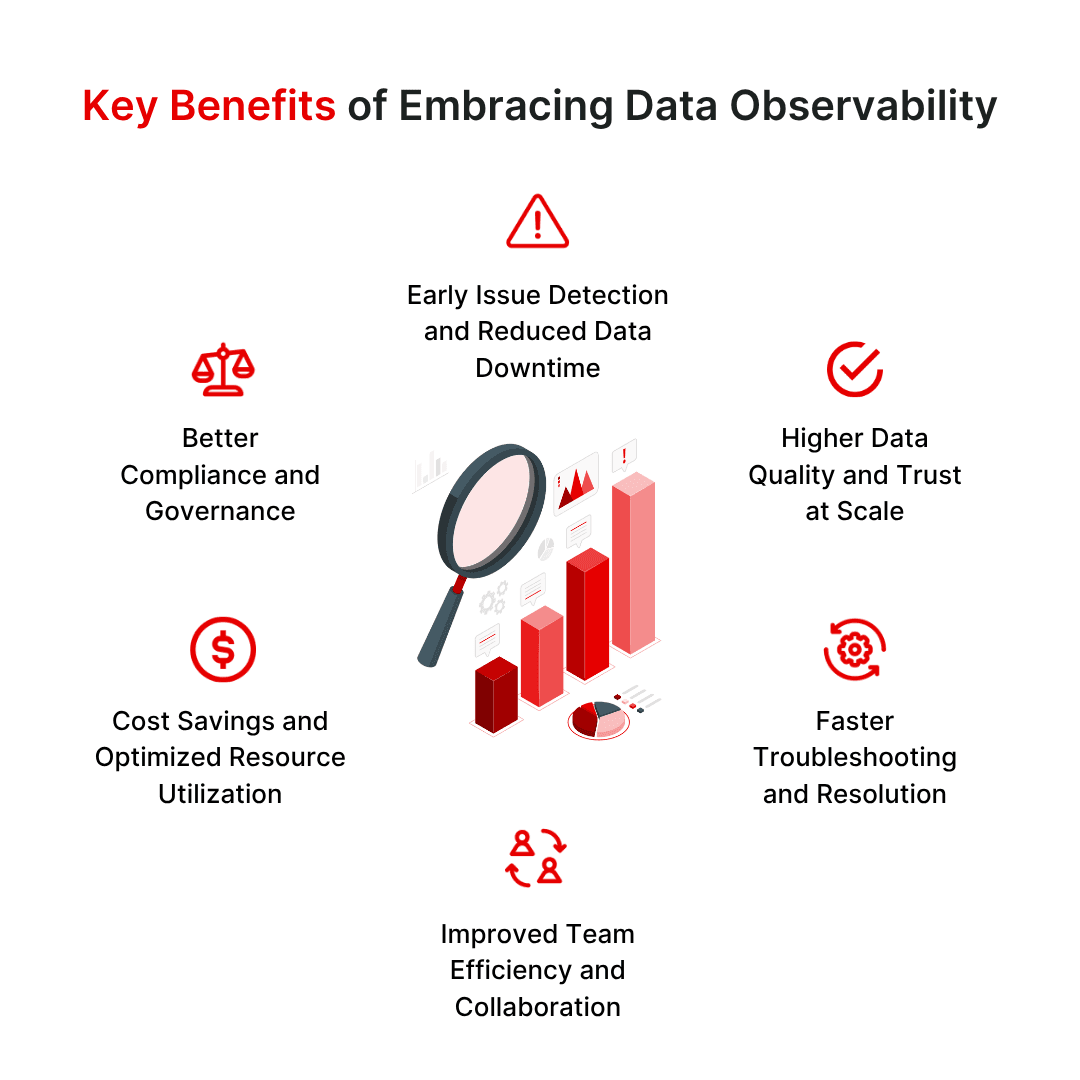

Key Benefits of Embracing Data Observability

Why should data professionals invest time and resources in data observability? Simply put, it directly translates to more reliable data and more efficient operations. Some of the key benefits include:

- Early Issue Detection and Reduced Data Downtime: Data observability enables you to catch problems in real time (or even predict them) before they propagate. This means less “data downtime” – those periods when data is missing, inaccurate, or otherwise untrustworthy. Companies adopting robust observability have reported significant reductions in major incidents. For example, identifying and fixing a broken pipeline early can prevent hours of downstream delays or incorrect analytics. Over a year, this proactive stance can save organizations millions of dollars that would otherwise be lost to firefighting and reprocessing bad data.

- Higher Data Quality and Trust at Scale: By continuously monitoring data health across various dimensions (accuracy, completeness, timeliness, etc.), observability improves overall data quality. Teams and business users gain confidence that the data they’re using is correct and up-to-date. This increased trust enables more aggressive use of data in decision-making and AI models because everyone knows that if something goes off-kilter, it will be caught and addressed quickly. In essence, observability lets you scale up data volume and complexity without sacrificing reliability.

- Faster Troubleshooting and Resolution: When issues do occur, an observability platform drastically cuts down time to resolution. With features like centralized logging, lineage, and automated root cause analysis, engineers can often find the needle in the haystack in minutes rather than days. Many organizations see a 2–3× improvement in mean time to resolution (MTTR) for data incidents after implementing observability. Faster fixes mean less impact on end-users and more stable SLAs for data availability.

- Improved Team Efficiency and Collaboration: Data observability practices free up valuable engineering hours. Instead of manually auditing data or reacting to chaos, engineers get automated insights at their fingertips. This can lead to as much as a 60–70% reduction in time spent investigating data issues, allowing the team to focus on building new features and innovating. Moreover, with a “single pane of glass” view of data health, different teams (data engineering, analytics, DevOps, etc.) can collaborate using the same facts. An ops engineer can see if a pipeline failed due to an upstream data issue and quickly involve the data engineer responsible, all within the observability dashboard. Shared visibility breaks down silos and aligns everyone toward quick resolution and continuous improvement (supporting a DataOps culture).

- Cost Savings and Optimized Resource Utilization: Trustworthy data and efficient pipelines also have a direct financial benefit. By observing cost-related metrics (more on FinOps later), companies can identify wasteful processes – e.g., an ETL job that suddenly uses twice the compute resources due to a rogue query or an unnecessary full data scan. Stopping or optimizing such inefficiencies can save significant cloud spend. Additionally, early error detection means you avoid costly re-runs of pipelines or patchwork fixes that consume extra compute and labor. It’s not uncommon to see observability initiatives pay for themselves through lower cloud bills and prevention of expensive data errors (think of the cost of making a strategic decision on faulty data – observability helps avert those scenarios).

- Better Compliance and Governance: With comprehensive observability, you inherently get detailed logs and audit trails of your data’s journey. This is a boon for governance and compliance. You can prove that data is monitored for quality and integrity at all times, which is valuable in regulated industries. If an unusual data access occurs or a sensitive data pipeline fails, the observability system can flag it, aiding in security and compliance efforts. Overall, observability can be seen as a foundational layer that supports data governance policies with real-time enforcement and transparency.

In short, data observability isn’t just a “nice-to-have” – it directly impacts the reliability, efficiency, and cost-effectiveness of your data operations. Now, let’s break down the core components of observability in more detail.

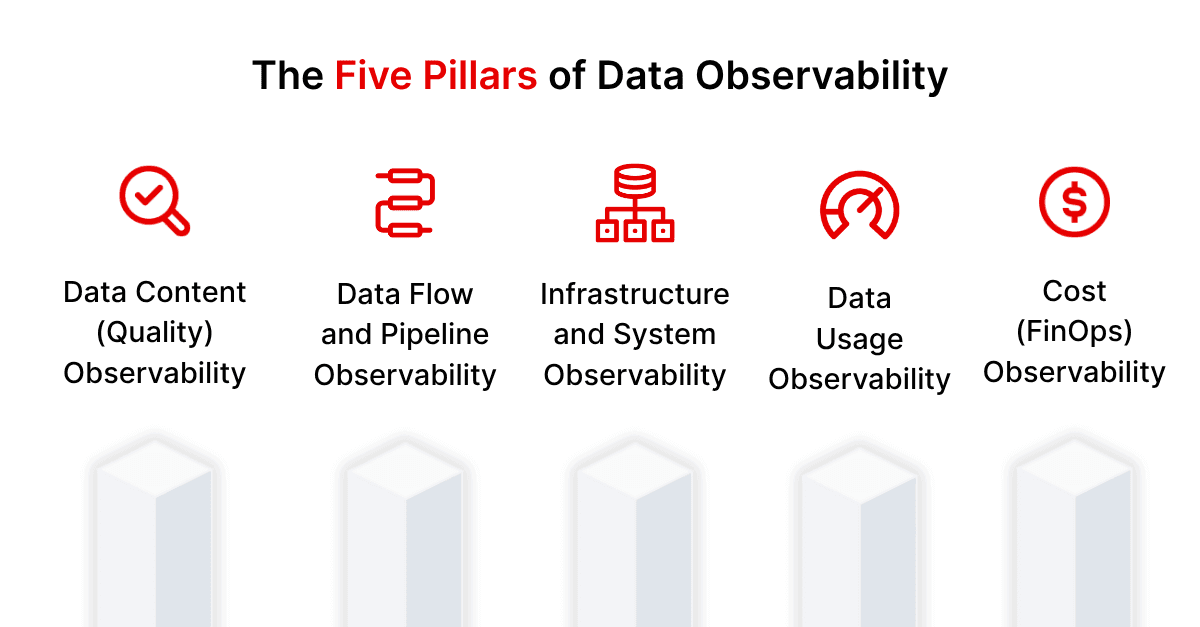

The Five Pillars of Data Observability

A robust data observability strategy spans five key pillars (or dimensions) of observation. These represent the different aspects of your data ecosystem that need to be continuously monitored and analyzed to achieve full visibility:

1. Data Content (Quality) Observability

Focus: What is the state of the data itself? This pillar covers the health of the data content – essentially data quality and statistical properties. It involves monitoring things like:

- Data quality metrics: completeness (e.g., are any values missing or null where they shouldn’t be?), accuracy/validity (do values conform to expected formats and ranges?), uniqueness (are there duplicate records?), consistency (do related data points align?), and so on.

- Anomalies and outliers in data: detecting unusual patterns within the data. For instance, a sudden spike in zero values in a sales amount field, or a category in a dimension that normally has 5 values now showing a 6th unexpected value.

- Schema changes and drift: tracking changes in the structure of data, such as added/dropped columns or changes in data types. Even if a pipeline doesn’t fail, a silent schema change could mean downstream reports break or produce incorrect results – observability will catch that.

- Business rule violations: Many datasets have implicit or explicit business rules (e.g., “order_date should not be in the future” or “each account must have a country code”). Content observability checks these rules continuously, often through configurable checks or learned patterns.
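The content checks listed above (completeness, uniqueness, business rules, schema drift) can be sketched in plain Python over one batch of records. The column names (`order_id`, `order_date`, `country_code`, `amount`) and the two rules mirror the examples in the text but are otherwise hypothetical, and the function names are illustrative; a real platform would typically push equivalent checks down to the warehouse as SQL rather than evaluate them in application memory.

```python
from collections import Counter
from datetime import date


def profile_quality(rows, key, required, rules):
    """Compute basic content-quality signals for one batch of records.

    rows: list of dicts; key: column expected to be unique;
    required: columns that should never be null; rules: {name: predicate(row) -> bool}.
    """
    total = len(rows)
    null_rates = {
        col: (sum(1 for r in rows if r.get(col) is None) / total) if total else 0.0
        for col in required
    }
    # Each extra occurrence of a key beyond the first counts as one duplicate.
    key_counts = Counter(r.get(key) for r in rows)
    duplicate_keys = sum(n - 1 for n in key_counts.values() if n > 1)
    violations = {name: sum(1 for r in rows if not check(r)) for name, check in rules.items()}
    return {
        "row_count": total,
        "null_rates": null_rates,
        "duplicate_keys": duplicate_keys,
        "rule_violations": violations,
    }


def schema_diff(expected, observed):
    """Compare two {column: type} mappings and report schema drift."""
    return {
        "added": sorted(observed.keys() - expected.keys()),
        "dropped": sorted(expected.keys() - observed.keys()),
        "retyped": sorted(c for c in expected.keys() & observed.keys() if expected[c] != observed[c]),
    }


if __name__ == "__main__":
    as_of = date(2024, 5, 1)  # fixed "today" so the example is reproducible
    rows = [
        {"order_id": 1, "order_date": date(2024, 4, 30), "country_code": "US", "amount": 10.0},
        {"order_id": 2, "order_date": date(2024, 5, 3), "country_code": "DE", "amount": None},
        {"order_id": 2, "order_date": date(2024, 4, 29), "country_code": "", "amount": 5.0},
    ]
    rules = {
        # Null dates are left to the completeness check, so they pass this rule.
        "order_date_not_future": lambda r: r["order_date"] is None or r["order_date"] <= as_of,
        "has_country_code": lambda r: bool(r.get("country_code")),
    }
    print(profile_quality(rows, "order_id", ["amount", "order_date"], rules))
    print(schema_diff({"id": "NUMBER", "email": "VARCHAR"},
                      {"id": "VARCHAR", "email": "VARCHAR", "phone": "VARCHAR"}))
```

Note that `schema_diff` reports a type change even when every pipeline still succeeds, which is exactly the “silent” drift the text warns about.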

Why it matters: This pillar ensures the data itself is trustworthy. It’s the evolution of traditional data quality monitoring into an always-on, automated guardrail. By observing data content, you can catch issues like data corruption, unexpected values, or gradual quality degradation. For example, if a third-party data feed starts delivering empty fields due to an upstream bug, data content observability will quickly surface that problem. In summary, content observability is the foundation of data reliability – it answers “Is my data correct and complete right now?”

2. Data Flow and Pipeline Observability

Focus: How is data moving and transforming through the ecosystem? This pillar looks at the data pipelines, workflows, and dependencies that transport and process data. Key aspects include:

- Pipeline health and failures: Monitoring ETL/ELT jobs, data ingestion processes, streaming pipelines, etc., to ensure they run on schedule and complete successfully. If a pipeline fails, stalls, or runs slower than usual, observability will flag it.

- Performance metrics: Tracking pipeline performance indicators like latency (e.g., how long a job takes to run), throughput (data volumes processed per run or per time unit), and frequency (is a daily job actually running daily at the expected time?). Any degradation could indicate an issue (a job taking twice as long as normal might be a sign of upstream data growth or performance bottlenecks).

- Data volume and completeness: Observing the volume of data flowing through pipelines (e.g., number of records loaded). Sudden drops or spikes in volume (not explained by normal seasonality) often indicate a problem such as a drop in source data or duplicate processing. Pipeline observability ensures that no data is unexpectedly missing or duplicated as it moves.

- Workflow dependencies and scheduling: In complex pipelines, one job’s output is another’s input. Observability tracks these dependencies and can detect when upstream issues might cascade. For example, if an upstream extraction is delayed, it can warn that dependent transformations will also be delayed.

- Basic data lineage: Understanding where data in a pipeline comes from and where it’s going. If a data set is corrupted, lineage helps identify which pipeline or source introduced the issue.
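Two of the pipeline signals above, “is a daily job actually running daily?” and “a job taking twice as long as normal”, can be checked from run metadata alone. This is a sketch assuming you already have `(started_at, duration_seconds)` records for a job (how they are pulled from an orchestrator such as Airflow is out of scope here); the function name, the one-hour grace period, and the factor of 2 are illustrative choices. Comparing against the median of earlier runs rather than the mean keeps one past outlier from distorting what “typical” means.

```python
from datetime import datetime, timedelta
from statistics import median


def check_pipeline_runs(runs, now, expected_interval, grace=timedelta(hours=1), slowdown_factor=2.0):
    """Return human-readable issues for one pipeline.

    runs: list of (started_at, duration_seconds) tuples, oldest first.
    expected_interval: how often the job should start (e.g. daily).
    """
    if not runs:
        return ["no runs recorded"]
    issues = []
    last_start, last_duration = runs[-1]
    # Schedule check: has the next run failed to start on time?
    if now - last_start > expected_interval + grace:
        issues.append(f"missed schedule: last run started {last_start.isoformat()}")
    # Runtime check: compare the latest run to the median of earlier runs.
    earlier = [duration for _, duration in runs[:-1]]
    if earlier:
        typical = median(earlier)
        if typical > 0 and last_duration > slowdown_factor * typical:
            issues.append(f"slow run: {last_duration:.0f}s vs typical {typical:.0f}s")
    return issues


if __name__ == "__main__":
    # Hypothetical daily job starting at 02:00; the latest run took 1500 s instead of ~600 s.
    start = datetime(2024, 5, 1, 2, 0)
    runs = [(start + timedelta(days=i), d) for i, d in enumerate([600, 620, 580, 610, 1500])]
    now = runs[-1][0] + timedelta(hours=5)
    print(check_pipeline_runs(runs, now, expected_interval=timedelta(days=1)))
```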

Why it matters: Pipeline observability is all about ensuring data delivery is reliable and timely. Even if the data content is good, a broken or slow pipeline means the right data won’t reach the right place at the right time. This pillar helps teams quickly troubleshoot ETL/ELT issues, pipeline failures, or bottlenecks. It answers questions like “Did all my data for today load successfully?” and “Where in the pipeline did things break or slow down?” By having visibility into pipeline execution and performance, data engineers can keep data flowing smoothly, meeting data SLAs and preventing surprise outages in dashboards or machine learning workflows.

3. Infrastructure and System Observability

Focus: Where is the data living and what resources is it consuming? This pillar extends observability to the underlying infrastructure and platforms that host data and run data workloads. It includes monitoring:

- Resource utilization: CPU, memory, disk I/O, network throughput, and other system metrics on servers, databases, and cloud services that are part of data pipelines. For instance, if a Spark cluster in Databricks is running hot on memory, or a Snowflake warehouse hits its credit quota, those are infrastructure signals that can affect data delivery.

- System logs and errors: Collecting and analyzing logs from databases, processing engines, and applications for errors or warnings. These might reveal things like disk space issues, connection timeouts, or hardware failures that aren’t obvious at the data pipeline level.

- Service uptime and performance: Ensuring the databases, data lake storage, and pipeline orchestrators (Airflow, etc.) are up and performing within acceptable bounds. If a storage service is slowing down or a critical server is unreachable, data will be impacted.

- Scalability and capacity: Observing trends in storage growth, compute capacity usage, and query performance over time. This helps with capacity planning – e.g., you might see that your data volume has been growing 10% month-over-month and queries are gradually slowing, indicating it’s time to upgrade resources or optimize queries.

- Multi-cloud/hybrid visibility: In many organizations, data systems span on-premise and multiple clouds. Infrastructure observability provides a unified view. For example, you can observe a data pipeline that extracts from an on-prem database and loads into a cloud warehouse, with insight into both environments’ health.
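The capacity-planning example (data growing 10% month over month) reduces to compound growth. With current size S, capacity C, and monthly growth rate g, capacity is reached after the smallest n satisfying S(1+g)^n ≥ C, which in closed form is n = ⌈ln(C/S) / ln(1+g)⌉. The sketch below iterates month by month instead, so floating-point rounding at an exact boundary cannot shift the answer by one. The 50 TB and 80 TB figures are hypothetical.

```python
def months_until_full(current, capacity, monthly_growth, max_months=240):
    """Months until compounding growth reaches capacity, or None if not within max_months."""
    if current >= capacity:
        return 0
    if current <= 0 or monthly_growth <= 0:
        return None  # nothing stored yet, or no growth: compounding never reaches capacity
    size, months = current, 0
    while size < capacity and months < max_months:
        size *= 1 + monthly_growth
        months += 1
    return months if size >= capacity else None


if __name__ == "__main__":
    # Hypothetical: 50 TB stored, 80 TB provisioned, 10% month-over-month growth.
    print(months_until_full(50, 80, 0.10))  # 5
```

Here 50 × 1.1⁴ ≈ 73.2 TB is still under capacity while 50 × 1.1⁵ ≈ 80.5 TB exceeds it, so the answer is five months.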

Why it matters: Even the best data pipeline can falter if the infrastructure underneath is strained or failing. Data observability isn’t complete without infrastructure observability, because the root cause of a data issue might be at the system level (like “the database ran out of disk space, so the pipeline couldn’t insert new records”). By keeping an eye on infrastructure metrics alongside data metrics, you ensure that you catch environmental issues – and you can correlate them with data incidents. This pillar bridges the gap between data engineers and DevOps/SRE concerns, effectively bringing a DataOps perspective: it helps answer “Is the platform supporting our data pipelines healthy and tuned, and how do system issues impact our data?” In summary, robust infrastructure observability means fewer nasty surprises like unplanned downtimes or slowdowns that blindside the data team.

4. Data Usage Observability

Focus: Who is using data and how? This pillar monitors how data is being accessed, queried, and utilized across the organization. It involves:

- User access patterns: Tracking which users or systems are querying which datasets, how frequently, and with what performance. Unusual access patterns can be illuminating – for example, a sudden spike in queries against a particular table might indicate a new use case (or potentially someone abusing a system).

- Query and workload analytics: Observing the behavior of queries (especially in warehouses like Snowflake, BigQuery, etc.). Which queries are the most expensive? Are there recurring slow queries that need optimization? Usage observability can highlight hotspots in data consumption.

- Data dependencies and impact analysis: Understanding which reports or dashboards or ML models depend on which datasets. If a critical data table has an issue, usage observability helps identify who or what will be affected (e.g., “this table is used by Finance dashboard X and Marketing report Y”). This way, the right stakeholders can be alerted and the business impact is clear.

- Data auditing and security signals: Monitoring access logs for compliance – e.g., who accessed sensitive data, were there any unauthorized access attempts, are there patterns that might indicate a security risk or data misuse. This crosses into data security observability but is an important aspect of knowing your data’s usage.

- Usage anomalies: Detecting unusual usage trends – like a normally popular dashboard suddenly not being used (could indicate data issues making it unusable), or conversely a normally quiet dataset becoming hot (could indicate a trend or an unintended usage).
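Impact analysis (“this table is used by Finance dashboard X and Marketing report Y”) is essentially a graph traversal over lineage. Below is a minimal breadth-first sketch, assuming lineage is available as a mapping from each asset to its direct downstream consumers; the asset names and the `dashboard:`/`report:` prefixes used to mark business-facing consumers are hypothetical conventions for this example.

```python
from collections import deque


def downstream_impact(lineage, broken_asset):
    """Breadth-first walk of a lineage graph to find every asset downstream of a broken one.

    lineage: {asset: [direct downstream consumers]}.
    """
    impacted = set()
    queue = deque([broken_asset])
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in impacted:  # guards against revisiting shared descendants
                impacted.add(child)
                queue.append(child)
    return impacted


if __name__ == "__main__":
    # Hypothetical lineage; "dashboard:" / "report:" prefixes mark business-facing consumers.
    lineage = {
        "raw.orders": ["stg.orders"],
        "stg.orders": ["mart.revenue", "report:Marketing Y"],
        "mart.revenue": ["dashboard:Finance X"],
    }
    impacted = downstream_impact(lineage, "raw.orders")
    print(sorted(a for a in impacted if ":" in a))  # ['dashboard:Finance X', 'report:Marketing Y']
```

The same traversal answers the pipeline-dependency question from the previous pillar: if `raw.orders` is delayed, every node it returns is delayed too.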

Why it matters: Data isn’t just produced and stored – ultimately it’s consumed to drive decisions and products. Usage observability closes the loop by ensuring the way data is used is visible and optimized. One benefit is performance tuning: by seeing how users interact with data, you can better optimize data models, indexes, caching, etc., to improve user experience. Another benefit is impact assessment: when something goes wrong in a data pipeline, usage observability immediately tells you what business processes or teams might be impacted, so you can prioritize and communicate effectively. It also reinforces governance – knowing exactly how data flows not just through systems but through users and business units. In summary, data usage observability helps answer “Is data reaching the people and systems that need it in a timely way, and are they encountering any issues using it?” It ensures that data delivers value efficiently and safely.

5. Cost (FinOps) Observability

Focus: How much are data processes costing, and where can we optimize? The final pillar involves keeping an eye on the financial aspects of your data infrastructure and operations – essentially applying FinOps principles to data. Key components include:

- Resource cost tracking: Monitoring the cost of cloud resources used by data workloads (e.g., Snowflake credits consumed per day, storage costs). By attributing costs to pipelines or teams, you gain transparency into how much each data process truly costs.

- Cost anomalies and spikes: Detecting when costs deviate from the norm. For example, if yesterday’s data processing cost double the usual amount, that’s worth investigating (maybe a job ran twice, or an inefficient query scanned a huge table). Early detection of cost anomalies can prevent runaway cloud bills.

- Idle or underutilized resources: Observability can highlight resources that are provisioned but not used (e.g., an oversized cluster or a database that’s rarely queried but running 24/7). Identifying these is key to cost optimization – you might downgrade or turn off resources to save money.

- SLA vs cost analysis: Combining cost and performance data to see if you are over-provisioning. For instance, if a pipeline is running well under its SLA, perhaps you can use a smaller compute instance to save cost without hurting delivery times.

- Chargeback and budgeting data: For larger organizations, cost observability feeds into FinOps dashboards where each team or project’s data usage costs are measured. This encourages accountable usage and helps in budgeting for data infrastructure. Observability tools can provide the data for internal chargebacks or showback models (e.g., “Team A used 30% of this month’s data platform resources, costing $X”).
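Two of the cost signals above, a day costing double the usual amount and a per-team showback (“Team A used 30% … costing $X”), can be sketched as follows. This assumes daily cost totals and per-team credit usage records are already available; `price_per_credit` is a placeholder parameter, not a quoted vendor price, and the factor of 2 simply matches the “double the usual amount” example.

```python
from collections import defaultdict
from statistics import median


def is_cost_spike(daily_costs, factor=2.0):
    """True if the latest day's cost is at least `factor` times the median of earlier days."""
    if len(daily_costs) < 2:
        return False  # not enough history to judge
    *history, latest = daily_costs
    return latest >= factor * median(history)


def showback(usage, price_per_credit):
    """Aggregate (team, credits) records into {team: (share_of_total, cost)}."""
    totals = defaultdict(float)
    for team, credits in usage:
        totals[team] += credits
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {team: (c / grand_total, c * price_per_credit) for team, c in totals.items()}


if __name__ == "__main__":
    print(is_cost_spike([100, 110, 95, 105, 230]))  # True: 230 >= 2 x 102.5
    # price_per_credit is a placeholder value for illustration only.
    print(showback([("A", 20), ("B", 50), ("A", 10), ("C", 20)], price_per_credit=3.0))
```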

Why it matters: As data ecosystems scale, cost control becomes a major concern. Data teams are increasingly expected not just to deliver data but to do so efficiently. Cost observability ensures there is financial transparency and no “hidden spend.” It allows organizations to balance performance with expense – finding opportunities to save without compromising on data delivery. Moreover, linking cost with technical metrics can reveal inefficiencies: maybe a poorly written SQL query is responsible for 50% of a database’s consumption. Without cost observability, such issues often go unnoticed until the cloud bill arrives. At a time when budgets are under scrutiny, demonstrating control over data ROI is crucial. Thus, cost observability helps answer “Are we spending our data budget wisely, and where can we trim fat?” It enables data FinOps, turning what used to be seen as just IT costs into manageable, optimizable metrics for the data team.

Together, these five pillars – data content, data flow, infrastructure, usage, and cost – provide a comprehensive map of what to monitor in data observability. A mature observability practice will cover all of them. It’s worth noting that many first-generation “data observability” tools only focused on one or two pillars (often data quality content and pipeline metrics). However, to truly trust your data and operate efficiently, you need visibility into all five dimensions. This is where modern platforms like DQLabs differentiate themselves, by offering multi-layered observability across every pillar in one solution.

The DQLabs Data Observability Maturity Triangle (Four Stages)

Implementing data observability is a journey. Organizations typically progress through levels of maturity, expanding the breadth and depth of what they monitor and how they govern data health. DQLabs visualizes this progression as a multi-layered Data Observability Triangle – a pyramid of four stages, from the foundational capabilities up to the most advanced. Each layer builds upon the one below it:

Stage 1 – Core Data Observability (Base Layer): Data Health & Reliability – This foundational stage focuses on the basic health checks of data. Organizations at this stage implement automated monitoring for fundamental data quality indicators. The emphasis is on data reliability at a surface level:

- Includes: Data freshness (is data up-to-date?), data volume completeness (is all expected data present each run?), and basic data lineage visibility (where did the data come from). Think of this as getting the essential signs of life from your data.

- Purpose: Stage 1 provides the groundwork for detecting obvious errors and inconsistencies in data. It ensures you have an early warning system for missing, delayed, or blatantly corrupted data before it affects analytics or reporting. For many teams, this is the starting point of observability – catching broken pipelines or stale data as soon as possible to maintain a base level of trust.
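A Stage 1 freshness check can be as small as comparing the latest load timestamp against the time the data normally arrives. This sketch assumes you have already fetched `last_loaded_at` (for instance, the newest load timestamp of a table such as the Snowflake sales data mentioned earlier; that query is not shown) and that the daily load usually lands by 07:00 UTC. The function name and deadline are hypothetical; `now` is passed in so the check is easy to test.

```python
from datetime import datetime, time, timezone


def is_stale(last_loaded_at, now, expected_by):
    """True if no load has landed today and today's expected arrival time has passed.

    last_loaded_at, now: timezone-aware UTC datetimes.
    expected_by: UTC time of day by which the daily load normally arrives.
    """
    deadline = datetime.combine(now.date(), expected_by, tzinfo=timezone.utc)
    if now < deadline:
        return False  # too early to call it late
    start_of_day = datetime.combine(now.date(), time.min, tzinfo=timezone.utc)
    return last_loaded_at < start_of_day


if __name__ == "__main__":
    now = datetime(2024, 5, 1, 8, 0, tzinfo=timezone.utc)
    yesterday = datetime(2024, 4, 30, 6, 5, tzinfo=timezone.utc)
    print(is_stale(yesterday, now, expected_by=time(7, 0)))  # True
```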

Stage 2 – Pipeline, Performance & Cost Observability: Once the basics are in place, the next layer adds richer visibility into how data flows and the efficiency of those flows. This stage expands monitoring to cover end-to-end pipeline operations and resource/cost aspects:

- Includes: Detailed pipeline health and job/task monitoring, performance metrics like latency and throughput for data processes, usage analytics (e.g., which tables or reports are heavily accessed), and cost monitoring (FinOps insights as discussed).

- Purpose: Stage 2 enables teams to troubleshoot ETL/ELT and integration issues more effectively, optimize pipeline performance, and understand resource consumption patterns. In this stage, observability is not just catching errors but also highlighting opportunities to tune and improve data pipelines. For example, you might identify that a particular data transformation is consistently slow and expensive, prompting an optimization. This stage also often introduces better alerting mechanisms – e.g., alerts for pipeline SLA breaches or cost overruns – ensuring the right people know about issues at the right time.

Stage 3 – Advanced Analytics & Semantic Observability: Deep Data Understanding – In this layer, organizations incorporate advanced analytics and AI-driven insights into their observability practice. It goes beyond just monitoring known metrics and brings in anomaly detection and semantic context:

- Includes: Automated anomaly detection for data patterns (catching outliers, sudden variance, or unusual trends in data that simpler rules might miss), data distribution and drift analysis (monitoring the statistical distribution of data over time to catch drifts in values or schema), semantic profiling of data (using AI to understand the meaning of data, e.g., detecting that a column contains emails or addresses and validating accordingly), and business metric observability (tracking key business KPIs for anomalies, not just low-level data metrics).

- Purpose: Stage 3 allows early detection of subtle or complex data issues that baseline monitors might not catch – for instance, a slight trend of data drift that could skew a machine learning model if left unchecked. By leveraging machine learning and semantic understanding, the observability system becomes smarter and more proactive, capable of identifying issues like “This week’s customer transactions have an unusual pattern compared to previous weeks” or “Schema changes in table X have caused a downstream metric to slowly diverge.” Crucially, this stage supports intelligent responses – since the platform “understands” the data context better, it can reduce false positives and even begin to recommend corrective actions. For example, it might identify that an anomaly correlates with a recent code deployment and suggest checking that particular pipeline. This is where an AI-driven platform like DQLabs shines, using machine learning to continuously profile data and self-tune thresholds, so you’re not manually configuring endless rules.
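The article does not say which drift statistic DQLabs uses. As one common choice, the population stability index (PSI) compares how values fall into bins now versus in a baseline period: PSI = Σ (actual − expected) · ln(actual / expected) over bins. A commonly quoted rule of thumb reads values below about 0.1 as stable and above about 0.25 as a significant shift. The sketch below uses fixed, hand-chosen bin edges and hypothetical data; quantile-based edges from the baseline are a frequent alternative.

```python
import math
from bisect import bisect_right


def bin_shares(values, edges):
    """Share of values in each bin; edges are sorted interior cut points (values on an edge go up)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def population_stability_index(baseline, current, edges, eps=1e-6):
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    expected = bin_shares(baseline, edges)
    actual = bin_shares(current, edges)
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        psi += (a - e) * math.log(a / e)
    return psi


if __name__ == "__main__":
    baseline = list(range(1, 11))  # hypothetical last month's values
    shifted = list(range(8, 18))   # this week's values, shifted upward
    edges = [3.5, 7.5]
    print(round(population_stability_index(baseline, baseline, edges), 6))  # 0.0
    print(population_stability_index(baseline, shifted, edges) > 0.25)      # True
```

Every term is non-negative, because (a − e) and ln(a/e) always share a sign, so PSI grows with any movement between bins regardless of direction.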

Stage 4 – Ecosystem & Business Control (Apex Layer): Governance and Enterprise Alignment – The top of the observability maturity triangle is about integrating observability into wider data governance and business processes. At this stage, observability becomes a central nervous system for data across the enterprise:

- Includes: Multi-cloud and hybrid observability (unified monitoring across all environments and data silos), comprehensive metadata and impact analysis (understanding relationships across data assets enterprise-wide), cross-silo lineage and end-to-end traceability (full visibility from source to consumer across organizational boundaries), and policy-driven controls (embedding governance rules, compliance checks, and collaboration workflows into the observability platform).

- Purpose: Stage 4 connects technical observability with business outcomes and oversight. The observability platform not only finds issues but feeds into governance dashboards, compliance reports, and business KPI monitors. Executives and data owners gain trust that data products are being delivered with quality and within policy. Essentially, observability dovetails with data governance: ensuring that any data issues are not just an IT concern but are managed in line with business priorities and regulatory requirements. The organization can deliver “trusted data as a product” at scale – meaning data is treated with the same rigor as a product: monitored, quality-checked, reliable, and aligned to user expectations. At this apex stage, data observability is fully institutionalized; it’s part of the culture and processes, much like application observability is integral to DevOps in mature software organizations.

Using the Maturity Model: Not every company will jump straight to Stage 4 – and that’s okay. The idea of this four-stage model is to guide your roadmap. For example, you might realize you’re doing Stage 1 and bits of Stage 2 today (you monitor freshness and maybe pipeline run times). From there, you can plan to add cost observability and better performance metrics (completing Stage 2), then move into anomaly detection (Stage 3), and eventually tie it into governance (Stage 4). Tools like DQLabs are designed to support this journey. DQLabs provides a multi-layered observability platform that covers all these stages out-of-the-box, allowing teams to progress naturally. With DQLabs, you’re not stuck at basic monitoring – the platform can grow with you into advanced AI-driven analytics and enterprise-wide governance. In fact, DQLabs is unique in offering capabilities at every layer (core data checks, pipeline & cost insights, semantics/AI, and governance integration) within one solution, so you don’t need to stitch together multiple tools as you mature.

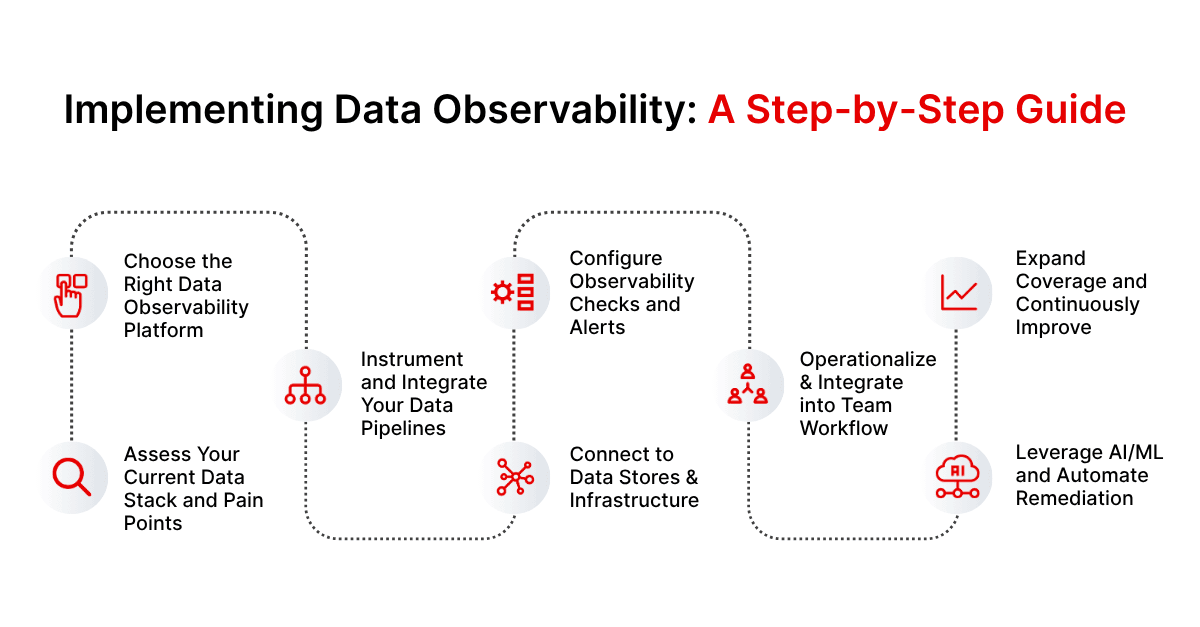

Implementing Data Observability: A Step-by-Step Guide

For technical teams ready to embark on (or accelerate) their data observability journey, it helps to have a concrete game plan. Below is a step-by-step guide to implementing data observability in your organization, from initial assessment to full integration. This guide assumes you want a comprehensive approach, leveraging a platform like DQLabs to cover all bases, and that you’ll be integrating with popular data stack components like Airflow (for orchestration), dbt (for transformations), Snowflake/Databricks (for data platforms), etc.

Step 1: Assess Your Current Data Stack and Pain Points

Begin with understanding where you stand. Map out your data architecture – what sources, pipelines, databases, and BI/AI tools are in play? Identify the key pain points or risks: Do you often face unknown data quality issues? Pipeline failures? Slow queries? Compliance worries? This assessment will highlight which observability pillars are most urgent. For instance, if broken pipelines are a frequent headache, pipeline observability is a priority; if cloud bills are ballooning, cost observability needs attention. Also, inventory any existing monitoring or data quality checks you have – these will feed into your observability plan. Define the goals and KPIs for observability at this stage: e.g., “Reduce data incident resolution time by 50%” or “Ensure no critical dashboard goes down without an alert.”
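A KPI such as "reduce data incident resolution time by 50%" needs a measured baseline before it means anything. The sketch below computes mean time to resolution (MTTR) from an incident log. The three incidents are made-up illustrative values. In practice you would export detection and resolution timestamps from your own ticketing or alerting system.

```python
from datetime import datetime
from statistics import mean

# Illustrative, made-up incident log: (detected_at, resolved_at) pairs.
INCIDENTS = [
    (datetime(2025, 1, 6, 9, 0), datetime(2025, 1, 6, 13, 0)),      # 4 h
    (datetime(2025, 1, 14, 22, 30), datetime(2025, 1, 15, 6, 30)),  # 8 h
    (datetime(2025, 1, 20, 7, 15), datetime(2025, 1, 20, 9, 15)),   # 2 h
]


def mttr_hours(incidents):
    """Mean time from detection to resolution, in hours."""
    return mean(
        (resolved - detected).total_seconds() / 3600
        for detected, resolved in incidents
    )


baseline = mttr_hours(INCIDENTS)
target = baseline * 0.5  # KPI: "reduce data incident resolution time by 50%"
print(f"Baseline MTTR {baseline:.1f} h -> target {target:.1f} h")
```

If you rerun the same calculation each month on new incidents, you can see whether the team is moving toward the target.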

Step 2: Choose the Right Data Observability Platform

Given the complexity of full observability, it’s usually wise to leverage a dedicated platform rather than building everything in-house. Evaluate solutions with an eye on comprehensive coverage (all five pillars, multi-layer insights) and ease of integration with your tools. For example, DQLabs offers connectors and agents that integrate with common orchestrators (like Apache Airflow), ETL tools, cloud warehouses (Snowflake, BigQuery, Redshift), lakehouses (Databricks), and more. When evaluating, consider: Does the platform support your tech stack out-of-the-box? Can it ingest metadata from your pipeline tool (Airflow DAGs, dbt run results)? Can it connect to your data stores with minimal hassle? Also, look at the AI capabilities – a platform like DQLabs that is AI-driven and semantics-powered can save you time by auto-detecting anomalies and tuning thresholds. Once you’ve selected a platform, provision it and set up the basic environment (this might involve deploying an observability service or connecting a SaaS platform to your data).

Step 3: Instrument and Integrate Your Data Pipelines

Integration is a crucial step. Start with your orchestration and pipeline tools:

- For Airflow (or similar schedulers like Prefect or AWS Glue workflows): You’ll want to integrate observability at the DAG/task level. This could mean installing an observability agent/plugin that logs pipeline metadata to DQLabs or configuring Airflow callbacks to send task success/failure and timing info. DQLabs, for instance, can connect via API or hooks to ingest Airflow job statuses, durations, and context. The goal is to capture when each job runs, how long it takes, and whether it failed or was skipped.

- For dbt: If you use dbt for transformations and data modeling, integrate its artifacts (test and run results) into the observability platform. Many observability solutions can consume dbt test results as part of data quality monitoring. You might configure dbt to emit events, or have DQLabs pull the test outputs: after each invocation, dbt writes them to `run_results.json` in its `target/` directory. This way, if a dbt test fails (e.g., a uniqueness test on a primary key), it appears as a data observability alert immediately. Also, dbt’s lineage information (recorded in `manifest.json`) can enrich the observability platform’s lineage view.

- For custom pipelines or ETL frameworks, consider adding logging or metrics emission. Sometimes this is as simple as adding a few lines of code to push metrics (row counts, success/fail flags) to the observability tool’s API, or using open standards like OpenTelemetry for tracing data flows.
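The sketch below illustrates the Airflow and dbt integrations described above. It builds observability events from two sources. The first is the `context` dictionary that Airflow passes to `on_success_callback`/`on_failure_callback`, from which it reads attributes of `task_instance`. The second is the `run_results.json` artifact that dbt writes after a run. The endpoint URL, the event fields, and the `send_event` helper are hypothetical placeholders, not a DQLabs API. Replace them with whatever ingestion interface your platform actually exposes.

```python
import json
from urllib import request

# Hypothetical ingestion endpoint: replace with your platform's real API.
OBSERVABILITY_URL = "https://observability.example.com/api/events"


def send_event(payload, url=OBSERVABILITY_URL):
    """POST one JSON event (hypothetical endpoint; no retries or auth shown)."""
    req = request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with request.urlopen(req, timeout=10) as resp:
        resp.read()


def airflow_task_event(context):
    """Summarize the task instance found in an Airflow callback context."""
    ti = context["task_instance"]
    state = getattr(ti.state, "value", ti.state)  # Airflow states may be enum members
    return {
        "source": "airflow",
        "dag_id": ti.dag_id,
        "task_id": ti.task_id,
        "state": state,
        "duration_s": ti.duration,
        "try_number": ti.try_number,
    }


def report_task(context):
    """Pass as on_success_callback / on_failure_callback on a task or DAG."""
    send_event(airflow_task_event(context))


def dbt_test_events(run_results):
    """Events for dbt tests that failed, errored, or warned in run_results.json."""
    events = []
    for result in run_results.get("results", []):
        if not result["unique_id"].startswith("test."):
            continue  # models, seeds, and snapshots are not tests
        if result["status"] in ("fail", "error", "warn"):
            events.append({
                "source": "dbt",
                "test": result["unique_id"],
                "status": result["status"],
                "failures": result.get("failures"),
                "message": result.get("message"),
            })
    return events
```

After `dbt test` or `dbt build`, you could load `target/run_results.json` with `json.load` and pass the result to `dbt_test_events`. In Airflow, you could attach `report_task` through `default_args` or on individual operators.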

Step 4: Connect to Data Stores and Infrastructure

Next, integrate your data storage and processing platforms:

- For data warehouses and lakes (Snowflake, Databricks, BigQuery, etc.): Enable the observability platform to collect relevant telemetry. For example, connect to Snowflake’s Information Schema or to the views in its `SNOWFLAKE.ACCOUNT_USAGE` schema (such as `QUERY_HISTORY` and `WAREHOUSE_METERING_HISTORY`) to get query logs, data volumes, performance stats, and credit usage. DQLabs can use native connectors to pull these metrics regularly. Similarly, for Databricks or Spark, integration might involve reading job logs, using cloud provider monitoring (such as CloudWatch for AWS Glue), or collecting Databricks’ own job and cluster metrics. Ensure you are capturing things like query execution times, row counts processed, errors, and resource usage from these systems.

- For infrastructure (servers, VMs, containers running databases or data apps): You might deploy lightweight agents or use existing monitoring tools’ feeds to pipe system metrics into the observability platform. For instance, if you already use something like Prometheus or Datadog for infra monitoring, see if you can integrate those metrics. DQLabs might allow ingestion of custom metrics or integration with cloud monitoring APIs to get CPU/memory usage from your nodes.

- Don’t forget streaming pipelines if you have them (Kafka, Spark streaming, etc.). Observability should also cover streaming job liveness, lag, and throughput. Integrate those via connectors or metrics endpoints.
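For streaming jobs, consumer lag is the usual liveness signal. It measures how far a consumer group's committed offset trails the end of each partition. The minimal sketch below assumes you already fetch log-end and committed offsets per partition, for example with kafka-python's `KafkaConsumer.end_offsets()` and `committed()`. The threshold and sample numbers are illustrative.

```python
from typing import Dict, List, Optional


def consumer_lag(end_offsets: Dict[int, int],
                 committed: Dict[int, Optional[int]]) -> Dict[int, Optional[int]]:
    """Per-partition lag: log-end offset minus the group's committed offset.
    None means the group has never committed on that partition (lag unknown)."""
    lag: Dict[int, Optional[int]] = {}
    for partition, end in end_offsets.items():
        done = committed.get(partition)
        lag[partition] = None if done is None else end - done
    return lag


def partitions_over_lag(lag: Dict[int, Optional[int]], max_lag: int) -> List[int]:
    """Partitions whose lag exceeds max_lag, or whose lag is unknown."""
    return sorted(p for p, value in lag.items() if value is None or value > max_lag)


def throughput_per_s(prev_end: Dict[int, int], curr_end: Dict[int, int],
                     seconds: float) -> float:
    """Messages appended per second across partitions between two offset snapshots."""
    appended = sum(end - prev_end.get(p, 0) for p, end in curr_end.items())
    return appended / seconds
```

Treating an unknown lag as an alert condition is a deliberate choice here. A partition that has never been consumed is usually worth investigating, not silently ignoring.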

The integration phase can be iterative – you don’t have to wire up everything at once. Often, teams start with a critical pipeline or two and a couple of databases, then expand over time. The key is establishing a feed of telemetry (metadata and metrics) from every layer: data, pipeline, infrastructure. Modern platforms will do a lot of the heavy lifting via connectors.

Step 5: Configure Observability Checks and Alerts

With data flowing into the observability platform, the next step is to configure what “normal” looks like and how you want to be alerted when things deviate. Here’s what to do:

- Define baselines and rules: Many checks might be auto-generated by the platform (for example, DQLabs can auto-detect baseline data freshness or distribution ranges). Start by reviewing these and adjusting if necessary. Also define any custom business rules that are crucial (e.g., “Total daily orders should never be below 1000” or “Null rate in critical field X must stay below 0.1%”). The platform might allow SQL-based rules or no-code rule builders for this.

- Set alert policies: Decide on alerting channels and severities. For example, you might want high-severity alerts (like a pipeline failure or a significant data anomaly in a key table) to page an on-call engineer via PagerDuty or Microsoft Teams/Slack, whereas lower-severity ones (like a non-critical table delayed by 30 minutes) could just be an email or logged for review. DQLabs and similar tools let you configure alert thresholds and routes. Take advantage of features to reduce noise – e.g., alert suppression if multiple related alerts trigger, or grouping alerts by incident.

- Incident management integration: Integrate with your ticketing or incident system (like Jira or ServiceNow) if applicable. A good observability platform can auto-create incident tickets with context. Setting this up ensures that when an alert fires, it doesn’t get lost – it becomes a task that someone will triage.

- Dashboard setup: Create centralized dashboards for an overview. For instance, one dashboard could show the status of all key pipelines (success/fail, last run time, data recency), another the data quality metrics of critical tables, and another cost trends. DQLabs provides pre-built dashboard templates. Customize them to fit your team’s needs (e.g., a NOC-style monitoring screen for the data team).
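You can express the rules and routing described above as plain data plus a small evaluator. This is a sketch, not a DQLabs configuration format. The metric names (`daily_orders`, `field_x_null_rate`, `noncritical_delay_min`) and the channel names are hypothetical. A real platform would compute the metrics from your tables for you.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Rule:
    name: str
    is_healthy: Callable[[Dict[str, float]], bool]  # True when the check passes
    severity: str  # "high" pages someone, "low" only notifies


RULES = [
    Rule("daily_orders_min", lambda m: m["daily_orders"] >= 1000, "high"),
    Rule("field_x_null_rate", lambda m: m["field_x_null_rate"] < 0.001, "high"),  # 0.1%
    Rule("noncritical_table_delay", lambda m: m["noncritical_delay_min"] <= 30, "low"),
]

# Hypothetical routing table: severity -> alert channel.
ROUTES = {"high": "pagerduty", "low": "email"}


def evaluate(metrics: Dict[str, float]) -> List[Tuple[str, str]]:
    """Return (rule name, channel) for every rule whose check fails."""
    return [(r.name, ROUTES[r.severity]) for r in RULES if not r.is_healthy(metrics)]


def null_rate(values) -> float:
    """Share of None values in a column sample."""
    values = list(values)
    return sum(v is None for v in values) / len(values) if values else 0.0
```

Keeping severity on each rule, not in the evaluator, lets you re-route a noisy check (for example, downgrading it from paging to email) without touching the logic.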

At this stage, you’re essentially codifying your data SLAs and expectations into the observability tool. Be prepared to iterate – initial thresholds might be too tight or too loose; you’ll refine these as you learn what genuine anomalies are.

Step 6: Operationalize & Integrate into Team Workflow

Now that observability is live, fold it into your daily operations:

- Embed in Dev/DataOps processes: For new pipelines or changes, make adding observability checks a standard part of the development checklist. For example, if a new data source is onboarded, ensure appropriate quality monitors are set up in DQLabs for that data. Treat observability config as code where possible (some platforms allow configuration via code or YAML, which you can version control).

- Team training and roles: Train the data engineering/analytics team on how to use the observability dashboards and respond to alerts. Define clear ownership: who is responsible for investigating certain types of alerts? Perhaps data engineers handle pipeline failures, data stewards handle data quality anomalies, etc. Establishing this ownership and runbook for common issues will make the response more consistent.

- Integrate with communication channels: Make sure alerts and insights are visible where your team already communicates. If your team lives in Slack or Teams, integrate DQLabs to post alerts there. Perhaps set up a dedicated “#data-observability” channel for real-time notifications and discussions around incidents.

- Closed-loop resolution: Encourage a practice where every alert or incident is reviewed and resolved. If something was a false alarm or non-actionable, adjust the monitors so it doesn’t distract in the future. This continuous improvement keeps the observability system effective and trustworthy.
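Treating observability configuration as code can be as simple as keeping monitor definitions in version control and validating them in CI. The sketch below uses JSON, to stay within the standard library, with a hypothetical schema. It checks that every monitor has an owner and a valid severity, flags duplicate names, and reports datasets that have no monitor at all. That last check covers the onboarding item above.

```python
import json
from typing import Dict, Iterable, List

# Hypothetical monitor definitions, versioned alongside pipeline code.
MONITORS_JSON = """
[
  {"name": "orders_freshness", "dataset": "analytics.orders", "type": "freshness",
   "owner": "data-engineering", "severity": "high", "max_delay_minutes": 60},
  {"name": "orders_amount_nulls", "dataset": "analytics.orders", "type": "null_rate",
   "owner": "data-stewards", "severity": "low", "column": "amount", "max_rate": 0.001}
]
"""

REQUIRED_KEYS = {"name", "dataset", "type", "owner", "severity"}
SEVERITIES = {"high", "low"}


def validate(monitors: List[Dict]) -> List[str]:
    """Return human-readable problems; an empty list means the config is valid."""
    problems = []
    seen_names = set()
    for i, monitor in enumerate(monitors):
        missing = REQUIRED_KEYS - set(monitor)
        if missing:
            problems.append(f"monitor #{i}: missing {sorted(missing)}")
        if "severity" in monitor and monitor["severity"] not in SEVERITIES:
            problems.append(f"monitor #{i}: unknown severity {monitor['severity']!r}")
        name = monitor.get("name")
        if name in seen_names:
            problems.append(f"monitor #{i}: duplicate name {name!r}")
        seen_names.add(name)
    return problems


def unmonitored(datasets: Iterable[str], monitors: List[Dict]) -> List[str]:
    """Datasets that no monitor covers, e.g. a newly onboarded source."""
    covered = {m.get("dataset") for m in monitors}
    return sorted(set(datasets) - covered)
```

In CI, you might fail the build whenever `validate(...)` or `unmonitored(...)` returns a non-empty list.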

Step 7: Leverage AI/ML and Automate Remediation

With the basics running, take advantage of the more advanced capabilities of the observability platform:

- Enable anomaly detection features on key datasets if they aren’t already on. For example, DQLabs can automatically detect anomalies in data distributions: turn this on for critical metrics or tables and let it start learning. Over time, this may catch subtler issues than any rule you configured.

- Utilize machine learning for threshold tuning. Instead of maintaining static thresholds that might create false alarms as data evolves, allow the platform’s ML to adjust them. DQLabs’ autonomous features can, for instance, learn that web traffic is 10x higher on Fridays and adjust expectations accordingly, so you only get alerted when Friday deviates from the normal Friday pattern.

- Explore automated actions. This is cutting-edge in observability: for certain scenarios, you can automate the response. For instance, if a known issue occurs (like “the data in table X is 2 days stale”), an automated job might restart a stuck pipeline or revert to a backup data source. Platforms might allow custom scripts or functions to trigger on alerts. Even if you don’t fully automate, having suggested actions (like run this dbt model or notify this data owner) can streamline the resolution.
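The Friday example describes seasonality-aware thresholds. The sketch below illustrates the idea, not DQLabs' actual models, using one simple technique: a per-weekday baseline (the mean and sample standard deviation for each day of the week) with a k-sigma band around it. The choice of k=3 and the minimum of two observations per weekday are assumptions to tune.

```python
from collections import defaultdict
from datetime import date
from statistics import mean, stdev
from typing import Dict, List, Tuple


def weekday_baselines(history: List[Tuple[date, float]]) -> Dict[int, Tuple[float, float]]:
    """Mean and sample standard deviation of the metric per weekday (Mon=0 .. Sun=6)."""
    by_weekday: Dict[int, List[float]] = defaultdict(list)
    for day, value in history:
        by_weekday[day.weekday()].append(value)
    # stdev needs at least two observations per weekday.
    return {wd: (mean(vals), stdev(vals)) for wd, vals in by_weekday.items() if len(vals) >= 2}


def is_anomalous(day: date, value: float,
                 baselines: Dict[int, Tuple[float, float]], k: float = 3.0) -> bool:
    """True if `value` lies more than k standard deviations from that weekday's mean."""
    if day.weekday() not in baselines:
        return False  # not enough history for this weekday yet
    mu, sigma = baselines[day.weekday()]
    return abs(value - mu) > k * sigma
```

With this approach, a Friday is compared only with past Fridays. A value that is normal on a Friday would therefore be flagged on a Monday, and vice versa.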

Step 8: Expand Coverage and Continuously Improve

Finally, treat data observability as an ongoing program:

- Onboard more systems: As your data landscape grows, keep integrating new sources into the observability framework. Don’t leave any significant blind spots – even legacy on-prem databases or new SaaS data sources should funnel their telemetry in.

- Refine and scale: Review your observability metrics and incident logs periodically. Are there repeat issues that point to a deeper problem? (e.g., a certain pipeline fails frequently – maybe it needs redesign or better error handling). Use observability insights to drive improvements in data architecture and processes.

- Performance and cost tuning: As the volume of telemetry grows, ensure your observability platform is scaling well (DQLabs is built to handle large-scale metadata, but keep an eye on any throughput limits or data retention settings). Also, monitor the cost of observability itself – storing a lot of metadata has a cost, so implement retention policies (maybe you don’t need log data older than 1 year, etc.).

- Stakeholder reporting: Create summary reports for leadership on data health. For instance, monthly trends of incidents, improvements in SLA adherence, cost savings identified by observability, etc. This shows the value of the initiative and helps secure ongoing support.
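Two of the items above, retention policies and SLA adherence reporting, come down to simple bookkeeping over timestamps. The sketch below assumes two hypothetical inputs: each scheduled delivery is recorded as `(deadline, delivered_at)`, and each telemetry record carries a `ts` datetime. The 365-day default mirrors the one-year example above.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Dict, List, Optional, Tuple


def monthly_sla_adherence(runs: List[Tuple[datetime, Optional[datetime]]]) -> Dict[str, float]:
    """Fraction of deliveries on or before their deadline, per calendar month.
    Each run is (deadline, delivered_at); delivered_at=None means it never arrived."""
    totals: Dict[str, int] = defaultdict(int)
    on_time: Dict[str, int] = defaultdict(int)
    for deadline, delivered in runs:
        month = deadline.strftime("%Y-%m")
        totals[month] += 1
        if delivered is not None and delivered <= deadline:
            on_time[month] += 1
    return {month: on_time[month] / totals[month] for month in sorted(totals)}


def apply_retention(records: List[dict], now: datetime, days: int = 365) -> List[dict]:
    """Keep only telemetry records whose 'ts' falls inside the retention window."""
    cutoff = now - timedelta(days=days)
    return [r for r in records if r["ts"] >= cutoff]
```

A month-by-month adherence series like this is often enough for a leadership summary, and it can sit next to incident counts.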

By following these steps, technical teams can methodically roll out data observability and embed it into their data operations. It might seem like a lot, but modern platforms like DQLabs are designed to make this process as smooth as possible – offering connectors for integration, templates for monitors, and AI to minimize manual setup. The payoff is huge: you transform from a reactive team putting out data fires into a proactive team ensuring data reliability and excellence as part of the everyday routine.

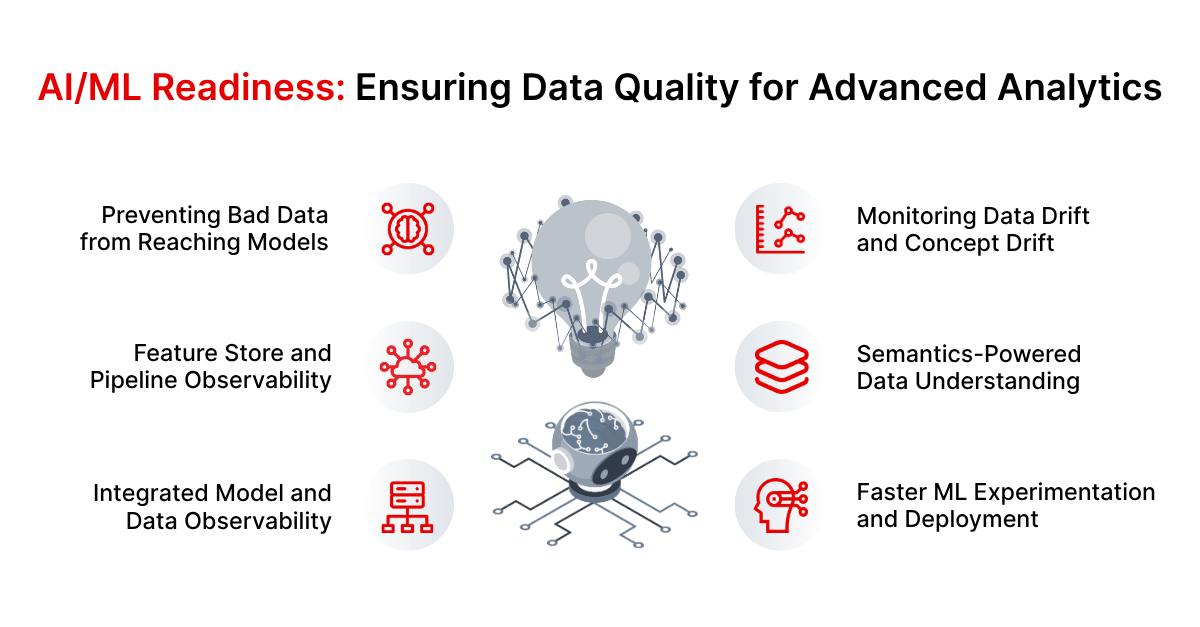

AI/ML Readiness: Ensuring Data Quality for Advanced Analytics

One of the most compelling use cases for data observability is ensuring AI and machine learning readiness. In the realm of AI, the adage “garbage in, garbage out” holds especially true – the effectiveness of ML models and AI systems is directly tied to the quality and stability of the data feeding them. Here’s how data observability helps technical teams guarantee that their AI/ML initiatives are built on a solid foundation of reliable data:

- Preventing Bad Data from Reaching Models: Machine learning models are extremely sensitive to data issues. A slight drift in data distribution or a batch of corrupted training data can degrade model performance or even cause erroneous outcomes (for example, a fraud detection model might start flagging too many false positives if the input data distribution shifts unnoticed). Data observability’s continuous monitoring (particularly the advanced anomaly detection and data drift analysis of Stage 3 maturity) will catch these issues. If an input feature for a model begins to show unusual values or if a data pipeline feeding a model fails, observability alerts the team before the model retraining or inference yields bad results. This allows you to pause, fix the data, and retrain if needed, thereby avoiding deploying a model on tainted data.

- Monitoring Data Drift and Concept Drift: In production ML, two important phenomena are data drift (the input data distribution changes over time) and concept drift (the relationship between input and output changes, often due to real-world shifts). While model monitoring tools focus on the model’s performance metrics, data observability focuses on the underlying data. It can highlight, for example, that the average value of a key feature has been gradually increasing each week – a signal of drift. Or maybe the categorical mix in a feature has changed (say, a new product category appears in e-commerce data). By detecting drift early through observability, data scientists can retrain or adjust models proactively, maintaining model accuracy. Essentially, observability acts as an early warning system for model decay by keeping tabs on data characteristics.

- Feature Store and Pipeline Observability: Many organizations use feature stores and complex pipelines to generate features for ML. If you’re a data engineer supporting data scientists, you know that those pipelines must be rock-solid. Data observability can be applied to the ML feature pipelines just like any other ETL. For instance, if you have a nightly job computing aggregates for user behavior features, observability ensures that job runs and the features look sane (no sudden drop to zero or spike to infinity). DQLabs can monitor feature data quality metrics and freshness, so your data scientists are never training on outdated or incomplete features. Moreover, lineage tracking helps – if a model output seems off, you can trace back which data source or intermediate feature might be responsible, thanks to the lineage metadata captured.

- Semantics-Powered Data Understanding: One unique advantage of DQLabs in AI readiness is its semantics-powered approach. The platform can infer the semantic meaning of data fields (like detecting a column is an address, a name, a geolocation, etc.). This semantic awareness helps in contextually monitoring data for AI. For example, if a feature is supposed to be a percentage but suddenly contains values >100, a semantics-driven rule can catch that as an anomaly. By understanding what the data represents, the observability platform reduces false alarms and focuses on true issues that could confuse a model. It’s like having a domain-aware data assistant double-checking the inputs to your AI.

- Integrated Model and Data Observability: While this article is primarily about data observability, it’s worth noting the convergence of data and ML observability in modern architectures. Some advanced setups (and indeed where the industry is heading) incorporate model performance metrics into the same observability framework. For instance, alongside data metrics, you might also track things like model prediction latency or accuracy on a rolling basis. DQLabs, by ensuring the data is reliable, indirectly supports model observability – because often when a model’s performance dips, the root cause is data-related (e.g., data drift or an upstream data bug). By having both data and model signals, teams get a complete picture of AI system health. If your organization is doing MLOps, consider extending data observability practices to cover feature pipelines and even model outputs.

- Faster ML Experimentation and Deployment: When data scientists trust the data (thanks to observability) and know that any issues will be caught quickly, they can iterate faster. Less time is spent double-checking if training data is correct or debugging model issues that turn out to be data problems. This means models move to production more quickly and with greater confidence. Moreover, once in production, the combination of observability and alerting ensures that any data issue triggers a response – for example, halting a model deployment if a critical input feature goes haywire. This safety net is crucial for high-stakes AI applications.
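The sketch below makes drift detection concrete with the Population Stability Index (PSI), one widely used drift score. It compares a current sample of a feature against a reference sample, using equal-width bins derived from the reference. A common rule of thumb reads a PSI below 0.1 as stable and above 0.25 as significant drift, but these cutoffs are conventions, not guarantees. The sketch also includes a simple range check for the percentage example above. The bin count and the epsilon floor are assumptions to tune.

```python
import math
from typing import List, Sequence


def psi(reference: Sequence[float], current: Sequence[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `current` relative to `reference`."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference column

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values to edge bins
        # Floor empty bins at eps so the log term stays finite.
        return [max(c / len(values), eps) for c in counts]

    ref, cur = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def out_of_range_share(values: Sequence[float], low: float = 0.0, high: float = 100.0) -> float:
    """Share of values outside [low, high], e.g. a 'percentage' feature above 100."""
    if not values:
        return 0.0
    return sum(1 for v in values if not low <= v <= high) / len(values)
```

You could run `psi` per feature on each new batch, with the training sample as the reference. This gives a warning before retraining or inference consumes drifted data.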

In summary, data observability is an essential pillar of AI/ML readiness. It provides the assurance that the data powering models is accurate, consistent, and timely. Given that many organizations in 2025 are scaling up AI projects, having observability in place is like having quality control on the assembly line – it keeps your AI outputs high-quality. DQLabs, with its AI-driven anomaly detection and semantic engine, is particularly well-suited to guard the interfaces between data engineering and data science. The result is trusted, robust AI models that deliver business value without nasty surprises from the data side.

FinOps Observability: Managing Data Costs and Efficiency

In the era of cloud data platforms and massive data workloads, financial observability (FinOps) has become a crucial aspect of data engineering. FinOps observability is all about keeping tabs on the cost and efficiency of your data infrastructure and operations – ensuring you get the most bang for your buck. Let’s explore how implementing cost observability helps technical teams and how DQLabs supports this use case:

- Transparent Visibility into Data Spend: One of the first benefits of cost observability is simply making the costs visible and attributable. In a complex pipeline that touches storage, compute, and various services, it’s not always obvious which process or team is driving costs. By instrumenting cost metrics, you can break down expenses by pipeline, service, or user. For example, you might discover that your daily ETL of clickstream data in Spark costs $50/day in AWS compute and $10/day in storage I/O, whereas your nightly sales report in Snowflake costs 100 credits per run. DQLabs can aggregate and display such cost metrics alongside pipeline performance. This transparency allows data teams to justify expenditures and identify high-cost areas at a glance.

- Detecting Cost Spikes and Anomalies: Have you ever been surprised by a cloud bill that’s much higher than expected? Cost observability aims to prevent that. By monitoring spend in near real-time, you can set up alerts for unusual spikes. For instance, if a normally $100/day pipeline suddenly incurs $300 one day, you get alerted immediately (not at month-end when the bill arrives). Such a spike could be due to a code bug (e.g., a query with an accidental cross join that multiplied the volume of data processed) or an unintended duplicate run of a job. With observability, you catch it right away and can roll back the change or kill the offending process. This not only saves money but also signals potential data issues (often, cost spikes correlate with something going wrong, like a stuck loop processing the same data repeatedly).

- Optimizing Resource Usage: Cost observability goes hand-in-hand with performance tuning. By correlating cost data with usage and performance metrics, engineers can find inefficiencies. For example, you might observe via DQLabs that a particular report is run by analysts frequently, and its queries always scan a huge table, making it costly and slow. That insight can drive you to create a summary table or materialized view to cut both cost and latency. Or you might see that you have an oversized cluster for a job that uses only 50% of resources; downsizing it could save money with no performance hit. Over time, these optimizations add up. FinOps observability essentially provides the feedback loop needed for continuous cost optimization in your data environment.

- Budgeting and Chargeback: In larger organizations, different departments or projects might share a data platform. Cost observability enables chargeback or showback models by accurately measuring usage per team. For example, you can report that “Team A consumed 40% of the warehouse credits this quarter, and Team B 20%, etc.” This fosters accountability and can even encourage teams to optimize their own usage when they know they’re being measured. Even if you don’t formally charge back, having budgets and tracking against them (with alerts when approaching limits) is invaluable. You could set monthly cost budgets on a per-pipeline or per-dataset basis. DQLabs could notify if a budget is likely to be exceeded based on current trends, allowing you to adjust proactively (perhaps by reducing retention or query frequency).

- Aligning Cost with Value (ROI): Perhaps the most strategic aspect of FinOps observability is facilitating discussions about ROI of data. When you know the cost of delivering a particular data product (say a dashboard costs $X per month to keep updated), you can weigh it against the value it provides. If something is very costly but not very useful, you might decide to scale it down. Conversely, if a pipeline is very valuable, you might invest more to ensure its reliability or performance. Observability data helps make these decisions data-driven. It elevates the data team’s role from a cost center to a value center, because you can articulate costs and benefits clearly.

- DQLabs Capabilities for FinOps: DQLabs, being an autonomous observability platform, is equipped to monitor cost metrics across cloud providers and tools. It can ingest billing data or usage data from platforms like Snowflake (which provides credit usage logs) or cloud services (through APIs). DQLabs can then apply anomaly detection to cost just as it does to data metrics – so you get the same intelligent alerting for cost deviations. Moreover, because it correlates cost with pipeline events, it can help pinpoint exactly why a cost spike happened (e.g., linking it to a specific pipeline run or query). By having cost observability in the same interface as data quality and performance, engineers have a one-stop-shop to balance performance, quality, and cost trade-offs. For example, if speeding up a pipeline would require doubling resources (and cost), you can weigh that decision seeing both the performance improvement and cost increase in one place.
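The spike alert, budget forecast, and showback ideas above reduce to a few lines of arithmetic once you have a daily cost series and per-team usage. Per-team usage could, for instance, be credits aggregated from Snowflake's `WAREHOUSE_METERING_HISTORY` view. This sketch compares each day against a trailing median to find spikes and uses a naive linear projection for budgets. Both are simple stand-ins, not DQLabs' anomaly detection. The 2x factor and 7-day window are assumptions.

```python
from statistics import median
from typing import Dict, List


def cost_spikes(daily_costs: List[float], factor: float = 2.0, window: int = 7) -> List[int]:
    """Indexes of days costing more than `factor` x the median of the previous `window` days."""
    spikes = []
    for i in range(window, len(daily_costs)):
        reference = median(daily_costs[i - window:i])
        if daily_costs[i] > factor * reference:
            spikes.append(i)
    return spikes


def projected_month_spend(spend_to_date: float, day_of_month: int, days_in_month: int) -> float:
    """Naive linear projection: average daily spend so far times days in the month."""
    return spend_to_date / day_of_month * days_in_month


def usage_shares(credits_by_team: Dict[str, float]) -> Dict[str, float]:
    """Each team's fraction of total credits, for showback or chargeback reports."""
    total = sum(credits_by_team.values())
    return {team: credits / total for team, credits in credits_by_team.items()}
```

For example, with a hypothetical $4,000 monthly budget, `projected_month_spend(1800, 12, 30)` returns 4500.0, which would justify an early warning.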

In practice, FinOps observability might reveal scenarios such as:

- A machine learning model retraining job that runs daily but could run weekly, cutting its compute cost by roughly 85% (one run per week instead of seven) with negligible impact on accuracy.

- An outdated backup pipeline still running (and costing money) that could be turned off.

- A particular team running extremely expensive ad-hoc queries in the data warehouse, prompting training or the creation of a governed data mart for them.

By acting on insights like these, teams can achieve substantial savings; without data observability, such opportunities tend to stay hidden. In the end, cost observability ensures that your data platform is not just technically sound, but also financially efficient, which is increasingly a key success factor for data teams.

Best Practices for Scaling Data Observability (and Pitfalls to Avoid)

Implementing data observability is one thing; scaling it across a complex, hybrid environment and maintaining its efficacy is another. Here are some best practices to ensure long-term success, as well as common pitfalls to avoid:

Best Practices

- Start Small, Then Expand: Begin with a pilot on a critical data pipeline or a single domain. Gain quick wins by applying observability to a high-impact area (for example, your main data warehouse ETL). This helps demonstrate value and fine-tune the configuration. Once proven, incrementally expand observability coverage to more datasets and pipelines. This phased approach prevents overwhelm and lets your team adapt processes gradually.

- Define Clear Data SLAs and SLOs: Establish service-level objectives (SLOs) for data quality and timeliness in collaboration with business stakeholders. For example, “Dashboard X will be updated by 8am daily and data accuracy verified.” Using observability, you then monitor against these SLOs. Having clear targets helps the team focus on what’s most important and measure success. Make these SLOs visible – maybe in a dashboard of their own or an internal wiki – so everyone knows what the expectations are.

- Ensure Cross-Team Collaboration (Embed DataOps Culture): Observability works best when it’s embraced beyond just the data engineering team. Bring in data analysts, scientists, and even business data owners into the observability fold. For instance, set up weekly or monthly reviews of data health with representatives from various teams. Encourage a culture where if an analyst spots a data issue, they check the observability dashboard and tag the respective engineer. Similarly, when engineering resolves an incident, they update stakeholders transparently. This collaborative approach aligns everyone towards the common goal of reliable data and prevents an “us vs them” mentality.

- Maintain a Data Observability Runbook: Document the standard operating procedures for handling different types of alerts. For example, if a “data freshness delay” alert triggers, the runbook might say: check pipeline XYZ logs, notify Data Owner A if delay exceeds 2 hours, etc. Over time, build a knowledge base of known issues and resolutions. DQLabs can often capture some context (like error messages or lineage) in alerts – incorporate that into your runbook steps. A well-maintained runbook speeds up onboarding new team members and ensures consistent responses.

- Leverage Automation and Integration: Use the automation capabilities of your observability tools to the fullest. This includes auto-baselining, automatic anomaly detection, and even automated ticketing. The less manual overhead, the better your system will scale. For integration, try to hook observability into CI/CD pipelines too – for instance, run a suite of data quality checks (via the observability platform) as part of your deployment pipeline for a new data model. Some teams even implement “data unit tests” that are essentially observability rules run on a sample data set before code merges. The more you can treat observability as code and integrate it, the more robust and repeatable it becomes.

- Monitor the Monitors (Meta-Observability): Keep an eye on the observability system itself. Ensure your observability platform’s connectors and agents are all running properly and that data is flowing into it. If DQLabs is self-hosted, monitor its resource usage. If it’s SaaS, check you’re within any usage limits. You might set up a simple heartbeat check – e.g., an alert if no telemetry has been received from a certain pipeline in X hours (which could mean the pipeline or the monitoring of it is down). This sounds obvious, but one pitfall is to “set and forget” the observability tool and not notice if it stops receiving data from a source.

- Secure Your Telemetry and Ensure Privacy: Observability often involves collecting metadata and sometimes sample data from production. Be mindful of security and privacy. Use encryption for data in transit to your observability platform. If you’re logging data values, consider masking sensitive information (DQLabs provides features to handle PII safely). Also enforce access controls – not everyone should see all observability data if it contains sensitive info. Treat the observability logs as an asset that needs protection, just like the primary data.
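The "monitor the monitors" heartbeat can be a very small check. The sketch below flags any expected source that has never reported, or that has been silent longer than its allowed window. The source names, the `last_seen` mapping, and the 6-hour default are hypothetical. In practice, the last-seen timestamps would come from your observability platform's ingestion records.

```python
from datetime import datetime, timedelta
from typing import Dict, Iterable, List, Optional

DEFAULT_MAX_SILENCE = timedelta(hours=6)  # assumption: tune per source


def silent_sources(
    expected: Iterable[str],
    last_seen: Dict[str, datetime],
    now: datetime,
    max_silence: Optional[Dict[str, timedelta]] = None,
) -> List[str]:
    """Sources with no telemetry at all, or none within their allowed silence window."""
    max_silence = max_silence or {}
    silent = []
    for source in expected:
        seen = last_seen.get(source)
        limit = max_silence.get(source, DEFAULT_MAX_SILENCE)
        if seen is None or now - seen > limit:
            silent.append(source)
    return sorted(silent)
```

A per-source window matters because a daily batch feed and a streaming job have very different notions of "too quiet".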

Common Pitfalls to Avoid

- Alert Overload (Noise Fatigue): A very common pitfall is turning on too many alerts or setting thresholds too tight, leading to a flood of warnings and false positives. When everything is “red,” teams start ignoring the alerts – defeating the purpose of observability. Avoid this by tuning alerts carefully. Use severity levels, as mentioned, and take advantage of DQLabs’ intelligent alerting to filter noise (for example, its semantic layer can avoid raising 10 separate alerts that are essentially caused by one root issue). Periodically review your alert volume; if certain alerts haven’t provided actionable value in a while, adjust or disable them.

- Siloed Implementation: Another mistake is treating data observability as just a tooling project for the data engineering team, without process or cultural change. You might deploy a great platform but not inform end-users or not loop in DevOps, etc. This siloed approach leads to underutilization – e.g., issues get flagged but no one outside the data team knows, so business users keep discovering problems independently. Avoid going it alone – involve stakeholders early, and evangelize the observability insights to all data consumers. The more eyes on the data health metrics, the better.

- Neglecting On-Prem and Legacy Systems: In hybrid environments, it’s tempting to focus observability on the shiny new cloud data warehouse and ignore that old Oracle database or that mainframe feed. But a chain is only as strong as its weakest link. If legacy systems feed critical data, they need observability too. It might be trickier (perhaps fewer APIs or need for custom scripts), but not including all parts of your data landscape can leave blind spots. Those blind spots often come back to bite (imagine the one system you didn’t monitor is the one that delayed a critical dataset and you had no alert).

- Over-Reliance on Manual Effort: Some teams treat observability like a one-time setup and then rely on manual eyes to catch things. For example, they set up dashboards but expect someone to watch them constantly, or they create alerts that go to an email nobody checks at 2 AM. This is essentially recreating a manual monitoring regime. To truly scale, observability must be automated and actionable. If an alert fires at 2 AM, it should page the on-call or at least be seen by someone who will act. If you find your observability output is not being actively consumed (or worse, being checked only after an incident as a forensic tool), you need to adjust – whether it’s better alert routing or more automation in response.

- Ignoring Continuous Improvement: Data systems evolve – new data sources, changing usage patterns, etc. A pitfall is to assume the observability rules you set up initially will forever remain valid. Without periodic recalibration, you might have thresholds that are no longer appropriate (leading to misses or false alarms). Avoid this by scheduling regular reviews of your observability configuration. Many teams hold quarterly “tuning” sessions. With DQLabs’ autonomous features, some of this is handled automatically (auto-thresholding), but you should still update rules when, say, a dataset’s volume permanently doubles after a business change.
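To make the advice on severity levels and alert routing concrete, here is a minimal Python sketch that groups alerts sharing a root cause into a single incident and then routes each incident by severity. It is not DQLabs’ implementation; the `Alert` record, the `root_cause` field (assumed to be resolved upstream, for example from lineage), the severity names, and the `page-on-call`/`team-channel` destinations are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical severity scale; a higher rank means more urgent.
SEVERITY_RANK = {"low": 0, "medium": 1, "high": 2, "critical": 3}


@dataclass(frozen=True)
class Alert:
    dataset: str
    check: str
    severity: str
    root_cause: str  # assumed to be resolved upstream, e.g. via lineage


def group_alerts(alerts):
    """Collapse alerts that share a root cause into one incident each."""
    groups = defaultdict(list)
    for alert in alerts:
        groups[alert.root_cause].append(alert)

    incidents = []
    for cause, members in groups.items():
        # The incident inherits the most severe member's level.
        worst = max(members, key=lambda a: SEVERITY_RANK[a.severity])
        incidents.append({
            "root_cause": cause,
            "severity": worst.severity,
            "affected": sorted({a.dataset for a in members}),
            "alert_count": len(members),
        })
    return incidents


def route(incident):
    """Page a human only for high/critical incidents; queue the rest."""
    if SEVERITY_RANK[incident["severity"]] >= SEVERITY_RANK["high"]:
        return "page-on-call"
    return "team-channel"


if __name__ == "__main__":
    alerts = [
        Alert("orders_daily", "row_count", "high", "airflow:load_orders"),
        Alert("revenue_report", "freshness", "medium", "airflow:load_orders"),
        Alert("customer_dim", "null_rate", "low", "dbt:stg_customers"),
    ]
    for incident in group_alerts(alerts):
        print(route(incident), incident)
```

Here three raw alerts become two incidents: the two that trace back to `airflow:load_orders` are paged as one high-severity incident, while the low-severity null-rate alert goes to the team channel instead of waking anyone up.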
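For the legacy-system pitfall, a small custom script is often enough to close the blind spot when a source has no observability API. The sketch below checks freshness through any Python DB-API 2.0 connection, with an in-memory SQLite database standing in for the legacy system. The table name, the `loaded_at` column, the ISO-8601 UTC timestamp format, and the 4-hour SLA are assumptions to adapt to your environment.

```python
import sqlite3
from datetime import datetime, timedelta, timezone


def check_freshness(conn, table, ts_column, max_lag, now=None):
    """Return (is_fresh, lag) based on the newest timestamp in `table`.

    Works with any DB-API 2.0 connection. String timestamps are assumed to be
    ISO-8601 in UTC; adjust the parsing for your database.
    """
    now = now or datetime.now(timezone.utc)
    cur = conn.cursor()
    # Identifiers cannot be bound as query parameters, so `table` and
    # `ts_column` must come from trusted configuration, never user input.
    cur.execute(f"SELECT MAX({ts_column}) FROM {table}")
    (latest,) = cur.fetchone()
    if latest is None:  # empty table: treat as stale
        return False, None
    # Some drivers return datetime objects; SQLite returns the stored string.
    latest_dt = latest if isinstance(latest, datetime) else datetime.fromisoformat(latest)
    if latest_dt.tzinfo is None:
        latest_dt = latest_dt.replace(tzinfo=timezone.utc)
    lag = now - latest_dt
    return lag <= max_lag, lag


if __name__ == "__main__":
    # In-memory SQLite stands in for the legacy database.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE mainframe_feed (id INTEGER, loaded_at TEXT)")
    conn.execute("INSERT INTO mainframe_feed VALUES (1, '2025-01-01T00:00:00')")
    now = datetime(2025, 1, 1, 6, 0, tzinfo=timezone.utc)
    fresh, lag = check_freshness(conn, "mainframe_feed", "loaded_at",
                                 timedelta(hours=4), now=now)
    print(fresh, lag)  # False 6:00:00
```

Scheduled from an orchestrator and wired into the same alert routing as your cloud sources, a check like this brings the legacy feed under the same watch as everything else.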
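The recalibration point can be illustrated with a trailing-window threshold: bounds computed only from recent observations move with the data, so a permanent doubling in volume stops alerting once the window fills with the new level. This is a generic mean ± k·std sketch, not DQLabs’ auto-thresholding algorithm; the 14-point window, `k=3`, and the 1% floor on the standard deviation are illustrative assumptions.

```python
import statistics


def volume_bounds(history, window=14, k=3.0, min_rel_std=0.01):
    """Return (low, high) row-count bounds from the last `window` observations.

    Because only a trailing window is used, the baseline recalibrates after a
    permanent level shift instead of flagging the new normal forever.
    """
    recent = history[-window:]
    if len(recent) < 2:
        raise ValueError("need at least two observations")
    mean = statistics.fmean(recent)
    # Floor the spread so a perfectly flat history doesn't yield a zero-width band.
    std = max(statistics.stdev(recent), min_rel_std * abs(mean))
    return mean - k * std, mean + k * std


def is_anomalous(value, history, **kwargs):
    low, high = volume_bounds(history, **kwargs)
    return not (low <= value <= high)


if __name__ == "__main__":
    before = [1000, 1010, 990, 1005, 995] * 4  # about 1,000 rows/day
    after = before + [2000, 2010, 1990, 2005, 1995, 2000, 2002,
                      1998, 2001, 1999, 2003, 1997, 2000, 2000]  # volume doubled
    print(is_anomalous(2000, before))  # True: flagged against the old baseline
    print(is_anomalous(2000, after))   # False: accepted once the window holds the new level
```

The window length is the key design choice: a short window adapts quickly but can also "learn" a slow-burning problem as normal, which is one reason periodic human review is still worthwhile.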

By following these best practices and being mindful of the pitfalls, you can scale data observability from a small initiative to a robust, organization-wide capability. It will become an invisible backbone that keeps the data ecosystem running smoothly, much like DevOps practices do for application infrastructure. Remember, the goal is not just to have a fancy monitoring system – it’s to build a resilient, trust-driven data culture where issues are caught early, accountability is shared, and data truly becomes a reliable asset for the business.

Accelerating Your Data Observability Journey with DQLabs

As discussed throughout this guide, having the right platform is key to successful data observability. DQLabs distinguishes itself as a multi-layered, AI-driven, semantics-powered platform that simplifies and supercharges the observability journey for technical teams. Here’s how DQLabs can help you implement everything we’ve covered, faster and more effectively:

- All Five Pillars in One Platform: Unlike point solutions that might only address data quality or only pipeline monitoring, DQLabs offers a complete end-to-end observability solution. It natively covers data content quality checks, pipeline and workflow monitoring, infrastructure metrics, usage analytics, and cost observability. This means you don’t have to stitch together multiple tools or dashboards – DQLabs serves as a one-stop “single pane of glass” for all your data health metrics. For example, on a single DQLabs dashboard you can see yesterday’s row count anomaly on a table (data content issue), alongside the fact that an Airflow job ran 20 minutes late (pipeline issue), and that the Snowflake warehouse usage spiked (cost issue). This holistic view dramatically speeds up troubleshooting and ensures nothing falls through the cracks.

- AI-Driven Anomaly Detection and Reduced Noise: DQLabs leverages advanced AI/ML algorithms to learn the normal behavior of your data and pipelines. It automatically flags anomalies that would be nearly impossible to catch with manual rules – such as complex multi-variable outliers or seasonal pattern shifts. Importantly, DQLabs’ AI is designed to minimize false positives, addressing the noise fatigue problem. Users have seen up to a 90% reduction in false alerts thanks to DQLabs’ intelligent pattern recognition. For instance, instead of alerting every time a metric is slightly outside a hard threshold, DQLabs might recognize “this is a minor fluctuation that self-corrects” vs. “this is truly abnormal” based on historical context. The result: your team trusts the alerts that do come through and can act on them confidently.

- Semantics-Powered Context (Less Configuration Required): One of DQLabs’ standout features is its semantics engine. It automatically understands data in context – identifying data types, sensitive information, and relationships. This means the platform can auto-generate quality rules and monitoring logic without you having to configure everything manually. For example, if DQLabs sees a column of email addresses, it can apply an out-of-the-box rule to check format validity and uniqueness of emails. Or if it detects a numeric ID field, it might check for unexpected negative values. This semantic awareness not only saves setup time but also enables richer insights (such as grouping anomalies by business entity). Essentially, DQLabs acts as an autonomous data steward that “knows” what to look for, allowing your team to focus on higher-level concerns.

- Autonomous Operations and Self-Healing Capabilities: DQLabs goes beyond observing and takes strides towards autonomous data operations. It can automatically discover data relationships and lineage, which is crucial for root cause analysis. Additionally, the platform can self-tune monitoring thresholds over time – if your data volume gradually increases, DQLabs adjusts what’s considered normal without you needing to constantly tweak settings. Perhaps most impressively, DQLabs can proactively recommend corrective actions when issues arise. For instance, if a particular column frequently has null spikes, DQLabs might suggest adding a specific data validation or even help generate a cleaning script. In some scenarios, it can trigger automated workflows – like restarting a stuck pipeline or isolating a bad data segment – effectively helping fix issues, not just detect them. This level of autonomy is a force multiplier for lean data teams.

- Seamless Integration with Modern Data Stacks: DQLabs was built with modern data ecosystems in mind. It provides plug-and-play connectors and APIs for popular tools and platforms. Whether you’re using AWS, Azure, or GCP; Snowflake or SQL Server; Spark or dbt; Airflow or Kubernetes – DQLabs likely has an integration for it. The platform can ingest telemetry from cloud services, on-prem databases, SaaS applications, and more, stitching together a unified observability fabric. This is crucial for hybrid environments; DQLabs spares you from writing custom scripts for each system. The upshot: you get quicker time-to-value. Many DQLabs users are able to deploy and get meaningful insights in days, not months, because the heavy lifting of integration is largely handled.

- Intuitive UI with Powerful Insights: While under the hood DQLabs is performing complex analysis, it presents findings in a clean, user-friendly interface. The UI is no-code for those who want simplicity (you can point-and-click to set up monitors or view lineage graphs), yet it offers depth for power users (such as the ability to drill into a timeline of data changes, or to write custom query checks). It also comes with out-of-the-box visualizations and reports that cater to different audiences – e.g., an executive dashboard highlighting overall data reliability score, a data engineering dashboard for pipeline runs, and a cost dashboard for FinOps. This flexibility means the platform can be used by engineers, analysts, and managers alike, bridging communication gaps with shared factual visuals.

- Proven ROI and Industry Recognition: DQLabs has been recognized by industry analysts (Gartner, Everest, QKS, and ISG) as a leader in the observability space. Its users have reported tangible benefits such as 3× faster incident resolution, 70% reduction in data issue workload, and significant annual savings from preventing data errors. Knowing that DQLabs is a vetted solution gives teams confidence – you’re not just adopting a tool, you’re adopting best practices distilled into that tool. The platform is also continually updated to keep up with new trends (like data mesh or lakehouse architectures), so it’s a future-proof choice.
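One simple way to separate a fluctuation that self-corrects from a truly abnormal shift is to require a metric to stay out of bounds for several consecutive observations before alerting. The sketch below shows that persistence (debounce) rule in Python. It is a generic technique offered for illustration, not the model DQLabs uses, and the `min_consecutive=3` default and the latency figures are assumptions.

```python
def persistent_breaches(values, low, high, min_consecutive=3):
    """Return the indices at which an out-of-bounds run reaches `min_consecutive` points.

    Single-point blips that return to normal are ignored; each sustained
    breach produces exactly one alert.
    """
    alerts = []
    run = 0
    for i, value in enumerate(values):
        if low <= value <= high:
            run = 0  # back within bounds: the fluctuation self-corrected
        else:
            run += 1
            if run == min_consecutive:
                alerts.append(i)
    return alerts


if __name__ == "__main__":
    hourly_latency = [100, 130, 100, 100, 130, 135, 140, 145, 100]
    print(persistent_breaches(hourly_latency, low=90, high=110))  # [6]
```

The isolated spike at index 1 is ignored, while the sustained breach starting at index 4 raises a single alert at index 6. The trade-off is detection delay: a larger `min_consecutive` means fewer false positives but slower alerts.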
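The email and ID examples in the semantics bullet can be approximated with a heuristic: classify a column from its name and sample values, then map the inferred type to generated checks. This is a deliberately crude stand-in for a semantics engine, not DQLabs’ logic; the regex, the `_id` naming convention, the 90% match threshold, and the rule names are all assumptions.

```python
import re

# Deliberately loose pattern: something@something.tld with no whitespace.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def infer_semantic_type(name, sample, min_match=0.9):
    """Guess a column's semantic type from its name and sample values."""
    non_null = [v for v in sample if v is not None]
    if not non_null:
        return "unknown"
    email_hits = sum(1 for v in non_null if isinstance(v, str) and EMAIL_RE.match(v))
    # Tolerate a few bad values so the format rule still gets generated.
    if email_hits / len(non_null) >= min_match:
        return "email"
    if name.lower().endswith("_id") and all(isinstance(v, int) for v in non_null):
        return "numeric_id"
    return "unknown"


def _valid_emails(col):
    return all(isinstance(v, str) and EMAIL_RE.match(v) for v in col if v is not None)


def _unique(col):
    vals = [v for v in col if v is not None]
    return len(set(vals)) == len(vals)


def _non_negative(col):
    return all(v >= 0 for v in col if v is not None)


# Rules generated for each inferred type (hypothetical names).
RULES_BY_TYPE = {
    "email": {"valid_format": _valid_emails, "unique": _unique},
    "numeric_id": {"non_negative": _non_negative},
}


def run_generated_rules(name, values, sample_size=100):
    """Infer the type from a sample, then run every rule generated for that type."""
    semantic_type = infer_semantic_type(name, values[:sample_size])
    rules = RULES_BY_TYPE.get(semantic_type, {})
    return {rule: check(values) for rule, check in rules.items()}


if __name__ == "__main__":
    emails = [f"user{i}@example.com" for i in range(9)] + ["not-an-email"]
    print(run_generated_rules("contact_email", emails))   # {'valid_format': False, 'unique': True}
    print(run_generated_rules("customer_id", [1, 2, -5]))  # {'non_negative': False}
```

Inferring the type from a tolerant majority match is what lets the generated format rule actually catch the one bad value, rather than the bad value preventing the column from being recognized as emails at all.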
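Discovered lineage matters for root cause analysis because it lets you walk upstream from a broken asset to the first unhealthy node. Below is a minimal breadth-first sketch, assuming lineage is available as a hypothetical dictionary mapping each node to its direct upstream nodes and that you already know which nodes are failing; it is not how DQLabs stores or traverses lineage.

```python
from collections import deque


def upstream_root_causes(lineage, failing, start):
    """Return failing upstream nodes whose own direct parents are all healthy.

    lineage: dict mapping each node to a list of its direct upstream nodes.
    failing: set of nodes currently flagged as unhealthy.
    """
    seen = {start}
    queue = deque([start])
    roots = []
    while queue:
        node = queue.popleft()
        parents = lineage.get(node, [])
        # A failing node with no failing parent is where the problem enters the graph.
        if node in failing and not any(p in failing for p in parents):
            roots.append(node)
        for parent in parents:
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return roots


if __name__ == "__main__":
    lineage = {
        "revenue_dashboard": ["fct_orders"],
        "fct_orders": ["stg_orders", "stg_customers"],
        "stg_orders": ["raw_orders"],
        "stg_customers": ["raw_customers"],
    }
    failing = {"revenue_dashboard", "fct_orders", "stg_orders", "raw_orders"}
    print(upstream_root_causes(lineage, failing, "revenue_dashboard"))  # ['raw_orders']
```

Four assets are unhealthy, but only `raw_orders` is reported, because every other failure sits downstream of it. If two unrelated failures exist in the same upstream graph, both are returned as separate candidates.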

In essence, DQLabs accelerates your observability maturity. It lets you implement the foundational monitors quickly and then guides you up the maturity triangle to advanced capabilities like anomaly detection and business-level controls, all within one coherent environment. For a data engineer or architect evaluating solutions, the value proposition is clear: with DQLabs you invest in a platform that grows with your needs – from initial data quality checks to full-fledged autonomous data operations. And because it emphasizes both strategy (holistic governance) and tactics (technical depth in monitoring), it helps you achieve that balance of theory and actionable insight that this guide has emphasized.

By leveraging DQLabs, technical teams can spend less time wrangling with disparate monitoring scripts or reacting to surprises, and more time delivering high-quality data products. It’s like having a guardian for your data ecosystem – one that watches every layer tirelessly and even helps fix issues in the background – so your team can innovate and trust the data every step of the way.

Conclusion

Data observability has quickly moved from a buzzword to a foundational component of modern data engineering. As data ecosystems continue to grow in scale and complexity, the ability to know exactly what’s happening with your data at any given moment is no longer optional – it’s mission-critical. A robust observability practice empowers organizations to deliver data with confidence, powering everything from daily business intelligence to cutting-edge AI, all while minimizing downtime, inefficiencies, and risks.

In this blog, we’ve explored what data observability is and why it matters: it’s the evolution of data monitoring into a comprehensive, proactive, and intelligent system for managing data health. We differentiated it from traditional monitoring and data quality efforts, highlighting that observability is about holistic visibility and understanding, not just isolated metrics. We broke down the five pillars of observability – data content, pipelines, infrastructure, usage, and cost – which together ensure that every aspect of your data’s journey is under watch.

We also introduced the DQLabs Data Observability maturity model, illustrating how organizations can progress from basic data checks to advanced, business-aligned observability. No matter where you are on that journey, the goal is clear: to align data operations with business outcomes and enable DataOps excellence. The step-by-step implementation guide provided a tactical roadmap for teams to integrate observability into their stack (yes, even with Airflow, dbt, Snowflake, Databricks and more), and to do so in a sustainable, scalable way. We looked at specific high-impact use cases – ensuring AI/ML readiness (so your models don’t falter due to unseen data issues) and FinOps observability (so your data platform runs efficiently and cost-effectively). Along the way, we covered best practices and pitfalls, so you can benefit from hard-earned lessons of others and avoid common mistakes as you scale.

Crucially, we underscored that technology like DQLabs can be a game-changer in this space. The right platform operationalizes all these concepts – multi-layered monitoring, AI-driven anomaly detection, semantic context, and automation – into day-to-day reality. With DQLabs, data teams gain an autonomous partner that not only flags issues but helps resolve them, bringing true agility to data operations.

As you move forward, remember that data observability is both a strategy and a practice. Strategically, it’s about instilling a culture of data reliability and continuous improvement. Practically, it’s about deploying tools and processes that watch over your data 24/7. Success will be measured in more reliable analytics, faster incident response, happier data consumers, and ultimately, better business decisions made on trusted data.

In closing, the question “What is Data Observability?” can be answered simply: it’s how we keep our data honest, healthy, and ready for whatever comes next. By adopting data observability, you’re not just solving today’s data issues; you’re building a robust framework that will support innovation and reliability for years to come. 2025 and beyond will surely bring new data challenges and opportunities, and strong observability means you’ll be prepared to tackle them head-on, with clarity and confidence.

Frequently Asked Questions

What is the main goal of data observability?

The primary goal of data observability is to ensure the reliability and health of your data pipelines and datasets. It aims to make data issues (whether in quality, timeliness, or system performance) visible and diagnosable in real time so that teams can prevent bad data from ever reaching end users or downstream systems. In essence, the goal is to move from reactive data firefighting to proactive monitoring and maintenance, so that data remains trustworthy and readily available for decision-making and analysis. A successful data observability practice means you’re the first to know about any data anomalies or pipeline failures – not your stakeholders – and you can address them before they cause damage.

How is data observability different from data quality management?

Data quality management typically focuses on defining rules and checks to ensure data meets certain standards (accuracy, completeness, etc.). It’s often a manual or rule-based process applied to the data itself. Data observability encompasses data quality but goes much further. Observability is about monitoring the entire data ecosystem – not just the data content, but also the processes that move the data, the infrastructure supporting it, how people are using it, and how much it costs to run. While data quality tools might tell you “this column has 5% nulls, which is above the allowed threshold,” data observability will tell you that plus “the pipeline that generates this column failed two days ago on the upstream system, which is the root cause,” and maybe even “this happened after a schema change in the source.” In short, observability provides context and holistic oversight, whereas data quality management provides important but narrow checks. They are complementary – data observability platforms often automate and enhance data quality checks as part of their features.
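The difference can be sketched in a few lines: a plain quality check returns the null rate, while an observability-style check also attaches context from pipeline run metadata. The run-record shape, the `load_orders` job, the `ship_date` column, and the 5% threshold below are hypothetical; a real platform would pull run history from the orchestrator rather than from a hand-built list.

```python
from datetime import datetime


def null_rate(values):
    return sum(v is None for v in values) / len(values) if values else 0.0


def check_with_context(column, values, threshold, upstream_runs):
    """A null-rate check enriched with pipeline run context.

    upstream_runs: hypothetical run records such as
        {"job": "load_orders", "status": "failed", "finished_at": datetime(...)}
    """
    rate = null_rate(values)
    result = {"column": column, "null_rate": rate, "passed": rate <= threshold}
    if not result["passed"]:
        failures = [r for r in upstream_runs if r["status"] == "failed"]
        if failures:
            # Simplification: point at the most recent failed upstream run.
            latest = max(failures, key=lambda r: r["finished_at"])
            result["likely_cause"] = (
                f"{latest['job']} failed at {latest['finished_at']:%Y-%m-%d %H:%M}"
            )
    return result


if __name__ == "__main__":
    values = [None, None] + list(range(18))  # 2 nulls in 20 rows = 10%
    runs = [
        {"job": "load_orders", "status": "success", "finished_at": datetime(2025, 1, 1, 2, 0)},
        {"job": "load_orders", "status": "failed", "finished_at": datetime(2025, 1, 2, 2, 0)},
    ]
    print(check_with_context("ship_date", values, threshold=0.05, upstream_runs=runs))
```

The quality check alone would stop at "10% nulls, above the 5% threshold"; the added `likely_cause` field is the kind of context that turns a failed check into a starting point for diagnosis.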

Can small teams implement data observability effectively?