Why DQLabs?

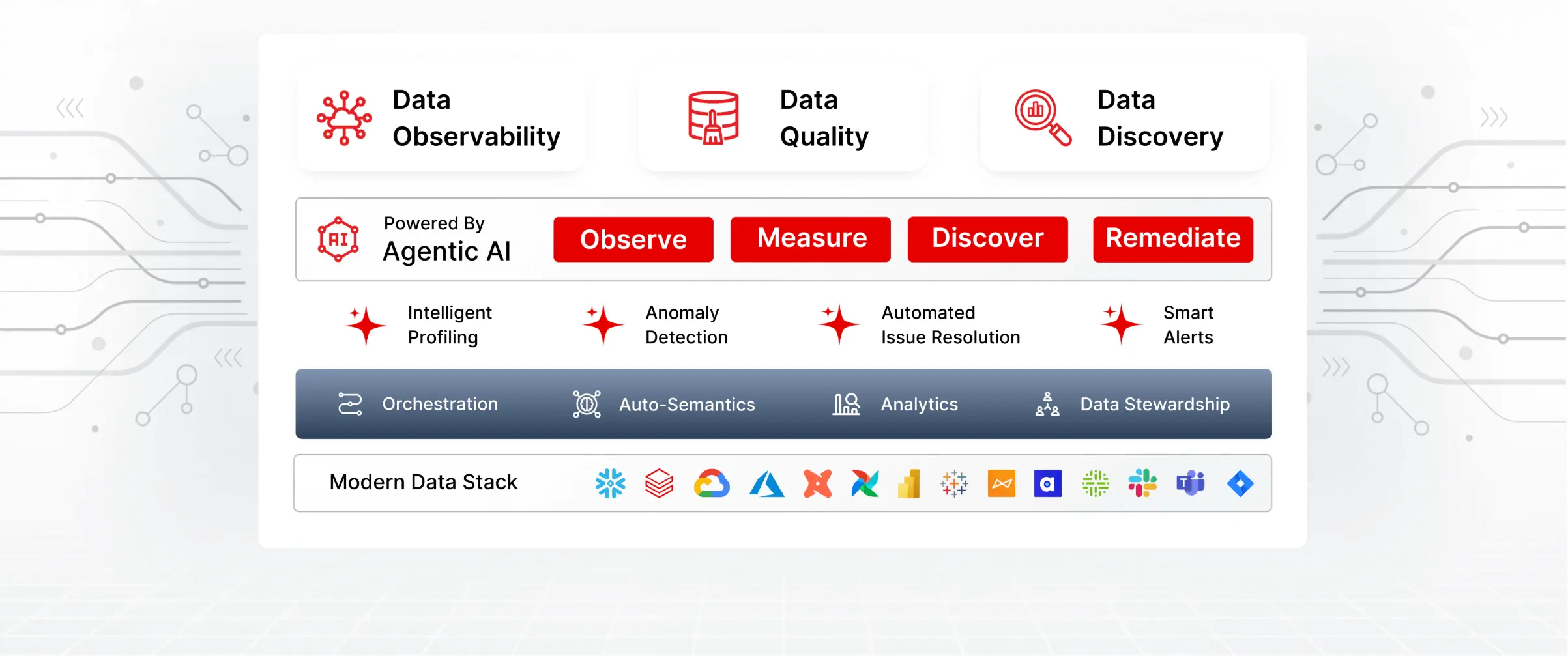

One Unified Platform. No More Data Issues.

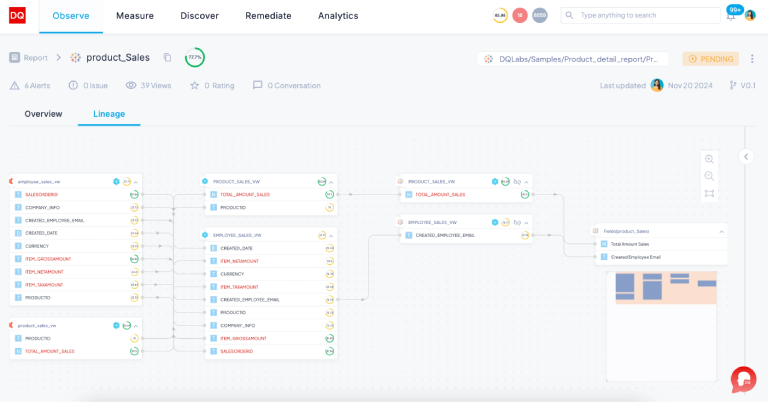

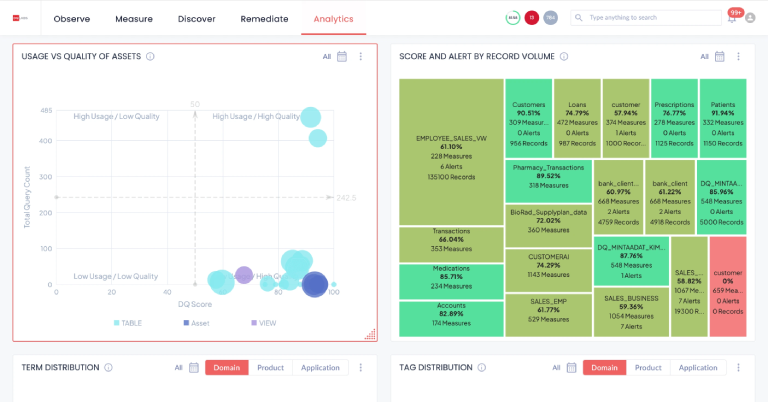

Data Observability

Ensure continuous data trust and actionable insights with instant issue detection, root cause analysis, and proactive alerts.

- Real-time monitoring of data, pipelines, usage, and cost.

- Automated anomaly detection with reduced false positives.

- Unified end-to-end pipeline observability.

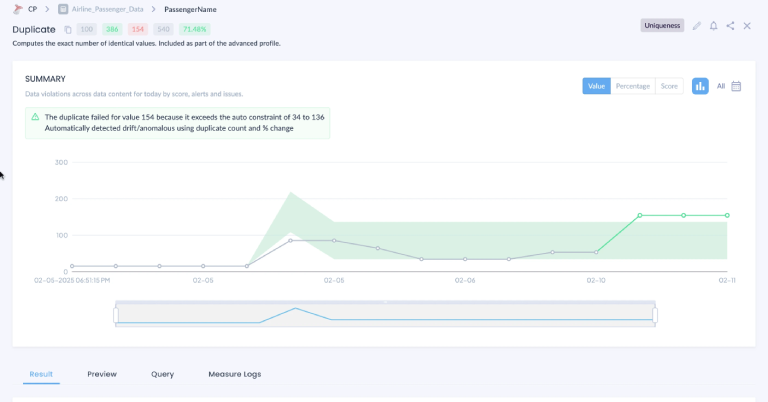

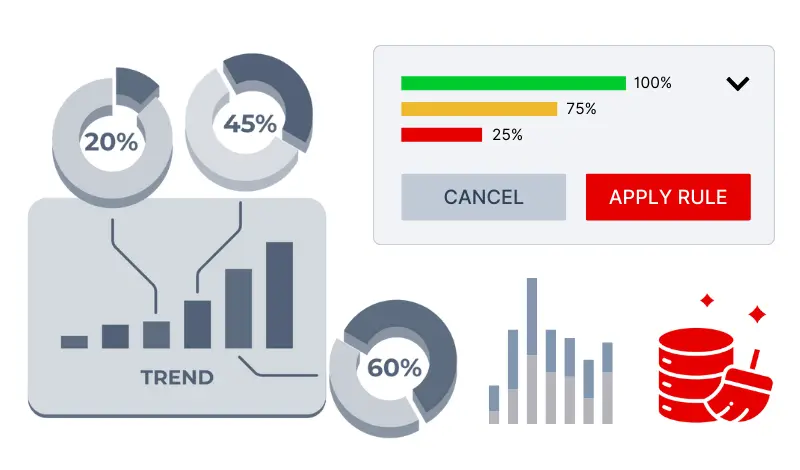

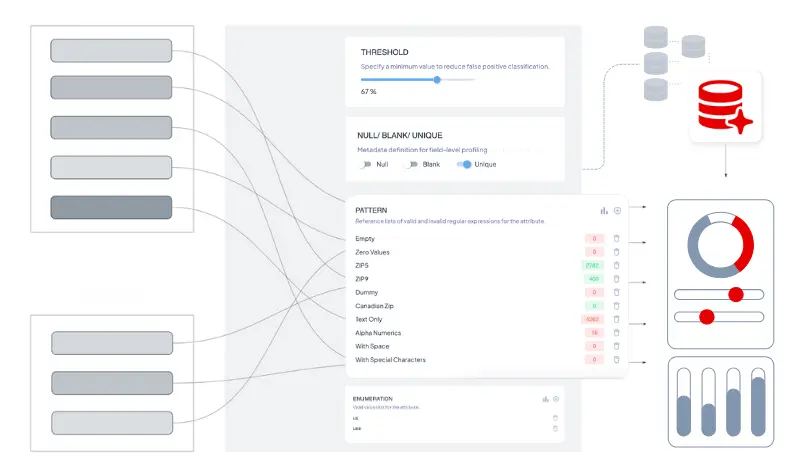

Data Quality

Enable trusted data for AI initiatives with AI-driven rule automation, out-of-the-box (OOB) checks, and complete lineage assurance.

- Out-of-the-box and custom no-code rules for instant setup.

- AI-driven rule generation and attribute-specific recommendations.

- End-to-end data lineage and impact analysis for trust and compliance.

Auto Semantics

Operationalize business and domain context through automated discovery, classification, and tagging, powered by DQLabs’ semantic layer.

- Auto-discover and classify business context without manual mapping.

- Instantly tag data assets with business terms and domains.

- Rapid rule inheritance and deployment accelerate onboarding.

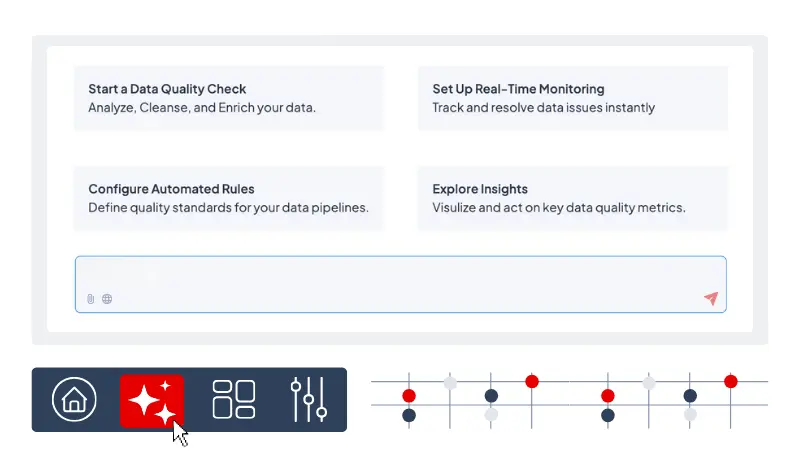

Agentic AI

Transform data management with autonomous AI agents for quality, observability, and efficiency at enterprise scale.

- Achieve faster mean time to detect (MTTD) with real-time anomaly and drift detection.

- Reduce dependence on subject matter experts (SMEs) by automating repetitive tasks.

- AI-driven recommendations deliver smart rules and impactful quality insights.

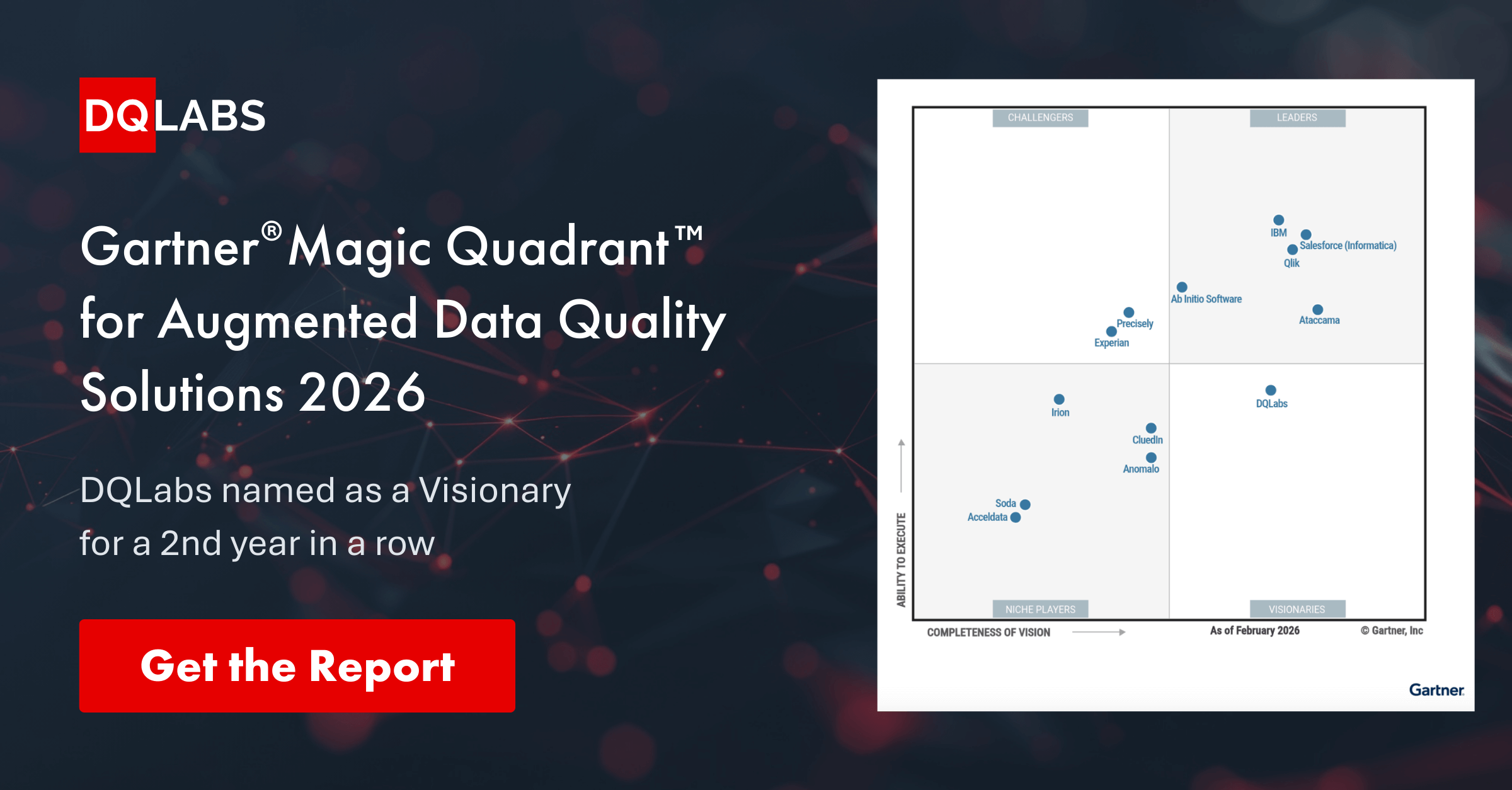

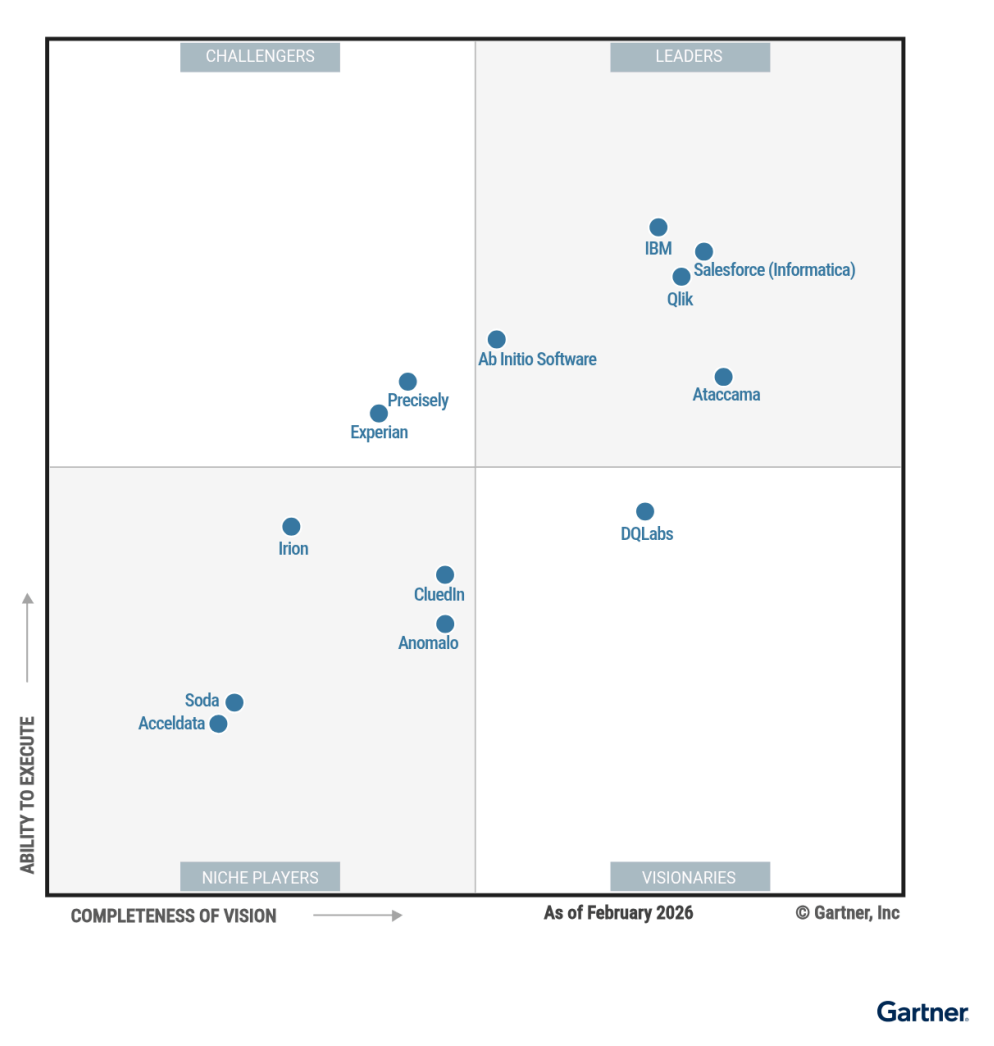

DQLabs Named a Visionary in the 2026 Gartner® Magic Quadrant™ for Augmented Data Quality Solutions

Recognized as a Visionary for the second consecutive year.

GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally and is used herein with permission. All rights reserved.

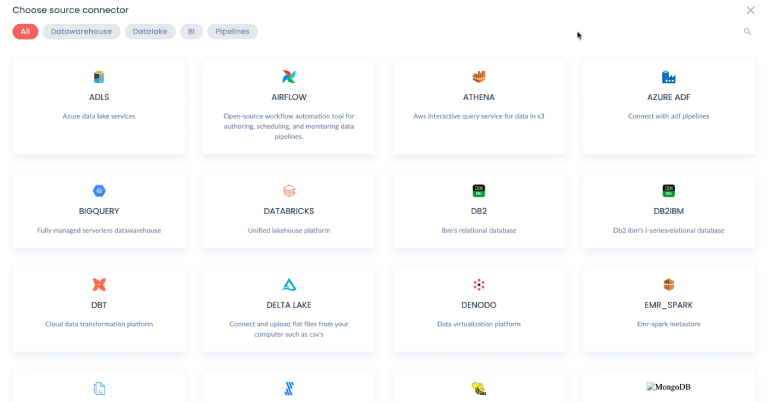

DQLabs Platform

Unified Data Observability & Data Quality

Powered by Semantics & Agentic AI