Summarize and analyze this article with

Operationalizing data observability means making continuous data monitoring, quality checks, and diagnostics part of your day-to-day data operations from day one. This blog explains how DQLabs – an AI-powered data quality and observability platform – enables organizations to get value from data observability from Day One. We’ll cover common pain points (like fragmented tools and manual troubleshooting), the key pillars of DQLabs’ observability framework, and best practices for integrating DQLabs with modern data stacks (Snowflake, Databricks, dbt, Airflow, and more). By the end, you’ll see how DQLabs delivers immediate, plug-and-play observability that improves data reliability, reduces “data downtime,” and boosts team collaboration from the beginning.

What Is Data Observability and Why Operationalize It?

Data observability is the ability to fully understand and monitor the health of your data across all stages – from ingestion and pipelines to storage, BI, and beyond. In essence, it means always having “eyes on your data”: knowing when data is missing or wrong, what broke and why, and how to fix issues quickly.

Operationalizing data observability means baking these monitoring and diagnostic capabilities into your daily workflows and infrastructure from the get-go. This is critical because modern data ecosystems are highly complex (hybrid cloud environments, streaming data, microservices, etc.). Without automated observability in place, errors often go undetected until they cause damage – for example, a silent schema change or a failed ETL job might only be noticed after it produces incorrect analytics. By implementing observability as an always-on practice, data teams can catch anomalies in real time (e.g. a sudden drop in record counts or a spike in null values) and resolve issues before they impact business decisions. The result is significantly reduced data downtime (periods when data is unreliable or unavailable) and greater trust in your organization’s data. In short, operationalized observability is the difference between constantly firefighting data issues versus proactively preventing them.

Pain Points in Achieving Data Observability (and How DQLabs Solves Them)

Despite its importance, many organizations struggle with data observability due to several common pain points. Let’s highlight a few challenges and how a unified platform like DQLabs addresses them:

- Fragmented Tooling and Siloed Monitoring: Data teams often use a patchwork of tools (one for data quality, another for pipeline logs, others for infrastructure metrics). This fragmented approach leaves blind spots – it’s hard to get a unified view of data health when insights are scattered. DQLabs solves this by providing an end-to-end observability platform that monitors data, pipelines, and usage all in one place. By unifying data quality checks, pipeline monitoring, and metadata tracking, DQLabs eliminates silos and gives you complete visibility across your data stack.

- Manual Root Cause Analysis: Without proper observability, troubleshooting data issues can be painfully manual. Engineers might spend hours combing through logs or tracing data lineage by hand to find why a report broke. DQLabs accelerates root cause analysis with automated data lineage and AI-driven insights. When an anomaly is detected, DQLabs can trace its lineage upstream and downstream, pinpointing the source (e.g. a schema change or late data load) and showing which downstream tables or dashboards are impacted.

- Inconsistent Data Quality Checks: Many companies lack consistent data quality enforcement – some critical datasets might have tests, others don’t, and standards vary by team. This inconsistency leads to “unknown unknowns” in your data. DQLabs addresses this with continuous data quality monitoring across all your data assets. It provides out-of-the-box data quality rules and machine learning anomaly detection that run automatically, ensuring every dataset is being checked for accuracy, freshness, completeness, and more.

- Alert Fatigue and Noise: On the flip side, teams that do implement monitoring often get bombarded with alerts – many of them low priority or false positives – causing alert fatigue. DQLabs uses intelligent alerting to prioritize and contextualize notifications. Its AI models learn normal patterns to reduce false alarms and can group related issues into a single alert. This ensures you stay focused on truly critical data incidents without being overwhelmed by trivial alerts.

These pain points underscore the need for a comprehensive solution. Next, let’s look at how DQLabs is built to tackle data observability through a set of key pillars.

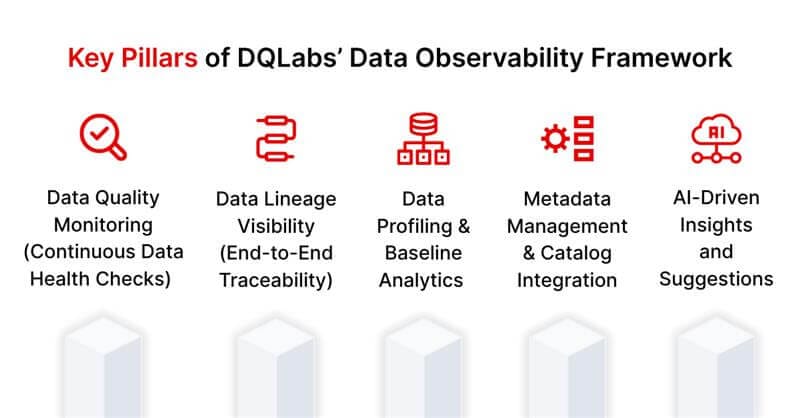

Key Pillars of DQLabs’ Data Observability Framework

DQLabs provides a holistic observability framework covering all aspects of data health and pipeline monitoring. Here are the five key pillars of DQLabs’ approach and how each helps you maintain trustworthy data:

1. Data Quality Monitoring (Continuous Data Health Checks)

At the heart of DQLabs is robust data quality monitoring across multiple dimensions. The platform continuously tracks metrics like freshness (is your data up-to-date?), volume/completeness (are record counts within expected ranges?), validity (do values meet business rules or formats?), accuracy/consistency, and more.

DQLabs comes with 50+ out-of-the-box data quality rules and AI/ML-powered anomaly detection. This means from day one, you can automatically detect anomalies such as a daily load being 90% of the usual (volume drop), a sudden spike in null or outlier values (distribution anomaly), or data that hasn’t arrived on schedule (freshness issue). By continuously monitoring data in real-time (or batch, depending on your needs), DQLabs ensures that any data health issues are caught immediately.

2. Data Lineage Visibility (End-to-End Traceability)

Understanding how data flows—from source through transformations to final outputs—is crucial. DQLabs automatically maps and visualizes data lineage across pipelines, showing upstream and downstream dependencies for datasets, reports, and machine learning models. This helps pinpoint root causes quickly—if a dashboard shows wrong numbers, lineage reveals if an upstream table failed or changed schema. This transparency greatly speeds up troubleshooting and also helps you plan changes (you can instantly see what might be impacted if you modify a data source or ETL job). With full lineage at your fingertips, manual detective work is eliminated – the platform tells the story of your data’s journey and dependencies.

3. Data Profiling and Baseline Analytics

Before you can monitor data effectively, it helps to know what “normal” looks like. DQLabs includes automated data profiling capabilities that kick in as soon as you connect your data sources. It scans datasets to compute statistics, distribution details, schema information, and quality scores. This profiling builds a baseline for each dataset (e.g., typical range of values, average daily row count, common patterns in the data). The AI then uses these baselines to detect drift or anomalies over time. For instance, if a column usually has 2% nulls but suddenly has 20%, DQLabs will flag it. Profiling also surfaces hidden data issues early (like out-of-range values or inconsistent coding of categories) and helps you understand your data assets better from day one. In short, this pillar provides deep insight into your data’s shape and behavior.

4. Metadata Management and Catalog Integration

Observability is strengthened by robust metadata management. DQLabs acts as a unified metadata layer and data catalog, automatically collecting metadata such as schemas, data types, owners, and update frequency. Semantic discovery tags datasets with business terms or sensitive classifications like PII. This rich context is accessible within DQLabs. It also integrates with popular data catalog and governance tools such as Alation, Collibra, and your existing metadata repositories. Regardless of where data resides—cloud, on-prem, or across platforms—DQLabs offers a single pane of glass to monitor and understand it all.

5. AI-Driven Insights and Recommendations

One standout feature of DQLabs is its use of AI/ML to not only detect issues but also recommend actions and improvements. The platform’s “Agentic AI” engine can auto-generate data quality rules based on patterns it sees (for example, suggesting a new rule when it learns what “normal” behavior is for a certain column). DQLabs provides AI-driven recommendations on how to address anomalies – such as highlighting which upstream source likely caused an issue, or suggesting data enrichment and transformation steps to improve quality. In some cases, DQLabs can even auto-remediate issues: for instance, quarantining or rejecting bad data (acting as a “circuit breaker”), or triggering a pipeline retry when a fix is known. Overall, AI-driven insights don’t just churn out raw alerts, they help you decide what to do next.

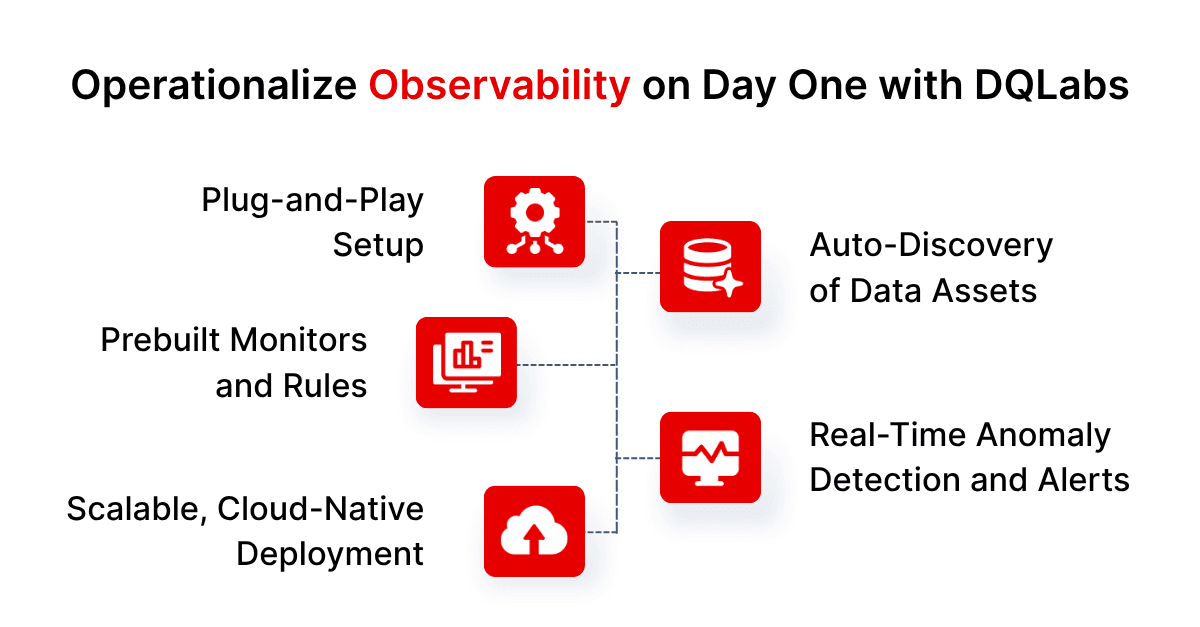

Day-One Operationalization: Immediate Value with Plug-and-Play Observability

One of the greatest benefits of DQLabs is its focus on “Day One” value – you can start gaining insights and preventing issues from the moment you deploy it. Here’s how DQLabs makes data observability operational on day one (with minimal effort):

- Plug-and-Play Setup: DQLabs is engineered for fast, seamless integration into your existing data stack. With smart native connectors for dozens of sources and tools—be it cloud data warehouses like Snowflake, lakehouses like Databricks, on-prem databases, or SaaS platforms—you can connect DQLabs within minutes. There’s no need for complex engineering or custom build-outs; just point DQLabs to your sources and it automatically starts ingesting metadata and metrics. This lets you achieve comprehensive observability across critical systems right from day one.

- Auto-Discovery of Data Assets: Upon connecting, DQLabs automatically discovers your data sets, schemas, and pipeline jobs. The platform’s AI scans through your environment to inventory tables, dashboards, ETL jobs, etc., and begins profiling them (as described earlier). This automatic discovery saves you time configuring what to monitor. For example, DQLabs might pull in all tables in a Snowflake database or all DAGs from Apache Airflow and list them in its interface. You can then easily select which ones to apply monitors to (or let the system apply default monitors to all). There’s no need to manually enumerate every asset – DQLabs ensures nothing important is overlooked in the observability scope from the start.

- Prebuilt Monitors and Rules: DQLabs comes with a rich library of prebuilt data quality checks, anomaly detection algorithms, and dashboard templates. Out-of-the-box, it will start checking data freshness, volumes, distributions, and other quality metrics without you writing any code. For example, a daily sales table will automatically be monitored for schedule updates and data consistency, leveraging historical learning. Prebuilt dashboards and scorecards display quality scores and trends, allowing you to spot issues such as stale or incomplete datasets immediately after deployment. This means observability efforts are jump-started with instant, actionable insights.

- Scalable, Cloud-Native Deployment: DQLabs is designed to scale effortlessly with your data landscape. As a cloud-native platform, it supports SaaS deployments or can operate within your own cloud environment, expanding to monitor thousands of tables or terabytes of data in real time. Distributed processing enables performance and reliability, even for organizations with hundreds or thousands of pipelines. Heavy profiling tasks can be offloaded to existing compute systems, ensuring efficient operations without added system burden.

- Real-Time Anomaly Detection and Alerts: From the moment DQLabs is connected, it begins actively watching for anomalies in your data and pipelines. Thanks to its machine learning models, it doesn’t rely solely on static thresholds – it can flag unusual events even in the first day of operation by comparing against initial profiles or known patterns. For example, if a nightly ETL job usually takes 30 minutes but tonight runs for 2 hours, DQLabs can catch that performance anomaly and alert you immediately. Alerts are delivered in real time via your preferred channels (email, Slack, Microsoft Teams, PagerDuty, etc.), which you can configure right away. You can also set up custom alert rules easily through the UI or API for any specific conditions you care about. The key point is that DQLabs provides actionable notifications from day one, so your team is instantly in the loop on data issues rather than finding out days later when a report is wrong.

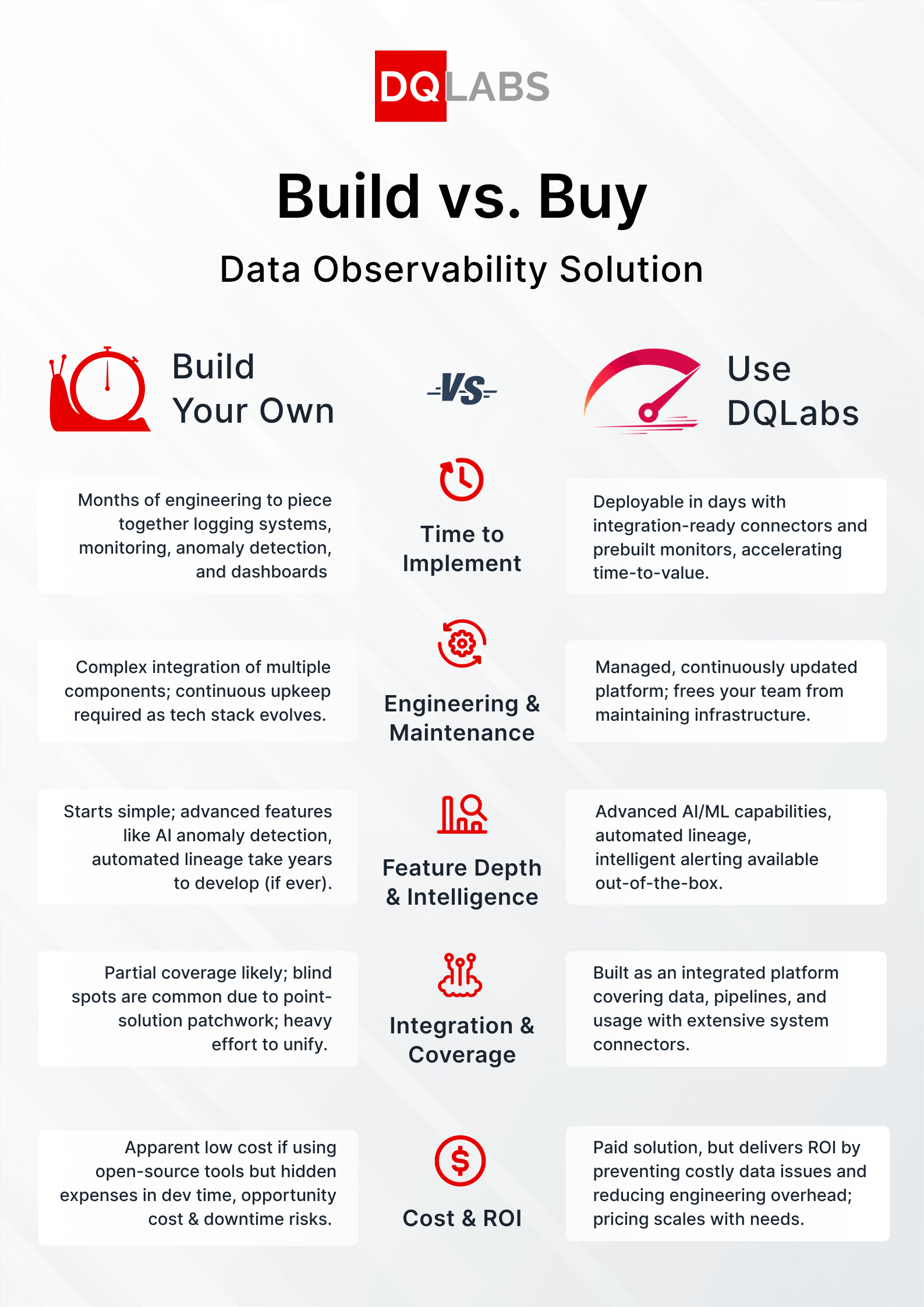

Build vs. Buy: Custom Observability Solution vs. DQLabs

Hybrid, Multi-Cloud, and Industry-Wide Integration (No Vertical Limits)

Modern enterprises often operate in complex environments – multiple cloud platforms, on-premise databases, and strict industry regulations. DQLabs is built to be environment-agnostic and industry-agnostic, ensuring you can deploy data observability anywhere it’s needed:

- Multi-Cloud and Hybrid Support: Whether your data infrastructure is all in one cloud or spread across AWS, Azure, GCP, and on-prem systems, DQLabs can handle it. The platform can be deployed in a cloud-agnostic manner (or accessed as a SaaS that connects securely to your data in any location). It has connectors for cloud-native warehouses like Snowflake or BigQuery, as well as traditional databases (Oracle, SQL Server) often found on-prem. DQLabs’ metadata-driven approach stitches together monitoring across these environments. Practical example: A hybrid setup with on-prem ERP feeding a cloud data lake can be fully monitored end-to-end on a single DQLabs dashboard (for timeliness, quality rules, and schema consistency), eliminating blind spots between systems. The result was no more blind spots between on-prem and cloud.

- Flexible Deployment Options: DQLabs can adapt to your IT and security requirements. You can use it as a fully managed cloud service, or deploy it within your own VPC (Virtual Private Cloud) for compliance. It supports containerized deployments (Docker/Kubernetes), which is useful in regulated industries that require running software on-prem or in a private cloud. This flexibility ensures that even sectors like finance or government, which have strict data control policies, can leverage DQLabs without compromising on security or compliance.

- Not Limited by Industry or Data Type: Data observability is a universal need – whether you’re in finance, healthcare, retail, manufacturing, or tech – and DQLabs is built accordingly. The platform’s semantic layer enables customization with industry-specific rules and terminology—such as patient data consistency for healthcare or compliance usage tracking in finance. Tailorable building blocks, including custom rules, tags, and domain-specific AI models, allow monitoring of diverse datasets—from e-commerce clickstreams to laboratory records—making it effective across verticals.Many of DQLabs’ existing use cases span multiple industries – the platform’s adaptability means it can enforce your unique business rules and quality criteria, whatever they may be.

- Integration with Industry Tools and Data Formats: Different sectors often have specialized data stores or formats (for instance, HL7/FHIR data in healthcare, or IoT telemetry in manufacturing). DQLabs’ architecture is extensible to handle various data types – including semi-structured and unstructured data. Its AI can profile JSON, logs, documents, etc., not just rows in a table. Through an API, it can integrate with bespoke systems you might have. Bottom line: no matter what your data landscape looks like, DQLabs inserts a layer of observability over it, without forcing you to reshape your ecosystem.

This flexibility across environments and industries means investing in DQLabs is future-proof. As your company grows or adopts new tools, you can bring them under observability coverage easily. You won’t outgrow the platform or be constrained by a particular tech stack.

Delivering Trust, Speed, and Collaboration (The Business Impact)

Implementing DQLabs for day-one data observability isn’t just a technical improvement – it drives significant business benefits:

- Reduced Data Downtime to Near-Zero: DQLabs’ early issue detection prevents bad data from moving downstream, drastically reducing “data downtime.” This means analysts and decision-makers rarely face pauses from missing or incorrect data. With DQLabs in place, costly data incidents become rare, safeguarding business-critical insights and saving potentially millions.

- Faster Time-to-Insight and Value: By operationalizing observability from the start, DQLabs cuts reactive troubleshooting time dramatically. Engineers can resolve pipeline issues in hours instead of days, enabling faster project delivery and accelerated innovation. Real-time monitoring by DQLabs ensures new data products roll out confidently and with high quality, boosting overall business agility.

- Improved Data Trust and Decision-Making: DQLabs offers measurable data quality via scores, dashboards, and alerts, creating transparency that builds stakeholder trust. Knowing data health is continuously monitored, executives and business users rely more confidently on data, fostering a stronger data-driven culture.

- Enhanced Collaboration and Accountability: DQLabs acts as a shared observability hub where engineers, analysts, and business owners collaborate with full visibility. This breaks silos, promotes shared responsibility for data quality, and speeds issue resolution, strengthening overall data governance.

- Reliability as a Competitive Advantage: By ensuring reliable, timely data pipelines through DQLabs, organizations enhance customer satisfaction and operational efficiency. This trusted foundation empowers teams to confidently experiment with AI/ML and analytics, turning data reliability into a key competitive differentiator.

Conclusion: Start Your Data Observability Journey Now

Data observability is no longer a “nice to have” – it’s a critical pillar of a modern data-driven enterprise. DQLabs enables you to operationalize this observability from day one, so you don’t have to wait months to see benefits. By addressing key pain points and providing a comprehensive framework (quality monitoring, lineage, profiling, metadata, AI insights), DQLabs empowers data teams to deliver reliable, accurate data on a continuous basis. The result is proactive data operations that keep your business a step ahead of problems, rather than a step behind.

Embarking on a data observability initiative can feel daunting, but with DQLabs it doesn’t have to be. From day one, you’ll have an ally in ensuring your data is always reliable, so you can focus on using data – not worrying about it. Book a demo today and take the first step toward eliminating data blind spots and achieving trust in every decision.

ChatGPT

ChatGPT Perplexity

Perplexity Grok

Grok Google AI

Google AI