
In today’s data-driven world, data observability has emerged as a vital practice for organizations to maintain trust in their data. Data observability refers to continuously monitoring and managing data to ensure its quality, availability, and reliability across all processes, systems, and pipelines. Unlike traditional monitoring (which only signals that a problem occurred), data observability provides a holistic, real-time view of data health, helping teams identify, troubleshoot, and even resolve issues nearly as they happen. This evolution is necessary because static, event-based monitoring is no longer sufficient for modern, complex data architectures. With data environments growing in volume and complexity, organizations need end-to-end visibility to catch anomalies and errors before they wreak havoc downstream.

Achieving strong data observability is becoming urgent for several reasons. Most businesses know the pain of “bad data”: 80% of executives do not fully trust their data, and poor data can lead to major consequences. For example, in 2022 one company discovered it had been ingesting bad data from a partner, an error that led to a 30% stock plunge and $110 million in lost revenue. Problems like this often go undetected because, unlike an application crash that’s immediately obvious, bad data can quietly spread through reports and models unnoticed. Data observability is a strong defense against bad data: it continuously monitors data pipelines for completeness, accuracy, and timeliness so that teams can prevent data downtime, meet SLAs, and maintain the business’s trust in data. In essence, data observability ensures that data remains an asset rather than a liability, which is crucial as organizations rely on data for critical insights, AI/ML models, and day-to-day decisions.

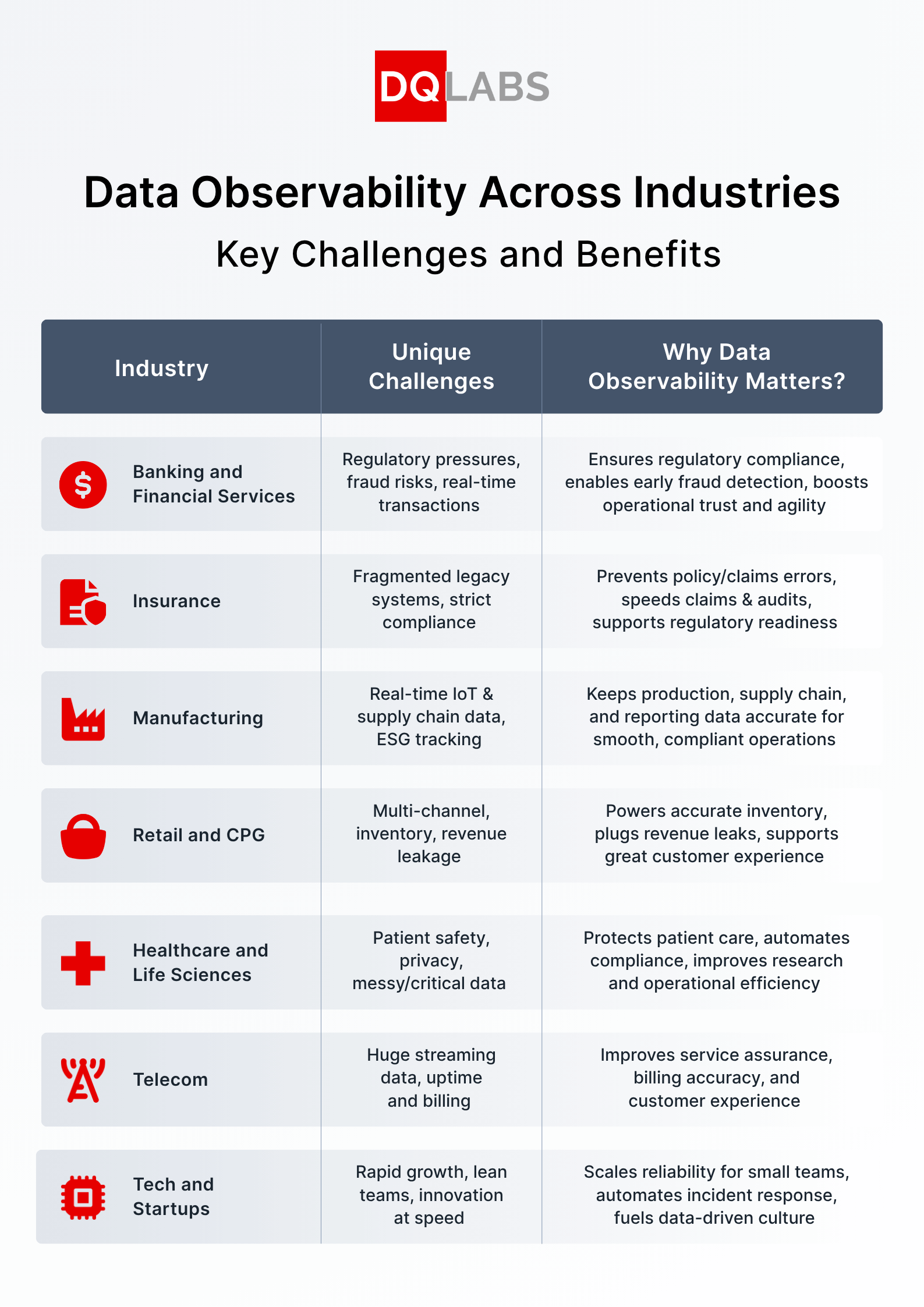

However, effective data observability does not look exactly the same in every industry. Different industries face unique data challenges — from strict regulatory requirements to massive real-time data volumes — and data observability practices must adapt to these contexts. Below, we explore the data observability challenges and benefits in seven key verticals: Banking, Insurance, Manufacturing, Retail/CPG, Healthcare, Telecom, and IT/Tech/Startups. In each case, the goal is the same: to ensure trusted, high-quality data for better decisions and outcomes. We focus on how data observability (with integrated data quality monitoring) helps technical data teams and leaders in each sector address their most pressing data issues and ultimately achieve data trust.

Data Observability for Banking and Financial Services

The banking and financial services sector runs on data – from transaction records to risk models – and any lapse in data integrity can carry huge risks. Banks face intense regulatory scrutiny and must comply with standards for accuracy in reporting (e.g. Basel accords, CCAR, FDIC regulations). They also deal with high volumes of transactions and real-time data feeds, where undetected errors or delays could result in financial loss or compliance breaches. The stakes are illustrated by incidents like the Wells Fargo fake accounts scandal, which in 2016 led to $185 million in fines and a tarnished reputation – a crisis partly attributable to lack of oversight and undetected data issues in account creation processes. This example underscores how critical proactive data monitoring is in banking; effective observability might have flagged anomalous account patterns and prevented such fallout.

Data observability helps financial institutions stay ahead of these challenges by providing comprehensive, integrated visibility into data pipelines and quality. In banking, this means detecting and resolving issues before they impact operations or regulatory reporting. Rather than finding out too late that a report was generated from incorrect data, a robust observability platform will alert teams to anomalies (like unexpected spikes in missing values, schema changes in a data feed, or reconciliation mismatches) in time to fix them. The result is fewer surprises and greater confidence in data-driven processes. Key benefits of data observability for banks include:

- Real-Time Regulatory Compliance: Continuous monitoring of data feeds and reports to ensure they meet regulatory standards (e.g. validating that capital ratios, AML reports, etc. are based on complete and correct data), helping avoid costly penalties and audits. For instance, observability tools can automatically flag out-of-range values or missing records in regulatory filings, allowing prompt correction and preventing compliance breaches. Keeping data under tight watch is crucial given that failure to deliver accurate data can lead to severe consequences in banking.

- Risk Management & Fraud Detection: By monitoring data quality and lineage, banks gain the ability to detect anomalies that could indicate fraud or model drift. Data observability enables early warning on irregular patterns – for example, a sudden surge in transaction volumes or missing risk factor data – so teams can investigate whether it’s a data issue or a legitimate business change. This proactive approach to data health supports more reliable risk models and fraud analytics, ultimately safeguarding the institution and its customers.

- Operational Efficiency & Trust: Banks often have siloed systems (core banking, CRM, payment platforms) and manual data reconciliation processes. Observability platforms help streamline data operations by automatically tracing data flows and pinpointing where errors occur (e.g. a broken ETL job or a dropped data field during transfer). This reduces the burden on analysts who previously spent time finding and fixing data issues. Automated alerts and root-cause analysis mean data engineering teams can resolve incidents faster, minimizing downtime. Over time, both technical teams and business stakeholders develop greater trust in the data, because they know any problems will be caught and addressed promptly. As one industry analyst noted, healthy data engenders consumer trust and efficient compliance operations, so a preventative data health strategy is critical.
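The checks mentioned in the list above (unexpected spikes in missing values, out-of-range values in a regulatory field) can be sketched in a few lines. This is a minimal illustration, not how any particular observability platform implements it; the function names, the 5-point tolerance, and the sample capital-ratio values are all assumptions made for the example.

```python
from typing import Optional


def null_rate(values: list[Optional[float]]) -> float:
    """Fraction of entries in a batch that are missing (None)."""
    if not values:
        return 0.0
    return sum(v is None for v in values) / len(values)


def check_batch(
    values: list[Optional[float]],
    baseline_null_rate: float,
    lo: float,
    hi: float,
    tolerance: float = 0.05,  # hypothetical allowance above the baseline
) -> list[str]:
    """Return human-readable findings for one batch of a numeric field."""
    findings = []

    # Missing-value spike: compare this batch against the historical rate.
    rate = null_rate(values)
    if rate > baseline_null_rate + tolerance:
        findings.append(
            f"null rate {rate:.2%} exceeds baseline {baseline_null_rate:.2%}"
        )

    # Out-of-range values: anything present but outside the allowed band.
    out_of_range = [v for v in values if v is not None and not (lo <= v <= hi)]
    if out_of_range:
        findings.append(f"{len(out_of_range)} value(s) outside [{lo}, {hi}]")

    return findings


# Hypothetical batch of ratio values expected to fall between 0 and 1.
batch = [0.12, None, 0.15, None, 1.7, 0.11]
for finding in check_batch(batch, baseline_null_rate=0.01, lo=0.0, hi=1.0):
    print("ALERT:", finding)
```

In practice the baseline and the allowed range would come from historical profiling of the feed rather than being hard-coded.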

Banking data teams are increasingly adopting data observability not just for fire-fighting issues, but as a strategic initiative to improve data reliability and agility. With robust observability, a bank can confidently pursue data-driven innovations (like real-time customer insights or AI-driven services) knowing that their underlying data is trustworthy and any anomalies will be swiftly dealt with. In an era of digital finance and open banking, this capability becomes a competitive advantage, enabling better customer experiences and new data-powered offerings without compromising on governance or accuracy.

Data Observability for Insurance

Insurance companies manage enormous amounts of data across policies, claims, underwriting, actuarial models, and customer interactions. Much of this data is sensitive and subject to complex regulations (such as HIPAA for health data, GDPR for customer data privacy, and industry-specific mandates like NAIC guidelines, IFRS 17 accounting standards, and Solvency II in Europe). The challenge is that insurance data often resides in fragmented legacy systems – from old policy administration systems to third-party data feeds – leading to silos and inconsistencies. Without strong oversight, insurers risk issues like mispriced premiums, claim disputes due to data errors, policy processing mistakes, or compliance violations. Indeed, poor data quality or visibility can result in everything from customer dissatisfaction to regulatory fines or reserve miscalculations that affect the company’s financial stability.

Data observability brings order and trust to the insurance data landscape by unifying visibility across all these disparate systems and data pipelines. Rather than relying on after-the-fact data cleansing or manual reconciliations (which are time-consuming and don’t scale), an observability platform continuously monitors data at each stage – ingestion, transformation, and consumption – to catch issues early. This is especially valuable in insurance, where timeliness and accuracy of data can directly impact decision-making (e.g. whether a claim is approved) and compliance. The benefits of data observability for insurance include:

- Preventing Policy & Claims Errors: By creating a central, unified view of data from underwriting through claims, observability helps catch inconsistencies before they cause errors. For example, if a customer’s information is updated in one system but not another, or if a batch of policy records fails to load overnight, the observability platform will detect those anomalies. This prevents situations like mispriced premiums, coverage gaps, or claims being processed with the wrong data. The result is fewer disputes and a smoother experience for policyholders, which ultimately protects customer trust in the insurer.

- Compliance and Audit Readiness: Insurance firms must adhere to strict reporting and data retention requirements. Data observability provides the tools to ensure regulatory compliance by tracking data lineage and quality for critical reporting data. For instance, an observability solution can maintain an audit trail showing how data flows from source to report, and verify that data used in solvency calculations or financial reports meets standards. This level of visibility is invaluable for regulations like HIPAA, GDPR, IFRS 17, Solvency II, and others, where insurers must demonstrate control over data accuracy and privacy. In short, observability helps stop compliance risks from lurking in legacy system chaos by surfacing potential issues (incomplete data, policy record errors, etc.) before they escalate.

- Faster Underwriting and Claims Processing: Slow, manual data reconciliation can bottleneck insurance operations. Data observability supports autonomous, real-time data quality checks that speed up core processes. For example, if data mismatches between an adjuster’s report and the master claims system are detected immediately, they can be resolved before they delay a claim payout. Observability tools employing AI can even auto-reconcile some discrepancies. This leads to faster underwriting decisions and claim settlements, improving customer satisfaction. Insurers also operate more efficiently, because they no longer wait for end-of-month reports to discover an issue that has been affecting approvals or risk models. Surfacing those “hidden” data problems early shortens cycle times and supports quicker, better-informed business decisions.
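The cross-system consistency check described above (a customer record updated in one system but not another, or records that never arrived) amounts to a keyed comparison. The sketch below assumes both systems can be exported as dictionaries keyed by customer ID; the system names, field names, and sample records are hypothetical.

```python
def reconcile(policy_system: dict, claims_system: dict, fields: tuple) -> dict:
    """Compare two keyed record sets and report gaps and field mismatches."""
    policy_keys = set(policy_system)
    claims_keys = set(claims_system)

    mismatched = {}
    for key in sorted(policy_keys & claims_keys):
        # Collect the fields whose values differ between the two systems.
        diffs = [
            f for f in fields
            if policy_system[key].get(f) != claims_system[key].get(f)
        ]
        if diffs:
            mismatched[key] = diffs

    return {
        "missing_in_claims": sorted(policy_keys - claims_keys),
        "missing_in_policy": sorted(claims_keys - policy_keys),
        "mismatched": mismatched,
    }


# Hypothetical extracts from a policy administration system and a claims system.
policy = {
    "C1": {"address": "1 Main St", "dob": "1980-01-01"},
    "C2": {"address": "5 Elm Rd", "dob": "1975-06-15"},
    "C3": {"address": "7 Pine Ct", "dob": "1992-03-09"},
}
claims = {
    "C1": {"address": "9 Oak Ave", "dob": "1980-01-01"},  # address not yet synced
    "C2": {"address": "5 Elm Rd", "dob": "1975-06-15"},
    "C4": {"address": "2 Bay Ln", "dob": "1988-12-24"},
}

report = reconcile(policy, claims, fields=("address", "dob"))
print(report)
```

A real deployment would run a comparison like this on a schedule and route non-empty reports to the owning team, rather than printing them.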

In a data observability-enabled insurer, data engineers and analysts get ahead of problems like data feed failures, schema changes in policy data, or anomalies in claims patterns. Modern platforms even leverage AI/ML for tasks such as anomaly detection in incoming claims (flagging suspicious fraud indicators) and self-tuning monitoring thresholds based on historical data patterns. This reduces alert noise while ensuring truly critical issues are caught. The bottom line is a more resilient, trustworthy data environment that underpins everything from customer-facing digital services (e.g. accurate quotes on a portal) to back-office analytics (e.g. risk forecasts). By adopting data observability, insurance companies can not only avoid costly data mistakes but also innovate with confidence, knowing their data foundation is solid.

Data Observability for Manufacturing

Manufacturing companies are increasingly data-driven, using information from production lines, supply chains, and IoT sensors to optimize operations. This industry deals with a broad spectrum of data – from inventory and ERP systems to real-time sensor readings from machines and devices (the realm of Industrial IoT). A key challenge is ensuring data consistency and accuracy across the entire production lifecycle. For example, a discrepancy between a factory sensor reading and the manufacturing execution system could lead to incorrect adjustments, impacting product quality or causing downtime. Similarly, errors in inventory or supply chain data might result in raw material shortages or overstock, hurting efficiency and revenue. Additionally, many manufacturers have sustainability and compliance reporting (like energy usage for ESG goals or safety regulations) that demand precise data tracking. In short, the manufacturing sector needs data observability to maintain both operational excellence and compliance in a highly complex, real-time data environment.

By implementing data observability, manufacturers gain the ability to continuously trust and act on their data from the plant floor to the executive dashboard. Rather than reacting to problems after they have disrupted operations (e.g. a production halt due to undetected data errors), teams can detect anomalies or pipeline issues early and address them before they escalate. Here are key ways data observability benefits manufacturing:

- Optimized Production & Supply Chain: Data observability helps maintain an accurate, up-to-the-minute picture of inventory and production data. It can automatically detect anomalies or mismatches between various systems – for instance, if inventory counts in a warehouse system don’t match the sales orders or if a data feed from a supplier is delayed or incomplete. Catching these issues enables manufacturers to avoid costly situations like running out of a critical component on the assembly line or, conversely, overproducing due to bad demand signals. By ensuring data feeding forecasting models is accurate, companies can build better demand forecasts and adjust production schedules dynamically. One global manufacturer noted that improving data trust not only boosted order fulfillment rates but also enabled accurate, dynamic adjustments to supply chain strategies based on predictive insights. In practice, observability tools can detect data drift, freshness issues, or consistency problems in incoming supply chain data, so planners can respond quickly (e.g. reroute shipments or update inventory allocations).

- Reliable IoT Sensor Data & Equipment Monitoring: Modern factories rely on thousands of IoT sensors monitoring equipment performance, environmental conditions, and output quality. Data observability is critical to ensure sensor data streams remain reliable for analytics and decision-making. These tools can automatically flag sensor readings that deviate significantly (e.g. sudden spikes or drops that suggest a faulty sensor or a real issue in the process) and compare incoming data distributions against historical baselines to spot subtle anomalies. They also track the data pipeline from sensors through edge devices to cloud databases, identifying any bottlenecks or delays in data delivery. This level of insight allows engineering teams to differentiate between a true equipment problem and a data glitch. If a sensor fails or a network node lags, observability alerts the team to fix the data pipeline, preventing blind spots. Accurate, timely sensor and machine data means manufacturers can do effective predictive maintenance (fix machines before they break) and ensure quality control systems are getting the right information. In short, observability keeps the pulse of the factory’s digital twin, so that any anomaly is noted and addressed immediately.

- Energy Efficiency and ESG Compliance: Many manufacturers have to report on energy usage, emissions, and other sustainability metrics. Data observability supports these efforts by tracking data quality and lineage for ESG reporting. For example, it can ensure that readings from energy meters or environmental sensors are accurate and consistently captured as they flow into sustainability dashboards or regulatory reports. If there’s a gap or irregularity (like a sensor outage or a schema change in how energy data is logged), the system will alert the team. This allows companies to correct issues proactively, so that the final ESG reports are accurate and auditable. Maintaining end-to-end lineage of emissions data – from factory sensor to the aggregated report – ensures transparency and compliance with environmental regulations. Additionally, by monitoring usage patterns, observability can help identify inefficiencies (e.g. machinery consuming abnormal energy due to calibration issues) and drive cost-saving or eco-friendly adjustments. The overall effect is that manufacturers can trust their sustainability data as much as their production data, reinforcing both regulatory compliance and corporate social responsibility goals.
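To make the sensor-monitoring idea above concrete, the sketch below compares incoming readings against a historical baseline using a simple z-score. This is one common technique, chosen here for illustration; the article does not say which method any given tool uses, and the threshold of 3 standard deviations and the temperature values are assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(
    baseline: list[float],
    readings: list[float],
    threshold: float = 3.0,  # assumed cut-off in standard deviations
) -> list[tuple[int, float]]:
    """Return (index, value) for readings far from the baseline mean.

    The baseline needs at least two points so a standard deviation exists.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any different value is a deviation.
        return [(i, r) for i, r in enumerate(readings) if r != mu]
    return [
        (i, r) for i, r in enumerate(readings)
        if abs(r - mu) / sigma > threshold
    ]


# Hypothetical temperature readings from one machine, in degrees Celsius.
history = [70.1, 69.8, 70.3, 70.0, 69.9, 70.2]
incoming = [70.0, 70.4, 85.2, 69.9]

for index, value in flag_anomalies(history, incoming):
    print(f"reading #{index} = {value} deviates from baseline")
```

A flagged reading only says the value is unusual. Deciding whether it reflects a faulty sensor or a real process issue still requires the pipeline and lineage context described above.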

From the plant operations perspective, data observability often translates into less unplanned downtime and more consistent output. Because the platform connects the dots across data, pipelines, and infrastructure, manufacturing IT teams can quickly troubleshoot issues that span multiple systems – for instance, an upstream data error causing a downstream analytics dashboard to show wrong KPIs. Moreover, advanced observability solutions (like DQLabs) incorporate autonomous features that auto-discover data relationships and self-tune monitoring. This means less manual configuration and more intelligent detection of what “normal” looks like in complex manufacturing processes. Ultimately, a well-observed data environment in manufacturing improves not just data quality, but real-world outcomes: smoother production runs, informed adjustments on the fly, compliance with safety and environmental standards, and a more agile supply chain.

Data Observability for Retail and CPG (Consumer Packaged Goods)

In retail and CPG, businesses succeed or fail based on how well they manage data about products, inventory, sales, and customers. These companies operate across multiple channels – brick-and-mortar stores, e-commerce platforms, supply chain networks, marketing systems, etc. – generating vast amounts of data. The challenge is ensuring all this data is accurate, timely, and consistent, so that the right products are in the right place at the right time and customers have a seamless experience. Data issues in retail/CPG can directly impact revenue and customer trust. Think of scenarios like an inventory database error that shows an item in stock when it’s not (leading to out-of-stock situations and lost sales), or misaligned sales data causing incorrect demand forecasts (resulting in overstock and waste). Even small data quality problems can scale into huge financial losses or reputational damage, especially during critical times like holiday shopping seasons or new product launches. Additionally, retail and CPG firms often run on tight margins, so revenue leakage due to billing errors, duplicate promotions, or missing transactional data is a significant concern.

Data observability empowers retail/CPG organizations by giving them end-to-end oversight of their data supply chain, from raw sales transactions and supplier feeds to consumer analytics and financial reports. By monitoring data continuously, observability helps ensure that operations and decisions are based on up-to-date and correct information. Here are key ways it provides value in this vertical:

- Accurate Inventory Management: Nothing frustrates customers (and hurts a retailer’s bottom line) more than inventory mishaps – either a product is out of stock when customers want to buy it, or too much stock leads to markdowns and waste. Data observability tackles this by detecting anomalies in inventory data across systems. For example, it can compare sales records, warehouse stock levels, and inbound shipment data and immediately flag discrepancies between recorded inventory and actual stock. If a batch of sales transactions failed to record properly or a store’s point-of-sale system didn’t sync, the observability platform will alert the data team. By catching these issues, retailers can correct course quickly (e.g. reallocating inventory or fixing the data integration) to avoid empty shelves or overstocks. Moreover, with high-quality historical and real-time data, companies can build more accurate demand forecasts, preventing costly out-of-stock or excess inventory situations. In practice, observability tools watch for data drift, missing updates, or inconsistency in key inventory metrics (like sell-through rates), ensuring planners always have reliable data. This level of accuracy helps retailers meet customer demand efficiently while minimizing carrying costs.

- Supply Chain Continuity and Visibility: Retail and CPG supply chains involve many steps – manufacturing, distribution centers, logistics providers, and store deliveries or direct shipments. Data flows along this chain (such as shipping manifests, tracking updates, delivery confirmations) need to be consistent and timely for smooth operations. Data observability provides a central nervous system for these data flows, monitoring each stage for delays, errors, or breakdowns. For instance, if an ETL job that updates the inventory position from a regional warehouse fails overnight, or a feed from a logistics partner is slower than usual, observability will detect it and raise an alert. By immediately flagging anomalies or failures in data processing workflows (like a slow-running job or a stalled data transfer), observability tools help minimize latency and ensure that decision-makers (merchandisers, supply planners, store managers) have timely, accurate data. This prevents the scenario of discovering a supply chain issue only after it has caused a stockout or delay. With end-to-end data lineage and freshness tracking, retailers gain full visibility into where each piece of data is and can quickly troubleshoot issues – effectively creating a resilient, data-driven supply chain that can respond to disruptions in real time.

- Preventing Revenue Leakage and Errors: In retail and CPG, small data errors can quietly drain revenue. Examples include: mismatches between sales and promotions leading to unpaid vendor rebates, billing mistakes in vendor invoices, or missing transaction records causing unaccounted sales. Data observability helps stop revenue leakage by reconciling data across systems and flagging inconsistencies that humans might miss. For instance, it can continuously audit sales orders, deliveries, and billing records to ensure they all line up – highlighting if there are delivered items not billed, or invoices for items never delivered. It also monitors promotional data: if a promotional sales file from a retailer shows significantly lower volume than expected, it may indicate missing data or improperly applied discounts. By catching such anomalies (e.g. discrepancies between actual sales vs. reported sales in a promotion), observability enables the company to investigate and correct issues before they become revenue losses. In addition, accurate data monitoring reduces the chance of customer-facing errors like incorrect pricing or loyalty point miscalculations that can erode customer trust. According to industry analyses, billing and data errors directly cause revenue leakage and customer churn in sectors like telecom and retail, so preventing these errors through observability has a clear ROI. Ultimately, plugging these data leaks improves profitability and ensures financial records reflect reality.
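The freshness tracking described above for supply chain feeds (an overnight ETL job that failed, or a partner feed running later than usual) can be sketched as a comparison of each feed's last load time against an allowed age. The feed names and age limits below are hypothetical, and a real platform would typically read load timestamps from pipeline metadata rather than a hand-built dictionary.

```python
from datetime import datetime, timedelta, timezone


def stale_feeds(
    last_loaded: dict[str, datetime],
    max_age: dict[str, timedelta],
    now: datetime,
) -> list[tuple[str, timedelta]]:
    """Return (feed, age) for every feed older than its allowed age."""
    stale = []
    for feed, loaded_at in last_loaded.items():
        age = now - loaded_at
        if age > max_age[feed]:
            stale.append((feed, age))
    return stale


# Hypothetical feeds with timezone-aware load timestamps.
now = datetime(2024, 1, 2, 6, 0, tzinfo=timezone.utc)
last_loaded = {
    "pos_sales": datetime(2024, 1, 2, 5, 30, tzinfo=timezone.utc),
    "warehouse_inventory": datetime(2024, 1, 1, 2, 0, tzinfo=timezone.utc),
}
max_age = {
    "pos_sales": timedelta(hours=1),             # expected to refresh hourly
    "warehouse_inventory": timedelta(hours=24),  # expected to refresh nightly
}

for feed, age in stale_feeds(last_loaded, max_age, now):
    print(f"{feed} is stale: last loaded {age} ago")
```

Passing `now` in explicitly, instead of calling the clock inside the function, keeps the check easy to test against fixed timestamps.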

Beyond these specific points, a strong data observability framework in retail/CPG fosters better customer experiences and analytics. When data about customers (e.g. purchase history, preferences) is consistent and timely, retailers can power personalized recommendations and marketing campaigns without error – leading to higher customer engagement. Conversely, if something goes wrong (say a personalization algorithm is using stale data due to a pipeline failure), the observability platform will alert data engineers to fix it, thus safeguarding the customer experience. Many retail/CPG firms also rely on dashboards for sales performance, inventory turnover, etc.; observability ensures those dashboards remain trustworthy by validating that the underlying data is complete and up to date. In summary, data observability acts as a quality control layer for all data-driven operations in retail/CPG, which is indispensable for maintaining efficiency, maximizing revenue, and keeping customers happy in a fast-paced market.

Data Observability for Healthcare and Life Sciences

Healthcare and life sciences organizations handle some of the most critical and sensitive data of any industry. Whether it’s patient electronic health records (EHRs), clinical trial results, medical device readings, or insurance billing data, the accuracy and availability of healthcare data can be a matter of life and death. The sector is also heavily regulated: standards like HIPAA (for patient privacy), FDA guidelines for clinical data, and various compliance requirements mandate strict control and auditing of data. Yet, healthcare data is notoriously messy and siloed – information might be spread across hospital departments, labs, pharmacies, and external providers, often with inconsistent formats and coding. Poor data quality in healthcare can directly impact patient safety, care outcomes, and compliance. For example, if a patient’s allergy information is recorded incorrectly, it could lead to a dangerous medication error. In fact, the World Health Organization reported in 2023 that 1 in 10 patients worldwide is harmed while receiving hospital care, with poor data (like inaccurate records) contributing to medication errors and incorrect treatments. This underscores the need for a “zero tolerance” approach to data errors in healthcare: the data feeding decisions and analyses must be as error-free as possible.

Data observability is becoming essential in healthcare as a means to ensure data integrity and reliability across the continuum of care. By deploying observability tools, healthcare organizations can continuously monitor the myriad data pipelines and repositories, catching errors or anomalies before they affect patient care or reporting. Here are key advantages in this domain:

- Patient Safety and Care Quality: The top priority in healthcare is delivering safe, effective care, and that depends on having correct, up-to-date data on each patient. Data observability helps achieve this by monitoring critical patient data flows for accuracy and completeness. For example, it can ensure that laboratory results from diagnostics devices are properly transmitted to EHR systems without delay, and that any missing or out-of-range values are flagged immediately. If a patient’s medication list in the pharmacy system doesn’t match the hospital’s record, or if a data integration from a wearable device stops, the observability platform will alert IT/data teams to fix it. Such early detection prevents scenarios like a doctor making a treatment decision based on incomplete data. High data reliability means clinicians can trust that the information (diagnoses, test results, histories) in front of them is correct, which reduces the risk of errors and improves patient outcomes. In short, observability adds a safety net: issues like duplicate records, inconsistent coding, or data delays that might otherwise slip through are caught and resolved, supporting a higher standard of care.

- Regulatory Compliance and Data Privacy: Healthcare providers and life science companies are subject to stringent regulations regarding data, not only for privacy (HIPAA, GDPR) but also for data usage and reporting (Meaningful Use requirements, clinical trial data integrity, etc.). Data observability assists in maintaining compliance by providing full visibility and auditability of data processes. It tracks data lineage meticulously – for instance, showing exactly how patient data moves from intake forms to billing systems to analytics, and who accesses it – which is invaluable for audits and demonstrating HIPAA compliance. Observability can also enforce data quality rules that align with regulatory standards, such as ensuring required fields are never null or that data updates propagate to all systems (preventing fragmentation). If a violation occurs (say, unauthorized access patterns or unexpected data changes that could hint at a breach), the system can alert compliance officers in real time. Moreover, by keeping data consistent across systems, observability helps with interoperability, which is a regulatory and operational goal in healthcare (e.g. under U.S. law, providers need to share patient data seamlessly). Ensuring clean, reconciled data across labs, EHRs, and insurers means better coordination and adherence to regulations on data exchange. In essence, observability provides the evidentiary support that healthcare organizations are managing data properly – reducing the risk of penalties, legal issues, and reputational damage due to data lapses.

- Operational Efficiency and Research Innovation: Healthcare organizations are complex, with numerous administrative and analytical processes (appointment scheduling, revenue cycle management, outcome analysis, medical research) that rely on data. Data observability can significantly improve operational efficiency by automating the detection of data issues that would otherwise consume countless hours. For example, hospital systems often struggle with duplicate or inconsistent patient records across departments. Observability tools can identify these duplicates or sudden divergences in patient data and trigger workflows to merge or correct records. This not only saves staff time but also ensures clinicians aren’t wasting time with incorrect data. Additionally, observability supports research and analytics (like population health studies or pharma R&D). Researchers need high-quality datasets aggregated from multiple sources; observability ensures that the data feeding these studies is complete and has not been corrupted or partially lost in transit. It flags anomalies in datasets that could skew results (for instance, a chunk of missing sensor readings in a medical device trial). By maintaining data integrity, observability helps scientists and data analysts trust their results, accelerating innovation. Furthermore, a strong observability practice can break down data silos by showing a unified view of data flows across the organization, enabling new insights. For example, linking operational hospital data with patient outcome data could reveal improvements – but only if both data streams are reliable and can be connected, which observability facilitates. Overall, the continuously monitored, high-quality data environment leads to smoother operations (like faster insurance claims processing, accurate reporting of quality metrics) and more impactful research, all while keeping patient data safe and correct.
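The duplicate-record problem mentioned above can be illustrated with a deliberately naive grouping on a normalized name and date of birth. Real patient matching is considerably harder (typos, name changes, shared birthdays) and usually relies on dedicated record-linkage methods, so treat this only as a sketch of the idea; the record layout and IDs are hypothetical.

```python
from collections import defaultdict


def normalize(record: dict) -> tuple[str, str]:
    """Build a matching key: lowercased name with collapsed spaces, plus DOB."""
    name = " ".join(record["name"].lower().split())
    return (name, record["dob"])


def find_duplicates(records: list[dict]) -> dict[tuple[str, str], list[str]]:
    """Group record IDs that share the same normalized key."""
    groups = defaultdict(list)
    for record in records:
        groups[normalize(record)].append(record["id"])
    # Keep only keys that map to more than one record.
    return {key: ids for key, ids in groups.items() if len(ids) > 1}


# Hypothetical records pulled from two departments.
records = [
    {"id": "A1", "name": "Jane  Doe", "dob": "1990-05-01"},
    {"id": "B7", "name": "jane doe ", "dob": "1990-05-01"},
    {"id": "C3", "name": "John Roe", "dob": "1985-11-30"},
]

for key, ids in find_duplicates(records).items():
    print(f"possible duplicate {key}: records {ids}")
```

Matches from a heuristic like this are candidates for review, not records to merge automatically, given the patient-safety stakes of a wrong merge.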

Implementing data observability in healthcare often involves AI-powered anomaly detection and automation, because the data volumes are large and the tolerance for error is low. Advanced platforms can learn the normal ranges for various healthcare data (admissions rates, sensor readings, etc.) and alert on deviations without being explicitly programmed for each scenario. They can also suggest root causes (for example, pointing to the specific interface or data source where bad data originates) to speed up resolution. The payoff is substantial: one healthcare analytics leader noted that combining a modern data cloud with a data observability platform enabled their team to resolve data problems before they reach business users, leading to happy executives and greater trust in data. When doctors, nurses, administrators, and researchers know that the data they use is consistently monitored and cared for, they can focus on their mission – improving health outcomes – rather than worrying about data correctness. In summary, data observability serves as a guardian of data quality in healthcare, supporting patient safety, compliance, and innovation in equal measure.

Data Observability for Telecommunications

Telecommunications companies (telecoms) operate some of the most complex data ecosystems, with massive volumes of streaming data and a high demand for uptime. Telcos collect data from network devices (cell towers, routers, switches), customer usage records (call detail records, data usage logs), billing systems, support systems, and more. This data is critical for running the network efficiently, billing customers correctly, detecting fraud, and delivering new services. The challenges in telecom data management include the sheer scale and speed of data (e.g. real-time network telemetry generating millions of events per minute), the need for near-100% availability (downtime or data loss can cause service outages or revenue impact), and ensuring accuracy in things like billing and regulatory reporting (telcos must often report metrics to authorities, and any errors can lead to fines). A small data mistake, such as a misapplied billing rate or a lost usage record, can cascade into significant revenue leakage or customer dissatisfaction. In fact, billing errors are a well-known source of revenue loss in telecom: if billing data is inaccurate or incomplete, companies can lose money and goodwill – one industry survey found communications providers lost an estimated 2-3% of revenue to such issues. Thus, data observability is a must-have for telecom operators to maintain data trust internally and with customers.

By adopting data observability, telecom companies gain the ability to continuously watch over their data pipelines and act on issues before they impact customers or the bottom line. Here’s how data observability specifically benefits the telecom sector:

- Network Performance and Service Assurance: Telecom networks generate enormous streams of operational data (latency metrics, error logs, throughput stats, etc.). Data observability tools can ingest and monitor these streams in real time, providing a sort of data pulse for the network’s health. If there’s an anomaly – for instance, a sudden drop in traffic from a group of cell towers (possibly indicating an outage in a region) or unusual spikes in network errors – the observability platform will detect it immediately. This allows network operations teams to respond more quickly, potentially even before customers notice the issue. In other words, data observability takes the concept of observability (widely used in monitoring telecom hardware/software) and applies it to the data that those systems produce. The result is better uptime and performance: telecoms can maintain proactive service assurance by catching data signals of trouble early. Additionally, data observability helps ensure that network analytics dashboards (used to manage capacity and quality) are fed with correct data. If a data pipeline feeding a NOC (Network Operations Center) dashboard fails or lags, the platform will alert the data engineers to fix it, so decision-makers are always viewing accurate, current information.

- Billing Accuracy and Revenue Protection: Telecom billing involves processing huge numbers of usage records and applying complex rating logic. Any data issue in this pipeline – such as missing call records, incorrect usage aggregation, or a delayed update of tariff rates – can lead to billing errors. These errors might overcharge customers (hurting satisfaction and causing churn) or undercharge them (direct revenue loss), and can also incur regulatory penalties for inaccuracies. Data observability is extremely valuable here as it provides continuous monitoring and reconciliation of billing data. For example, it can reconcile the number of calls or data sessions recorded in the network logs with the records that made it into the billing system, flagging any discrepancies. Observability systems can also detect if a particular usage file from a switch is missing or if there’s a format/schema error that caused some records to be dropped. By catching such issues, telecoms can prevent revenue leakage and ensure customers are billed correctly. As a result, they avoid both financial losses and customer frustration. In essence, observability safeguards the revenue cycle by guaranteeing that every bit of billable usage data is accounted for. According to telecom experts, even small error rates can translate to big losses at telco scale – billing errors lead to revenue leakage, customer churn, and even regulatory fines, whereas accurate data drives profitability and loyalty. Data observability provides the framework to achieve that accuracy at scale.

- Customer Experience and Fraud Detection: Telecoms rely on data to understand and improve customer experience – for example, data about dropped calls, broadband speed, or customer interactions can highlight where service is subpar. Data observability ensures that such customer-related metrics are correctly collected and reported. If a stream of customer experience metrics (like quality-of-service stats from user devices) suddenly shows unusual values, observability helps discern if it’s a true service issue or a data anomaly. This prevents misdirected efforts (chasing a problem that is actually a data error) and ensures genuine issues are addressed. Additionally, observability assists in fraud management by monitoring data for anomalies that could indicate fraudulent activity (such as abnormal usage patterns that a fraud detection system might miss if the data feed has gaps). By verifying the completeness of data to fraud analytics, it ensures those systems have the full picture. Furthermore, telecom companies often integrate data observability with their broader operations support systems. For example, alerts from the observability platform can tie into ticketing systems or on-call rotations, so that data engineers respond just like site reliability engineers would to an infrastructure alert. This integration fosters a culture where data reliability is treated as part of the service reliability. The outcome is that customers experience fewer data-related hiccups – bills are accurate, services are consistent, and issues (like a sudden network glitch) are resolved faster – all contributing to higher satisfaction and retention.
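The billing reconciliation described in the list above can be sketched as a set comparison between the usage records seen on the network and those that reached billing. The record ID format and the `reconcile` function are hypothetical; a production check would run against the actual usage and billing stores rather than in-memory lists.

```python
def reconcile(network_ids, billing_ids):
    """Compare usage record IDs from the network with those loaded into billing."""
    network, billing = set(network_ids), set(billing_ids)
    return {
        "network_count": len(network),
        "billing_count": len(billing),
        # Usage that was recorded but never billed (potential revenue leakage).
        "missing_from_billing": sorted(network - billing),
        # Billed records with no matching network usage (potential overcharge).
        "unexpected_in_billing": sorted(billing - network),
    }

# Made-up call detail record IDs for illustration.
network_log = ["cdr-001", "cdr-002", "cdr-003", "cdr-004"]
billing_system = ["cdr-001", "cdr-002", "cdr-004", "cdr-999"]

report = reconcile(network_log, billing_system)
print(report)
```

Note that matching counts alone would hide this problem: both sides hold four records here, yet one is missing and one is unexpected, which is why the comparison is done on identifiers.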

In summary, data observability acts as an early warning system and quality control for telecom data. It’s like having a 24/7 automated watchdog that is continuously auditing everything from network event logs to customer billing records. Given the complexity of telecom operations, no human team could manually monitor all this in real time, but an AI-driven observability platform can. Some advanced solutions even optimize themselves, reducing false alarms by learning normal fluctuations in network data and focusing on truly abnormal events. The payoff for telecoms is substantial: fewer billing disputes (saving time and money), reduced revenue leakage, better compliance with reporting obligations, and an improved ability to plan and optimize the network using trustworthy data. In an industry where trust and reliability are paramount – customers expect their phone and internet to “just work” and be billed correctly – data observability has become a key enabler of delivering on those expectations.

Data Observability for IT/Tech Companies and Startups

Technology companies and startups are often at the forefront of leveraging data for competitive advantage. Whether it’s a SaaS company analyzing user behavior, a marketplace optimizing its algorithms, or a hardware firm monitoring device data, these organizations depend on data to innovate and move quickly. Unlike more traditional industries, tech companies tend to have modern data stacks in the cloud and a culture of data-driven decision making. However, they also face unique challenges: rapid growth (data volumes and use cases can explode in a short time), lean teams (startups might have just a handful of data engineers or no dedicated data team at all), and the need to maintain trust and reliability at speed. In a startup, a single critical data outage or a bad data-driven decision can be especially damaging, potentially derailing product development or undermining stakeholder confidence. Moreover, tech companies often practice continuous deployment and fast iteration, meaning data pipelines are frequently changing – this agility is great for innovation, but increases the risk of unseen data issues slipping in unless observability is in place.

Data observability is thus a crucial component for IT/tech companies and startups to ensure their agility doesn’t come at the expense of data accuracy and trust. Essentially, it allows small teams to scale their data operations intelligently, catching problems automatically that they would never have the bandwidth to manually test for. Here’s how data observability benefits this fast-paced sector:

- Scaling Data Reliability with Small Teams: Startups often have to do more with less. A data observability platform serves as a force multiplier for a lean data/engineering team by providing out-of-the-box monitors and automated checks across the entire data stack. Instead of writing countless custom tests or manually inspecting data, a two-person data team can rely on observability to highlight where attention is needed. This was exemplified by one tech company that deployed observability and achieved 4x greater data quality coverage compared to their previous manual testing approach. With thousands of datasets being monitored automatically, they caught issues that manual tests missed, all without having to massively expand headcount. In practical terms, observability tools will check schema changes, data volume thresholds, distribution changes, etc., on every data pipeline and table, alerting the team only when something goes off track. This means even a very small team can confidently maintain hundreds or thousands of data assets, because they have a safety net that scales effortlessly with data growth. As a result, startups can focus their energy on product development and analytics rather than firefighting data glitches.

- Rapid Incident Response and DevOps Integration: Tech companies typically embrace DevOps practices, and data observability fits neatly into this model by enabling fast, automated incident response for data issues. When an anomaly is detected (say, a critical metrics table didn’t update, or an ETL job produced a spike in null values), the observability platform can route alerts to the right on-call engineer or Slack channel. Modern solutions often integrate with tools like PagerDuty, Opsgenie, or other incident management systems, so that data incidents are handled with the same rigor and urgency as application outages. For example, one engineering team set up their observability tool to push alerts into their existing monitoring dashboards and pager system, which meant that a data pipeline failure at 2 AM would wake someone up just as a server outage would – ensuring no lengthy downtime for data products. Additionally, observability provides centralized visibility for resolving incidents: a single UI or dashboard shows all data checks, lineage, and recent changes, helping engineers pinpoint root causes in minutes. This efficient triage means minimal disruption to end-users or internal teams waiting on data. In essence, observability allows tech companies to respond to data problems at the speed of their business – which is often real-time – and maintain a reputation for reliability even as they move fast. It’s common to hear that data observability is the first step before any data incident management or escalation can happen, underscoring how it underpins a strong incident response process.

- Building Data Trust and Data-Driven Culture: In the tech/startup world, having a data-driven culture is considered a key to success – but that culture only thrives if people trust the data. Data observability helps instill trust by ensuring high data quality is continuously maintained and by making data reliability a visible, shared concern across the organization. Many tech companies view observability as more than just a toolset; it’s a mindset that everyone from engineers to executives embraces. As one senior data engineer described, “Data observability is more than just data quality. It’s becoming a cultural approach… companies understand that having high-quality data is a crucial part of their business.” This means that with robust observability, data quality stops being an afterthought and becomes a proactive part of the development cycle. For instance, when launching a new feature that relies on data, teams will have observability checks in place from day one (often as code, using CI/CD integration). This proactive stance prevents the erosion of trust that can happen when each new product or analysis introduces unknown data issues. Furthermore, observability often provides democratized insights through user-friendly dashboards or reports on data health. This allows not just engineers, but product managers, analysts, and even customer support, to see the status of data pipelines and understand if an issue might be affecting their work. Such transparency breaks down silos and reinforces a culture where data is treated as a first-class asset – monitored and cared for just like application performance or any critical infrastructure. The outcome is that everyone can innovate faster because they trust the data to be accurate and fresh, and if something does go wrong, they know the mechanisms are in place to catch and fix it quickly.
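The automated checks mentioned in the list above (schema changes, volume thresholds) and the idea of keeping checks "as code" can be illustrated with a small sketch. The column names, the 50% drop threshold, and both function names are assumptions for the example; they do not reflect any particular vendor's API.

```python
def check_schema(expected, actual):
    """Compare two {column: type} mappings and describe any drift."""
    issues = []
    for col, typ in expected.items():
        if col not in actual:
            issues.append(f"missing column: {col}")
        elif actual[col] != typ:
            issues.append(f"type changed: {col} {typ} -> {actual[col]}")
    for col in actual:
        if col not in expected:
            issues.append(f"unexpected column: {col}")
    return issues

def check_volume(row_count, previous_count, max_drop=0.5):
    """Flag a load whose row count fell by more than max_drop versus the previous load."""
    if previous_count > 0 and row_count < previous_count * (1 - max_drop):
        return [f"row count dropped from {previous_count} to {row_count}"]
    return []

# Hypothetical table contract versus what the latest load actually produced.
expected = {"user_id": "int", "event": "str", "ts": "timestamp"}
actual = {"user_id": "str", "event": "str", "country": "str"}

issues = check_schema(expected, actual) + check_volume(400, 1000)
for issue in issues:
    print(issue)
```

Run as a step in a CI/CD pipeline, a non-empty `issues` list could fail the build or post to an on-call channel, so that a breaking change is caught before it reaches downstream dashboards.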

For IT startups especially, adopting a comprehensive data observability platform early on can prevent growing pains later. It allows them to avoid the scenario of scaling up a product, acquiring many users, and then discovering that their metrics or data pipelines can’t be trusted. By catching data quality issues at the source (the so-called “left-hand side” of the data lifecycle), observability ensures that only reliable data flows downstream into analytics and business decisions. Additionally, many tech companies leverage the AI/ML capabilities of modern observability tools – such as ML-driven anomaly detection and auto-thresholding – to reduce false positives and focus their attention efficiently. For example, DQLabs (as a modern observability solution) uses intelligent pattern recognition to reduce false alerts by up to 90%, and it auto-discovers data relationships and self-tunes monitoring based on historical patterns. These autonomous features are a boon for fast-moving tech teams, as they minimize manual configuration and let the system adapt as the data evolves. In short, data observability gives tech companies and startups a way to move fast without breaking things (data-wise) – maintaining the integrity and trustworthiness of data as they rapidly expand their products and services.

The DQLabs Advantage: Unified Data Observability and Data Quality for Trusted Data

Across all these industries – from highly regulated finance and healthcare to dynamic tech startups – one theme is clear: data observability is key to building and maintaining data trust. By monitoring data health in real time, providing end-to-end visibility, and proactively alerting on issues, observability tools help organizations avoid the pitfalls of bad data and harness their data for strategic advantage. However, not all data observability solutions are created equal. A truly effective platform needs to cover the full spectrum of data reliability concerns (data quality, pipeline performance, infrastructure, cost, etc.) and also help resolve issues, not just detect them. This is where DQLabs stands out as a leader in the field.

DQLabs is an AI-driven, semantics-powered data observability platform that takes a comprehensive approach to ensure reliable data. It is the only solution that provides multi-layered data observability – covering everything from the data content itself (values, schemas, quality) to data pipelines and transformations, and aspects like data usage and cost optimization. By synthesizing signals across all these layers, DQLabs delivers comprehensive, actionable information with root cause analysis, so data teams can quickly pinpoint and address problems. Unlike siloed tools that might just monitor one aspect (for example, a tool that only does data quality checks or only pipeline monitoring), DQLabs provides a single pane of glass where all data reliability issues can be seen in context and drilled into. This integrated view is incredibly valuable in complex enterprise environments – no more blind spots between your ETL tool, your cloud warehouse, and your BI dashboards, because DQLabs is observing the entire ecosystem holistically.

DQLabs also provides self-tuning capabilities that adjust monitoring thresholds based on historical patterns, drastically reducing false positives and alert fatigue. This means when DQLabs notifies your team, you can trust it’s something truly worth attention – and you may even get a suggested solution alongside the alert. The platform’s autonomous operations (such as eliminating the need to manually create hundreds of data quality rules by instead using ML-driven data profiling) free up data engineers to work on higher-value tasks. One concrete benefit reported is the ability to reduce false alarms by about 90% through intelligent pattern recognition, focusing teams on real issues. In essence, DQLabs not only protects your data quality but does so in a smart, efficient way that scales with your organization.

Another key differentiator of DQLabs is that it uniquely bridges data observability and data quality in one solution. Data quality (ensuring the correctness and completeness of data against business rules) is actually a subset of observability, and DQLabs has deep capabilities here – from automated data profiling and cleansing to master data management integration. In fact, DQLabs is the only data observability vendor that also offers a complete data quality solution, and it has been recognized for this breadth. Gartner has featured DQLabs in its Magic Quadrant for Data Quality Solutions as the sole Visionary vendor, highlighting the platform’s innovative fusion of observability and quality management. This recognition affirms that with DQLabs, organizations don’t need to juggle separate tools for observability and data quality – they get an end-to-end data trust platform. The advantage is evident: when an issue arises (say a data schema change causes some records to be dropped), DQLabs can catch it (observability), evaluate its impact on data correctness (quality), and even assist in resolving it (through suggested transformations or enrichments), all within one workflow. This accelerates the journey from problem detection to solution implementation dramatically.

In conclusion, as organizations in banking, insurance, manufacturing, retail/CPG, healthcare, telecom, and tech increasingly recognize the importance of data reliability, data observability is becoming a foundational practice. It addresses the very real challenges of today’s data landscapes – complexity, speed, distributed systems, and the critical need for trusted data – by providing continuous insight and control. Investing in data observability yields returns in the form of prevented crises, saved time and costs, improved compliance, and greater confidence in data-driven initiatives. It allows data teams and business stakeholders alike to focus on using data to drive value, rather than constantly worrying about its validity.

By choosing a comprehensive platform like DQLabs for data observability, organizations get the full package: robust monitoring across all data touchpoints, intelligent automation and AI-driven anomaly detection, integrated data quality management, and actionable remediation guidance. This means not only finding out what went wrong, but also understanding why and how to fix it – fast. DQLabs customers gain a trusted data foundation, where data issues are caught at the source and prevented from cascading downstream. The end result is data you can trust, in every project, every report, and every decision. In a world increasingly run by data, such observability is not just an IT concern; it’s a business imperative for building resilience, innovation, and competitive edge. With DQLabs and a strong data observability strategy, enterprises across all industries can finally achieve the elusive goal of data you can truly trust.
