What Is Data Observability?

Detect and fix data issues before they disrupt your business, inflate costs, or derail AI.

Data Observability gives data engineering teams unparalleled visibility into pipeline health, schema changes, data drift, lineage and anomalies (data, pipelines, performance, usage, cost)—so they are the first to know when data breaks, what broke, and how to fix it.

Data Observability is Multi-Layered

Data observability requires a multi-layered approach that matures from foundational data and pipeline health checks up to comprehensive, business-aligned governance, delivering trusted data and control across every layer of the data and AI ecosystem.

DQLabs Data Observability Platform

AI-Driven, Semantics-Powered, Autonomous

The DQLabs Advantage – It is the only complete solution that provides multi-layered Data Observability. It not only helps you detect problems, which on its own can create noise, but also helps you fix them.

Automated Real-Time Monitoring across Data, Pipelines, Reports and Usage

AI/ML-powered Anomaly Detection - data volume, freshness, or distribution

Clean, intuitive, no-code UI and OOTB Visualizations

Single source of End-to-end pipeline observability

RCA with Lineage and Table/Field Level Scores

Semantic-aware alerting - Less noise and less alert fatigue

Reduced MTTR - Auto-routing of Issues with enriched semantics

Reduce false positives by 90% through intelligent pattern recognition

Autonomous Operations - Auto-discover data relationships and dependencies

Self-tune monitoring thresholds based on historical patterns

Proactively recommend corrective actions

Eliminate manual rule creation through ML-driven profiling

How Do You Benefit?

$1.5M+

Annual Savings

From early detection of broken pipelines, preventing analytics/AI delays and costly downstream reprocessing

70%

Less Engineering Workload

Freeing up time for innovation instead of firefighting data issues

3X

Faster Incident Resolution

Reducing business impact and improving SLA compliance

50–80%

Fewer Dashboard Errors

Improving executive confidence and reducing decision risk

Up to $500K

Saved Per Year

By eliminating redundant data validation, streamlining data quality ops with automation and replacing legacy tools

$250K–$1M

Saved Per Year

By getting visibility into your resource consumption and cost observability

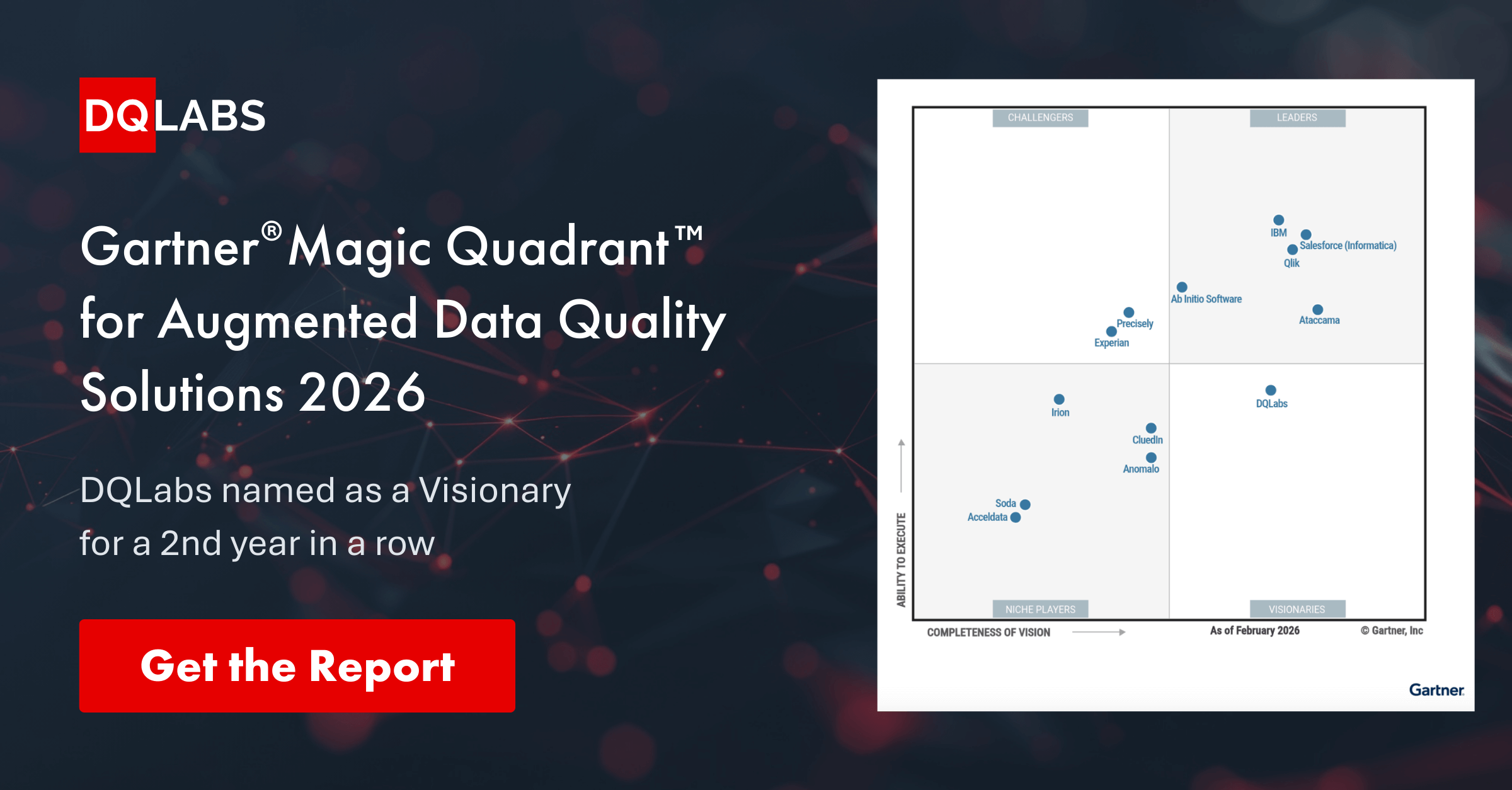

Industry Recognition & Validation

Frequently Asked Questions

What is data observability, and how is it different from data quality monitoring?

Data observability provides full-stack, real-time visibility into your data pipelines, jobs, infrastructure, and downstream usage. While data quality checks focus on validating values and rule conformance, observability detects issues like pipeline failures, schema drift, late data, and anomalies—even before quality tests fail. DQLabs unifies both under one platform.

How does DQLabs' anomaly detection differ from traditional monitoring?

DQLabs uses machine learning to understand normal data patterns and semantic context, reducing false positives by 90% compared to static threshold-based systems. Our AI continuously learns and adapts as your data evolves.

Traditional data monitoring typically relies on manually set thresholds for a few metrics and reacts to alerts (“Is something wrong?”). DQLabs Data Observability, by contrast, offers a holistic and intelligent view that answers “Why is it wrong, and what do we do?” It correlates signals across data quality, pipelines, infrastructure, and usage to provide deep insights and root cause analysis. For example, monitoring might tell you a pipeline job failed, but DQLabs observability will tell you which data was affected, who downstream is impacted, and the likely cause (such as a schema change), often with recommended fixes.

We also leverage AI/ML to detect issues you didn’t explicitly set rules for (unknown unknowns), which basic monitoring would miss. In short, DQLabs goes beyond monitoring to deliver proactive anomaly detection, automatic lineage, and business context, so you can not only detect issues but also resolve them rapidly and prevent them in the future.
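To make the contrast between a static threshold and a learned baseline concrete, here is a minimal Python sketch. It is not DQLabs' implementation: the function names, the fixed minimum of 900 rows, and the simple z-score rule are all assumptions chosen for illustration, and a production system would likely use richer models that account for seasonality and semantic context.

```python
from statistics import mean, stdev


def static_threshold_alert(row_count: int, minimum: int = 900) -> bool:
    """Hand-set rule (hypothetical): alert only when volume drops below a fixed floor."""
    return row_count < minimum


def learned_baseline_alert(history: list[int], row_count: int, z_limit: float = 3.0) -> bool:
    """Illustrative learned rule: alert when the new value is more than
    z_limit sample standard deviations away from the historical mean."""
    if len(history) < 2:
        return False  # not enough history to estimate a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return row_count != mu  # perfectly flat history: any change is unusual
    return abs(row_count - mu) / sigma > z_limit


# Made-up daily row counts for one table.
history = [1000, 1020, 980, 1010, 990, 1005, 995]

# A drop to 940 rows stays above the fixed floor, so the static rule is silent,
# while the learned baseline flags it as far outside the usual range.
static_fires = static_threshold_alert(940)
learned_fires = learned_baseline_alert(history, 940)
```

Because the baseline is recomputed from recent history, the acceptable range moves with the data instead of waiting for someone to retune a hard-coded number.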

What types of data quality measures does DQLabs support?

We provide 150+ out-of-the-box measures across reliability, distribution, frequency, and statistical categories, plus custom conditional, behavioural, and query-based measures for specific engineering and business needs.

Can DQLabs track schema drift across environments and datasets?

Yes. DQLabs continuously monitors schema changes at the table and column level across Snowflake, Databricks, BigQuery, and external data lakes. Changes are automatically detected, versioned, and visualized using lineage.
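At its simplest, column-level schema drift detection compares two snapshots of a table's schema. The sketch below illustrates the idea in Python and is not the DQLabs API: `diff_schema` and the dictionary-based snapshot format (column name mapped to type name) are hypothetical, and how the snapshots are collected from each platform is left out.

```python
def diff_schema(previous: dict[str, str], current: dict[str, str]) -> dict[str, list]:
    """Compare two {column: type} snapshots and report what changed."""
    added = sorted(set(current) - set(previous))
    removed = sorted(set(previous) - set(current))
    # Columns present in both snapshots whose declared type differs.
    retyped = sorted(
        (col, previous[col], current[col])
        for col in set(previous) & set(current)
        if previous[col] != current[col]
    )
    return {"added": added, "removed": removed, "retyped": retyped}


# Made-up snapshots of the same table taken on two different days.
yesterday = {"id": "INT", "email": "VARCHAR", "amount": "FLOAT"}
today = {"id": "BIGINT", "email": "VARCHAR", "currency": "VARCHAR"}

drift = diff_schema(yesterday, today)
```

Storing each snapshot alongside its diff would give a version history of the schema, which can then be joined to lineage to see which downstream assets a change touches.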

Can DQLabs integrate with our existing data catalog?

Yes, we have native integrations with Alation, Collibra, and Atlan, plus APIs for custom catalog connections. Metadata flows bidirectionally to maintain consistency.

How does DQLabs monitor pipeline health and performance?

DQLabs integrates with orchestrators like dbt and Airflow to track pipeline execution, success/failure rates, run times, and freshness SLAs. Failed jobs, retries, or latency issues are flagged automatically. DQLabs provides an aggregated view of pipeline health across your stack.
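The metrics named above (success rate, run time, freshness against an SLA) can be derived from plain run records. The following Python sketch shows one way to compute them. It is an assumption-laden illustration, not DQLabs code and not the dbt or Airflow API: the `Run` record and `summarize` function are hypothetical, and pulling run metadata from an orchestrator is out of scope here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Run:
    """Hypothetical record of one pipeline execution."""
    pipeline: str
    finished_at: datetime
    duration_s: float
    succeeded: bool


def summarize(runs: list[Run], now: datetime, freshness_sla: timedelta) -> dict:
    """Aggregate run records into simple health metrics for one pipeline."""
    if not runs:
        raise ValueError("at least one run is required")
    successes = [r for r in runs if r.succeeded]
    last_success = max((r.finished_at for r in successes), default=None)
    return {
        "success_rate": len(successes) / len(runs),
        "avg_duration_s": sum(r.duration_s for r in runs) / len(runs),
        # Stale when there is no successful run at all, or the latest
        # successful run is older than the freshness SLA allows.
        "stale": last_success is None or now - last_success > freshness_sla,
    }


# Made-up history: yesterday's run succeeded, today's run failed.
runs = [
    Run("orders", datetime(2024, 1, 1, 6, 0), 120.0, True),
    Run("orders", datetime(2024, 1, 2, 6, 0), 180.0, False),
]
health = summarize(runs, now=datetime(2024, 1, 2, 12, 0), freshness_sla=timedelta(hours=24))
```

In this example the failed run means the last good data is 30 hours old, so the pipeline breaches a 24-hour freshness SLA even though a job did execute today.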

How quickly can we see results from DQLabs?

Most customers see value within 2 weeks of deployment. Our automated profiling provides instant insights into your data quality baseline, while ML models begin delivering accurate anomaly detection within the first month.

What's the typical implementation timeline?

Standard deployment takes 4-6 weeks, versus an industry average of 6 months. Our semantic-driven auto-discovery eliminates the months of manual configuration required by traditional solutions.

How does DQLabs handle sensitive data and compliance requirements?

DQLabs is SOC 2 Type II certified and supports HIPAA, GDPR, and other compliance frameworks. We offer flexible deployment options including private cloud, on-premises, and hybrid architectures. Our metadata-first approach means sensitive data never leaves your environment—we only analyze data patterns and structures, not the actual content.

How does DQLabs pricing compare to competitors?

Our value-based pricing model typically costs much less over time than credit-based systems such as Monte Carlo, AccelData, and others (according to customers, as much as 50-60% less). You pay for business value delivered, not technical consumption metrics.

Do you offer a free trial or proof-of-concept?

Yes, we provide proof-of-value engagements in which we demonstrate how our product can deliver measurable improvements in your data quality and trust within an agreed timeline.

See DQLabs in Action

Discover Agentic AI-powered observability, quality, and discovery in one unified platform.

Book a Demo