In 2025, two marquee events, Snowflake Summit and the Databricks Data + AI Summit, showcased how rapidly the data landscape is evolving. Both conferences unveiled major innovations that signal a new era of enterprise AI, data engineering, and analytics. For data engineers, data professionals, CDOs, and teams focused on data quality and observability, the message was clear: cloud data platforms are becoming AI-native, low-code, and highly governed environments.

In this blog, we break down the key takeaways from each summit and explore the common themes that emerged, from agentic AI and semantic layers to business user empowerment and robust data governance. Finally, we discuss what these shifts mean for enterprise data teams striving for AI readiness and trusted data pipelines.

Snowflake Summit 2025: Key Takeaways

1. Agentic AI and Natural Language Interfaces

Snowflake put generative AI at the core of its platform with the introduction of Snowflake Intelligence—an AI-driven assistant that allows business users to securely query both structured and unstructured data in plain English and get insights or perform actions without writing SQL. This AI is deeply integrated into Snowflake’s governed environment, ensuring enterprise trust and compliance.

A Data Science Agent is also in the works, providing data scientists with an AI copilot that automates parts of the ML lifecycle—data prep, transformation, and experimentation—using prompts instead of scripts.

Another major innovation, Cortex AISQL, enables SQL users to analyze documents, images, and other unstructured formats directly using AI functions embedded in SQL syntax.

Why it matters: Snowflake is pushing toward a future where data interaction is conversational and proactive, helping both technical and non-technical users make faster, AI-assisted decisions without leaving the platform—or writing code.

2. Openflow and Low-Code Engineering for Unified Data Movement

Snowflake unveiled Openflow, a visual, low-code ingestion and transformation service powered by Apache NiFi. It supports a wide range of pipelines (batch and streaming, structured and unstructured) and comes with hundreds of prebuilt connectors, including change data capture (CDC) from legacy databases such as Oracle.

Paired with first-class support for dbt projects (including version control, AI-assisted development, and native scheduling), Openflow consolidates data engineering workflows and reduces reliance on external orchestration tools.

Snowflake also doubled down on openness by supporting Apache Iceberg tables and launching the Open Catalog, allowing teams to interact with data in open formats without sacrificing performance, governance, or interoperability.

Why it matters: Openflow and Iceberg support underscore Snowflake’s evolution into a flexible, open, low-code data platform capable of meeting hybrid data architecture needs.

3. Semantic Views and Performance Optimization

A key pain point for many organizations—metric inconsistency across tools—is being addressed through Semantic Views, now in public preview. This built-in semantic layer enables teams to define core business metrics, hierarchies, and relationships once, and use them consistently across dashboards, AI assistants, and SQL queries.

Performance improvements weren’t left behind. The Standard Warehouse Gen 2 delivers a 2X+ performance boost on typical workloads, while SnowConvert AI can automatically translate code from legacy systems (e.g., Teradata, SQL Server), accelerating migration and reducing risk.

Why it matters: These updates reinforce Snowflake’s push toward faster, more consistent analytics, built on centralized, well-defined business logic.

4. AI-First Marketplace and App Ecosystem

With its Snowflake Native Apps and expanded Marketplace, Snowflake is morphing into an AI application platform. Enterprises can now install or build AI-powered applications—like data quality validators, automated anomaly detectors, or domain-specific copilots—right inside Snowflake.

New Cortex Knowledge Extensions even allow these apps to tap into real-time external sources (e.g., Stack Overflow, USA Today), with enterprise-grade security and attribution control.

Why it matters: Snowflake is building an ecosystem where teams can plug in AI apps like Lego blocks—accelerating value delivery while preserving governance.

5. Enterprise-Grade Governance, Observability, and Security

Snowflake also focused heavily on trust and control. The expanded Horizon Catalog now supports discovery, governance, and classification of not just Snowflake assets but also external data sources, BI dashboards, and semantic models.

To simplify policy management, Snowflake added a Horizon Copilot that uses natural language to help users find data, enforce permissions, or understand metadata.

Security got a lift with stronger MFA options and a new Trust Center. Observability also expanded: Snowflake Trail now includes full telemetry for Openflow pipelines, AI agents, and user interactions—offering deep insights into data lineage, health, and behavior.

Why it matters: Snowflake isn’t just scaling workloads—it’s scaling trust, giving organizations the governance foundation they need for AI and self-service.

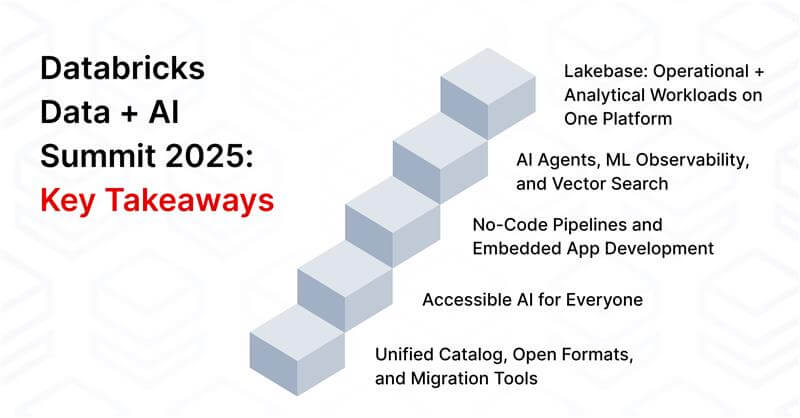

Databricks Data + AI Summit 2025: Key Takeaways

1. Lakebase: Operational + Analytical Workloads on One Platform

Databricks unveiled Lakebase, a Postgres-compatible transactional database engine built for the lakehouse. It lets teams run OLTP-style apps (real-time, high-throughput systems) directly on the same data infrastructure as analytics and AI workloads.

Lakebase aims to keep the openness and cost-efficiency of a lakehouse while adding the reliability of a traditional database, unifying operational and analytical data without duplication.

Why it matters: Lakebase positions Databricks as a single platform for all data workloads, reducing architectural complexity and latency between operational and analytical systems.

2. AI Agents, ML Observability, and Vector Search

With Agent Bricks, Databricks is enabling users to define AI agents by simply describing their tasks and data sources in plain English. The platform auto-generates prompts, test cases, and tuning parameters—reducing ML development overhead.

MLflow 3.0 brings observability to the generative AI world: tracking prompt versions, logging outputs, and monitoring agent performance across tools—even those outside of Databricks.

The platform’s new vector search engine supports retrieval-augmented generation (RAG) at scale, while optimized Model Serving now handles 250K+ queries per second with lower latency, making enterprise-grade AI more performant and production-ready.
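Retrieval-augmented generation hinges on a nearest-neighbor lookup: embed the question, find the most similar stored chunks, and paste them into the prompt. The sketch below shows that loop in plain Python under simplifying assumptions: the three-dimensional "embeddings" are hand-made toy values, and `retrieve` and `build_prompt` are hypothetical helpers, not the Databricks Vector Search API. A production engine would replace the linear scan with an approximate nearest-neighbor index.

```python
import math

# Hypothetical toy corpus: each document key is paired with a hand-made embedding.
# A real system would produce these vectors with an embedding model.
CORPUS = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.1],
    "warranty terms": [0.7, 0.2, 0.3],
}

def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def retrieve(query_vec, k=2):
    """Linear scan: return the k document keys most similar to the query vector."""
    ranked = sorted(CORPUS, key=lambda doc: cosine(query_vec, CORPUS[doc]), reverse=True)
    return ranked[:k]

def build_prompt(question, query_vec, k=2):
    """Augment the user question with retrieved context before it is sent to an LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query_vec, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Usage: a query vector close to "refund policy" (the embedding step is assumed).
print(retrieve([0.85, 0.15, 0.05]))  # ['refund policy', 'warranty terms']
```

Only the top-k documents reach the prompt, which keeps the model grounded in governed data while bounding prompt size.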

Why it matters: Databricks is becoming a foundation for deploying, monitoring, and optimizing LLM-powered agents across real-world use cases.

3. No-Code Pipelines and Embedded App Development

With Lakeflow now generally available (GA) and Lakeflow Designer in private preview, Databricks makes it possible to design ETL workflows visually, for both batch and streaming, without Spark expertise.

Databricks also introduced Databricks Apps, which let teams build embedded dashboards, assistants, or operational tools using Python, SQL, or visual components. These apps run securely inside the Databricks workspace and integrate directly with existing data and ML assets.

Why it matters: Databricks is reducing friction for builders—enabling faster pipeline creation and internal app development for both engineers and analysts.

4. Accessible AI for Everyone

Databricks’ new Free Edition gives students, professionals, and teams a cost-free way to explore the lakehouse: build notebooks, run models, and test dashboards with modest compute limits.

For business users, Databricks One (private preview) and AI/BI Genie (GA) provide intuitive, no-code interfaces. Users can search data, build visualizations, and get AI-generated narratives using natural language—all without writing a single line of code.

Why it matters: Databricks is leaning into AI-powered self-service for analysts, executives, and newcomers—not just technical users.

5. Unified Catalog, Open Formats, and Migration Tools

Databricks expanded Unity Catalog to support more open formats (e.g., Apache Iceberg) and launched Metrics to store centralized KPI definitions. A new Unity Catalog Discover experience curates certified datasets and models into an internal marketplace, complete with AI recommendations.

Databricks Clean Rooms now span multi-cloud and cross-platform use cases. The new Lakebridge migration toolkit automates onboarding from legacy systems—profiling schemas, converting code, and validating outputs.
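The article does not describe how Lakebridge validates outputs. As a generic illustration of the idea, a common reconciliation technique runs the same query on the legacy system and the migrated platform, then compares row counts and an order-insensitive set of per-row hashes. The function names and report fields below are hypothetical and are not part of Lakebridge.

```python
import hashlib

def row_fingerprint(row):
    """SHA-256 of one row; repr() keeps None distinct from the string 'None'."""
    joined = "\x1f".join(repr(v) for v in row)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()

def reconcile(legacy_rows, migrated_rows):
    """Compare two result sets regardless of row order and return a small report."""
    legacy = sorted(row_fingerprint(r) for r in legacy_rows)
    migrated = sorted(row_fingerprint(r) for r in migrated_rows)
    legacy_set, migrated_set = set(legacy), set(migrated)
    return {
        "row_count_match": len(legacy) == len(migrated),
        "content_match": legacy == migrated,
        # Counts of distinct row fingerprints present on only one side.
        "missing_in_migrated": len(legacy_set - migrated_set),
        "unexpected_in_migrated": len(migrated_set - legacy_set),
    }

# Usage: one value changed during migration.
legacy = [(1, "alice", 10.0), (2, "bob", None)]
migrated = [(2, "bob", 0.0), (1, "alice", 10.0)]
print(reconcile(legacy, migrated))
# {'row_count_match': True, 'content_match': False, 'missing_in_migrated': 1, 'unexpected_in_migrated': 1}
```

Because values are hashed via `repr`, engines that change types (for example, `1` versus `1.0`) need a normalization step before comparison.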

Why it matters: Databricks is enabling governed collaboration, open data sharing, and faster modernization while reducing vendor lock-in.

Common Themes and Market Shifts

While Snowflake and Databricks maintain distinct architectural roots, their 2025 roadmaps showed remarkable alignment:

1. Agentic AI is Now Built-In

LLM-powered assistants are no longer bolt-ons—they are deeply integrated across both platforms. From Snowflake Intelligence to Agent Bricks, AI agents now assist with querying, coding, debugging, and insight generation—securely and contextually.

2. Low-Code Development Goes Mainstream

Both platforms emphasized visual tooling: Openflow and Lakeflow Designer streamline data pipeline creation, while native apps and embedded tools reduce the time and code required to deploy internal analytics or AI products.

3. Business User Empowerment is a Core Design Goal

With Databricks One and AI/BI Genie on one side, and Snowflake Intelligence and Semantic Views on the other, both vendors are elevating the role of business users, bridging the gap between technical and non-technical stakeholders through intuitive, governed interfaces.

4. Semantic Layers Are Now Foundational

Snowflake’s Semantic Views and Databricks’ Unity Catalog Metrics both recognize that AI and analytics need a shared vocabulary. Defining metrics, dimensions, and relationships once—and reusing them everywhere—is becoming a best practice for accuracy and trust.
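To make "define once, reuse everywhere" concrete, here is a minimal sketch of a metric registry that compiles every request for a metric from the same definition. It is a hypothetical illustration, not the syntax of Snowflake Semantic Views or Unity Catalog Metrics; the `sales.orders` table, its columns, and the helper names are invented.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Metric:
    """A business metric defined once: name, SQL aggregate expression, source table."""
    name: str
    expression: str
    table: str

# Hypothetical central registry; a dashboard and an AI assistant would both read from it.
METRICS = {
    "net_revenue": Metric("net_revenue", "SUM(amount) - SUM(refunds)", "sales.orders"),
    "order_count": Metric("order_count", "COUNT(DISTINCT order_id)", "sales.orders"),
}

def compile_metric(metric_name, dimensions=()):
    """Render a SQL query for one registered metric, grouped by the given dimensions."""
    m = METRICS[metric_name]
    select_cols = [*dimensions, f"{m.expression} AS {m.name}"]
    sql = f"SELECT {', '.join(select_cols)} FROM {m.table}"
    if dimensions:
        sql += f" GROUP BY {', '.join(dimensions)}"
    return sql

print(compile_metric("net_revenue", ["region"]))
# SELECT region, SUM(amount) - SUM(refunds) AS net_revenue FROM sales.orders GROUP BY region
```

Because every consumer calls the same function, a change to the `net_revenue` definition propagates to all dashboards and assistants at once instead of drifting between tools.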

5. Governance and Observability are Must-Haves

From Horizon and Unity Catalog to AI model observability and pipeline telemetry, both vendors are embedding deep trust, security, and traceability into every layer—critical for scaling AI and self-service responsibly.

Final Thoughts: What This Means for Enterprise Teams

The 2025 summits point to a new normal for data platforms—intelligent, accessible, and accountable. For enterprise teams, this shift comes with a clear action plan:

- Prioritize semantic consistency: Build a strong foundation of shared metrics and lineage so AI and analytics tools deliver consistent results.

- Adopt low-code workflows (with guardrails): Empower analysts and domain experts to self-serve—while maintaining rigorous governance and observability.

- Monitor everything: With data and AI processes becoming more automated, real-time observability is no longer optional—it’s essential.

- Get AI-ready as a team: Platforms are ready. Now the focus must be on education, policies, and responsible deployment strategies.
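"Monitor everything" usually starts with a few automated checks on each pipeline run. The sketch below assumes each batch arrives as a list of Python dicts and checks two common signals, freshness and null rate. The function name, field names, and thresholds are hypothetical and are not taken from Snowflake Trail, Databricks, or DQLabs.

```python
from datetime import datetime, timedelta, timezone

def check_batch(rows, timestamp_field, required_fields, max_age, max_null_rate, now=None):
    """Return human-readable alerts for one batch of records (a list of dicts)."""
    if not rows:
        return ["batch is empty"]
    if now is None:
        now = datetime.now(timezone.utc)
    alerts = []
    # Freshness: the newest record must be younger than max_age.
    newest = max(r[timestamp_field] for r in rows)
    if now - newest > max_age:
        alerts.append(f"stale data: newest record is {now - newest} old")
    # Completeness: share of missing (None or absent) values per required field.
    for field in required_fields:
        null_rate = sum(1 for r in rows if r.get(field) is None) / len(rows)
        if null_rate > max_null_rate:
            alerts.append(f"{field}: null rate {null_rate:.0%} exceeds {max_null_rate:.0%}")
    return alerts

# Usage with a fixed clock so the result is reproducible.
now = datetime(2025, 7, 1, 12, 0, tzinfo=timezone.utc)
batch = [
    {"id": 1, "email": "a@example.com", "updated_at": now - timedelta(hours=30)},
    {"id": 2, "email": None, "updated_at": now - timedelta(hours=26)},
]
for alert in check_batch(batch, "updated_at", ["email"], timedelta(hours=24), 0.10, now=now):
    print(alert)
# stale data: newest record is 1 day, 2:00:00 old
# email: null rate 50% exceeds 10%
```

In practice these alerts would feed whatever alerting or observability tool the team already uses; the point is that checks run on every batch rather than on request.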

Modern platforms like DQLabs can complement Snowflake and Databricks by adding real-time data quality monitoring, metadata management, and AI observability—ensuring teams trust the outputs from increasingly intelligent platforms.

Conclusion

The data landscape is evolving rapidly, but the direction is clear: toward platforms that are AI-native, intuitive, and built on trust. Snowflake and Databricks are leading this transformation. For enterprise data teams, the opportunity—and responsibility—is to turn these powerful innovations into sustained business value.

The future of data isn’t just smart—it’s self-service, secure, and semantic by design.
