Data observability has rapidly become a must-have for data-driven organizations, but simply deploying tools isn’t enough – you need tangible returns. In fact, recent research suggests leading enterprises are realizing 3x–4x ROI from observability investments when they implement them effectively. How can data professionals, data engineers, and CDOs ensure their data observability initiatives deliver maximum value? The key is to pair the right technology with smart strategies. From quick wins and automation to business alignment and cultural change, the following ten approaches will help you optimize your data observability ROI and turn reliable data into a true business asset.

1. Start Small and Focus on High-Impact Wins to Boost ROI

While big goals are important, starting small and focused is key to successful data observability. Begin with a high-impact area—like a critical pipeline or customer funnel—that often faces quality issues. Quick wins, such as catching an error before it hits a dashboard, demonstrate value early and build stakeholder confidence.

A phased rollout keeps the effort manageable and proves ROI at a small scale first. One global manufacturer began by monitoring just a few supply chain feeds and quickly reduced reporting errors. That success paved the way for broader adoption.

The takeaway: pilot with purpose, prove results, and scale gradually. This targeted approach ensures resources are well spent and returns are maximized at every step.

2. Automate Data Monitoring with AI/ML for Maximum ROI

Manual data firefighting is costly and slow. To boost observability ROI, automate monitoring with AI/ML from the start. Modern platforms continuously scan for anomalies, schema changes, and error spikes—catching issues far faster than manual methods. This reduces labor for data teams and prevents costly data errors.

AI-driven observability tools learn baseline patterns and flag subtle deviations, like a sudden drop in a table’s row count. This proactive approach means fewer unnoticed issues and quicker resolution. One global consumer goods company, for example, adopted automated quality checks and significantly shortened issue detection time.
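
As a rough illustration of baseline learning, the sketch below flags a table's latest row count when it deviates sharply from recent history. It uses a plain z-score rather than the ML models a commercial platform would apply, and the function names, sample counts, and threshold of 3 are assumptions made for this example.

```python
from statistics import mean, stdev


def row_count_zscore(history, latest):
    """Return how many standard deviations `latest` sits from the historical mean."""
    if len(history) < 2:
        raise ValueError("need at least two historical row counts")
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat history: any change at all is an infinite deviation.
        return 0.0 if latest == mu else float("inf")
    return (latest - mu) / sigma


def is_anomalous(history, latest, threshold=3.0):
    """Flag the latest row count if it deviates more than `threshold` sigmas."""
    return abs(row_count_zscore(history, latest)) > threshold


# Hypothetical daily row counts for one table.
daily_counts = [1000, 1020, 990, 1010, 1005]
print(is_anomalous(daily_counts, 400))   # sudden drop
print(is_anomalous(daily_counts, 1008))  # normal day
```

A fixed threshold is the simplest choice; seasonal tables usually need a baseline that accounts for weekday or month-end patterns.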

Automation delivers more than speed—it preserves trust and minimizes downtime. By resolving issues before they impact business decisions, you enhance the reliability of your data stack and realize stronger, faster returns on your observability investments.

3. Leverage Metadata and Context to Amplify Observability Value

Data observability delivers more value when paired with metadata and business context. Integrating your observability platform with data catalogs ensures every alert includes vital details—like data owners, definitions, and usage history—helping teams resolve issues faster and more accurately.

For example, linking observability metrics to catalogs such as Atlan or Alation allows users to instantly view data freshness, accuracy scores, and recent issues. This transparency builds trust and encourages informed action. Platforms like DQLabs enhance this by embedding quality scores and alerts into catalog metadata via a semantic layer.

When observability is enriched with business context, insights become more relevant and actionable. It reduces noise, accelerates root cause analysis, and ensures stakeholders can respond effectively. The result: faster resolution, better alignment across teams, and stronger ROI from your observability efforts.

4. Integrate Observability into Your Cloud Data Stack for Higher ROI

To maximize ROI, embed observability across your entire cloud data stack—from ingestion to analytics. Native integration with platforms like Snowflake, Databricks, AWS, or Azure ensures seamless monitoring and issue detection across your architecture.

Observability doesn’t just catch data quality issues; it also helps track usage and optimize query performance. For example, monitoring Snowflake workloads might reveal an inefficient query consuming excessive credits—insight that translates into cost savings.

One global entertainment brand saw value by instrumenting its full data pipeline—from AWS ingestion to Databricks transformations and Snowflake analytics—within a single observability framework. This unified view eliminated blind spots, improved performance, and ensured system reliability.

By tightly integrating observability into your stack, you reduce data incidents, improve cloud resource efficiency, and gain confidence that all components are operating as intended—driving measurable ROI and operational resilience.

5. Adopt a Domain-Centric Approach to Enhance Data Observability ROI

Not all data holds the same value—or the same business impact. To boost observability ROI, take a domain-centric approach by prioritizing monitoring around specific business areas like finance, marketing, or operations. This ensures observability efforts align with what matters most, rather than wasting resources on low-priority data.

In practice, this means setting up dedicated dashboards or alert rules tailored to each domain’s needs. For instance, an insurance company might create separate observability domains for underwriting, claims, and customer data. Each would have its own quality rules—like catching anomalies in risk scores or tracking claim record completeness.
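
One way to picture domain-specific rules is a small rule table keyed by domain, with a completeness check run against each domain's records. The sketch below is only illustrative: the domain names follow the insurance example above, while the field names, thresholds, and function names are hypothetical.

```python
# Hypothetical per-domain rules; field names and thresholds are illustrative only.
DOMAIN_RULES = {
    "claims": {
        "required_fields": ["claim_id", "policy_id", "amount"],
        "min_completeness": 0.95,
    },
    "underwriting": {
        "required_fields": ["policy_id", "risk_score"],
        "min_completeness": 0.99,
    },
}


def completeness(records, required_fields):
    """Fraction of records in which every required field is populated."""
    if not records:
        return 1.0  # nothing to check, so treat the batch as complete
    complete = sum(
        all(rec.get(field) not in (None, "") for field in required_fields)
        for rec in records
    )
    return complete / len(records)


def check_domain(domain, records):
    """Evaluate one domain's records against that domain's own rule."""
    rule = DOMAIN_RULES[domain]
    score = completeness(records, rule["required_fields"])
    return {
        "domain": domain,
        "completeness": score,
        "passed": score >= rule["min_completeness"],
    }
```

Keeping rules in data rather than code lets domain experts adjust thresholds without touching the checking logic.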

This focused strategy reduces alert noise, speeds up resolution, and empowers domain experts to take ownership of their data quality. The result: higher ROI, as observability efforts directly prevent revenue loss, compliance issues, or customer satisfaction problems in the areas where they matter most.

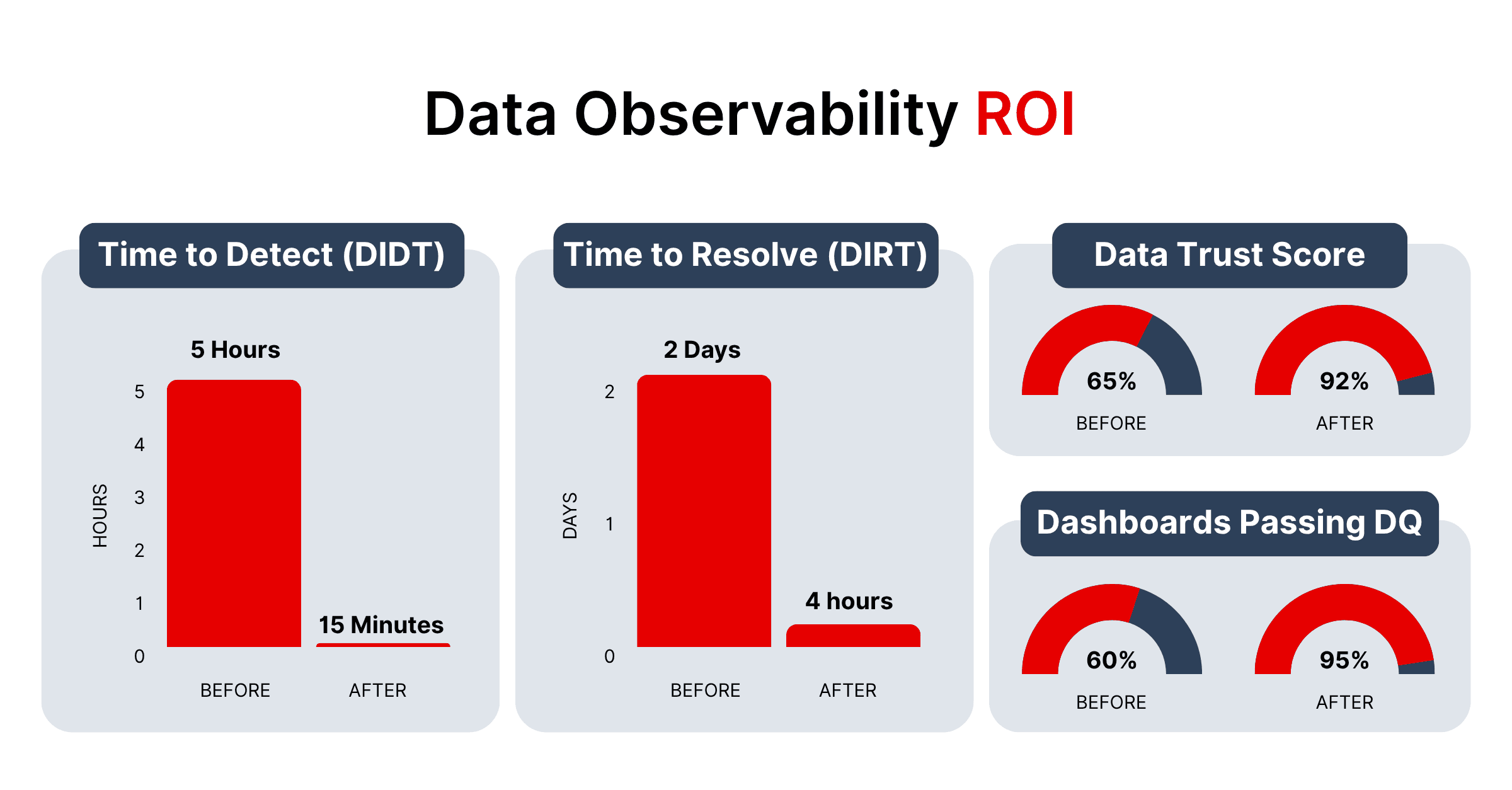

6. Track Data Health KPIs and Demonstrate ROI Improvements

To drive and prove ROI, data observability must be measured. Define clear data health KPIs, such as the number of incidents, data incident detection time (DIDT), data incident resolution time (DIRT), data downtime, or an overall “data trust score.” Tracking these metrics helps showcase improvements and highlight the business value of observability.
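
These KPIs can be derived from a simple incident log. The sketch below assumes each incident records when the problem began, when it was detected, and when it was resolved. It measures time to detect from occurrence to detection, time to resolve from detection to resolution, and downtime as the total from occurrence to resolution. Those definitions and all names here are assumptions for illustration, not a standard.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Incident:
    occurred_at: datetime   # when the data first went bad
    detected_at: datetime   # when monitoring or a user noticed
    resolved_at: datetime   # when the data was trustworthy again


def _hours(delta):
    return delta.total_seconds() / 3600


def health_kpis(incidents):
    """Summarize incident count, mean detect/resolve times, and total downtime."""
    if not incidents:
        return {"incident_count": 0, "mean_time_to_detect_h": 0.0,
                "mean_time_to_resolve_h": 0.0, "data_downtime_h": 0.0}
    n = len(incidents)
    detect = sum(_hours(i.detected_at - i.occurred_at) for i in incidents)
    resolve = sum(_hours(i.resolved_at - i.detected_at) for i in incidents)
    downtime = sum(_hours(i.resolved_at - i.occurred_at) for i in incidents)
    return {
        "incident_count": n,
        "mean_time_to_detect_h": detect / n,
        "mean_time_to_resolve_h": resolve / n,
        "data_downtime_h": downtime,
    }
```

Computing the same figures for the period before and after a rollout gives a like-for-like comparison to put on a dashboard.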

Make these metrics visible. Dashboards can display key trends, such as average data freshness or the percentage of tables passing quality checks. This visibility transforms abstract goals into tangible results.

One global CPG company implemented a “data reliability index” across its analytics datasets. After the observability rollout, the index steadily improved, supporting faster, more confident decisions across marketing and supply chain teams.

By tying improvements to business outcomes like hours saved or errors avoided, teams can clearly demonstrate value to executives. This builds a strong case for ongoing investment and cross-functional support in scaling data quality efforts.
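
To make the arithmetic concrete, the sketch below converts hours saved and incidents avoided into a monetary benefit and compares it with the annual cost of the observability program. Every input is a hypothetical figure you would replace with your own, and the function name is an assumption. It reports both a return multiple (benefit divided by cost) and a net ROI percentage, since "3x ROI" is used loosely for either.

```python
def observability_roi(hours_saved, hourly_rate, incidents_avoided,
                      cost_per_incident, annual_cost):
    """Estimate return on an observability program from simple inputs."""
    if annual_cost <= 0:
        raise ValueError("annual_cost must be positive")
    benefit = hours_saved * hourly_rate + incidents_avoided * cost_per_incident
    net_gain = benefit - annual_cost
    return {
        "benefit": benefit,
        "net_gain": net_gain,
        "return_multiple": benefit / annual_cost,    # e.g. 3.0 means "3x"
        "roi_pct": net_gain / annual_cost * 100,     # net gain relative to cost
    }


# Hypothetical inputs: 1,250 engineer hours saved at $80/hour,
# 10 avoided incidents at $20,000 each, and $100,000 in annual program cost.
summary = observability_roi(1250, 80, 10, 20_000, 100_000)
print(summary)
```

Stating which of the two measures you are quoting avoids confusion when the number reaches executives.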

7. Align Data Observability with Business Objectives for Greater ROI

To maximize ROI, align observability with business goals—not just technical metrics. Focus on data assets that directly impact revenue, cost, or risk. This ensures reliability improvements translate into real business value.

Work with leaders like CDOs and product owners to define what “reliable data” means for them—be it reducing support issues or accelerating financial closes. One global brand tied reduced error rates to better delivery performance, making value clear to executives.

When observability improves a dashboard a VP relies on daily, it drives better decisions. Speak the business language—link observability to outcomes like savings, growth, or speed for maximum impact.

8. Use End-to-End Data Lineage to Accelerate Issue Resolution (and ROI)

In complex data environments, a single flawed dataset can impact dozens of downstream systems. End-to-end data lineage helps minimize that risk and accelerate ROI by mapping data flows from source to destination—making it easier to trace and resolve root causes.

Ensure your observability platform includes automated lineage and impact analysis. When a table triggers a data alert, lineage reveals all affected dashboards, models, or reports—enabling faster, more precise fixes.
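
Impact analysis of this kind amounts to a graph traversal. The sketch below assumes lineage is available as a mapping from each asset to its direct downstream consumers, and walks it breadth-first to list everything affected by an alert on one table. The asset names and the `LINEAGE` structure are hypothetical; real platforms derive this graph automatically from query logs or pipeline metadata.

```python
from collections import deque

# Hypothetical lineage: each asset maps to its direct downstream consumers.
LINEAGE = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["marts.revenue", "marts.customer_ltv"],
    "marts.revenue": ["dashboard.exec_kpis"],
    "marts.customer_ltv": ["model.churn"],
}


def downstream_assets(lineage, start):
    """Return every asset reachable downstream of `start`, sorted by name."""
    seen = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, ()):
            if child not in seen and child != start:
                seen.add(child)
                queue.append(child)
    return sorted(seen)


# Which dashboards, models, and tables are affected by an alert on staging.orders?
print(downstream_assets(LINEAGE, "staging.orders"))
```

Running the same traversal over a reversed graph gives the upstream candidates for root cause analysis.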

Pharma companies, for example, use lineage to quickly trace clinical data issues to specific source systems. This reduces downtime, ensures compliance, and lowers infrastructure costs—turning days of investigation into minutes.

9. Minimize Data Downtime with Proactive Alerts and Remediation

Data downtime—when data is missing, incorrect, or delayed—quietly drains productivity and leads to poor decisions. Reducing it should be a key observability goal.

Proactive alerts and rapid remediation help catch issues early. Alerts should integrate with existing tools like Slack or email and clearly signal severity. Advanced platforms use machine learning to reduce noise and flag only what matters.

Automation adds resilience. Failed pipelines can auto-retry or pull from backups. Some companies build self-healing systems that isolate bad data and restore the last good state.
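
A minimal sketch of the retry-then-fallback pattern follows. It assumes the pipeline step is a plain callable that raises on failure and that a fallback callable can return the last good state. The function name, retry count, and delays are illustrative choices, not the behavior of any particular orchestrator.

```python
import time


def run_with_retry(task, fallback=None, retries=3, base_delay=1.0, sleep=time.sleep):
    """Run `task`, retrying with exponential backoff, then fall back if given."""
    if retries < 1:
        raise ValueError("retries must be at least 1")
    last_error = None
    for attempt in range(retries):
        try:
            return task()
        except Exception as exc:
            last_error = exc
            if attempt < retries - 1:
                # Wait 1x, 2x, 4x ... the base delay between attempts.
                sleep(base_delay * 2 ** attempt)
    if fallback is not None:
        # All attempts failed: serve the last known good data instead.
        return fallback()
    raise last_error
```

For example, `run_with_retry(load_orders, fallback=read_last_snapshot)` would try a hypothetical `load_orders` step three times before serving the snapshot. Passing `sleep` as a parameter keeps the function easy to test without real delays.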

One global manufacturer, for instance, uses schema change detection and instant alerts to catch problems before they affect production. The result: less downtime, stronger decisions, and higher ROI.

10. Foster a Data Quality Culture and Integrate Observability into Workflows

One of the most impactful ways to drive long-term ROI is by embedding observability into your team’s culture and workflows. When data quality becomes a shared responsibility across engineers, analysts, and business users, results become more consistent and sustainable.

Encourage a culture where clean data is valued and issues are treated as opportunities to improve, not occasions for blame. Observability should feel natural in daily work, not like an add-on.

Integrate data alerts into tools your teams already use, like Jira or ServiceNow. Display quality indicators within BI dashboards and push metrics into catalogs and governance tools for broad visibility.

Platforms like DQLabs make insights accessible beyond IT by integrating with tools such as Data.World or DataGalaxy. Leading organizations go further—embedding observability checks into deployment pipelines and holding regular cross-functional reviews.

When observability is part of how your teams operate, not just what they use, trust in data grows, adoption increases, and your observability investment delivers compounding business value.

Conclusion

Investing in data observability is one of the smartest moves for any data-driven organization—but unlocking its full ROI takes more than just tools; it requires strategy. By starting with focused wins, embracing automation and metadata, integrating observability into your stack, aligning with business goals, and nurturing a culture of quality, you transform observability from a cost center into a powerful value driver. When done right, data observability delivers not only technical improvements but also real business gains.

Now it’s your turn. Apply these ten strategies to unlock the true value of your data. Ready to accelerate your journey and see results? Start optimizing your data observability approach today—and if you want expert help, consider reaching out to DQLabs for a personalized demo. It’s time to turn your data into ROI and use observability as a catalyst for growth.