In today’s data-driven world, protecting sensitive information is paramount. High-profile data breaches and stricter privacy regulations have put organizations on alert, but how can companies still leverage data for innovation while keeping it secure? This is where data obfuscation comes in. Data obfuscation allows businesses to use real-looking information for development, testing, and analytics without exposing actual sensitive data. In simple terms, it’s about hiding or disguising data so that unauthorized users can’t make sense of it, yet authorized teams can still do their jobs. In this comprehensive blog, we’ll explain what data obfuscation means, discuss why it’s so important (especially for compliance with regulations like GDPR and HIPAA), and explore common methods, challenges, and best practices.

What is Data Obfuscation?

Data obfuscation is the process of deliberately transforming or masking data to make it unreadable or meaningless to anyone who doesn’t have authorization, while keeping it usable for those who do. In other words, it’s a technique to hide sensitive information by replacing or altering it, so that the real data is protected from prying eyes. Unlike simply encrypting data (which locks it completely until decrypted), data obfuscation often allows data to remain partially usable for things like software testing, analytics, or troubleshooting. The goal is to strike a balance: sensitive details (like personal identifiers, financial information, etc.) are shielded, but the data’s format and realism are preserved enough to serve its purpose in non-production environments.

Think of data obfuscation as creating a decoy version of your data. For example, a database of customer records might have real names, addresses, and credit card numbers. By obfuscating that data, you could swap out those real identifiers with fake but realistic ones – “John Doe at 123 Maple Street” might become “Jake Smith at 456 Oak Ave.” To anyone trying to misuse the data, it’s meaningless. But to a software developer or data analyst, the data still looks and behaves like real customer information, enabling them to work with it without risking privacy.

It’s important to note that “data obfuscation” is an umbrella term encompassing a range of techniques (including data masking, encryption, tokenization, etc., which we’ll cover shortly). Some forms of obfuscation are reversible (you can get the original data back with the right key or process, as in decryption) and others are irreversible (once obfuscated, the original values cannot be restored, as in most masking scenarios). Ultimately, all these methods serve one purpose: protect sensitive data from unauthorized access while maintaining its usefulness for legitimate operations.

Types of Data Obfuscation

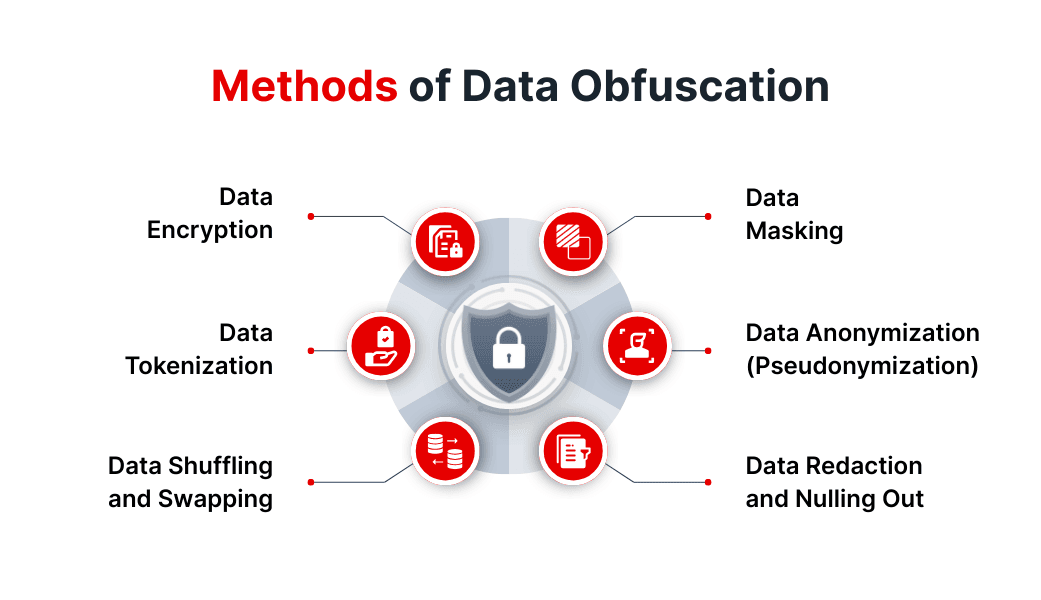

Data obfuscation can take many forms depending on how the data is transformed. Broadly, we can categorize data obfuscation techniques into a few types:

- Reversible vs. Irreversible Obfuscation: Some data obfuscation methods allow you to reconstruct the original data if needed (for example, decrypting encrypted data with a key or mapping a token back to the original value). Other methods permanently alter or strip out identifying details, so the process cannot be undone (for example, masking or anonymization). Both types are useful in different scenarios – reversible methods are handy when you may need to recover the original data under controlled conditions, whereas irreversible methods provide stronger privacy by ensuring the original sensitive values are never exposed even if someone has the obfuscated dataset.

- Static vs. Dynamic Obfuscation: In static data obfuscation methods (often called static data masking), a copy of the database or dataset is made and all sensitive data in that copy is obfuscated. This safe version can then be used in testing or shared externally. Dynamic obfuscation, on the other hand, happens on-the-fly: data is masked or transformed in real time as users access it, based on their permissions. Dynamic obfuscation (such as dynamic data masking in databases) ensures that anyone without sufficient clearance sees only obfuscated values, while authorized users can still see actual data. This is useful for production environments where you want extra protection for certain fields when viewed by certain roles.

- By Technique or Approach: We often refer to types of data obfuscation by the specific technique used. Common data obfuscation techniques include data masking, encryption, tokenization, pseudonymization/anonymization, shuffling/swapping, scrambling, and more. Each of these methods achieves obfuscation in a slightly different way and is suited to different use cases. The Methods of Data Obfuscation section below breaks down the most common methods and how they work.
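To make the static versus dynamic distinction concrete, here is a minimal Python sketch. The sample records, the `compliance_officer` role, and the function names are all hypothetical, chosen only for illustration; real systems would apply these rules inside the database or a masking tool.

```python
import copy

# Hypothetical sample data and role names, for illustration only.
CUSTOMERS = [
    {"id": 1, "name": "John Doe", "ssn": "123-45-6789"},
    {"id": 2, "name": "Ana Lopez", "ssn": "987-65-4321"},
]
PRIVILEGED_ROLES = {"compliance_officer"}


def mask_ssn(ssn: str) -> str:
    """Hide all but the last four characters of an SSN-style string."""
    return "***-**-" + ssn[-4:]


def static_obfuscate(rows):
    """Static: build a separate, permanently masked copy of the dataset."""
    masked = copy.deepcopy(rows)
    for row in masked:
        row["ssn"] = mask_ssn(row["ssn"])
    return masked


def dynamic_read(rows, role: str):
    """Dynamic: mask at read time, depending on the caller's role."""
    if role in PRIVILEGED_ROLES:
        return [dict(row) for row in rows]
    return [{**row, "ssn": mask_ssn(row["ssn"])} for row in rows]
```

In this sketch the static copy can be handed to a test team because it never contains the real values, while the dynamic path leaves the source untouched and decides per request what each role may see.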

By understanding the different types and categories of data obfuscation, organizations can choose the approach (or combination of approaches) that best fits their needs. Often, the right solution involves multiple techniques: for example, using encryption for data in transit or at rest, but using masking or tokenization for data in use in lower environments. Before exploring the methods in detail, let’s look at why obfuscation matters.

Why Is Data Obfuscation Important?

Hiding sensitive data may seem like extra effort, but it’s now essential for any organization handling personal or confidential information. Here’s why:

Protecting Sensitive Data and Preventing Breaches

Obfuscation adds a vital layer of defense. If attackers gain access to a masked test database, they only see dummy data, not real customer details. This reduces the risk and impact of breaches—whether external or internal. With breach costs running high, obfuscation is a smart way to minimize damage.

Meeting Regulatory Compliance

Privacy laws like GDPR, HIPAA, and CCPA require organizations to safeguard personal data. Techniques like pseudonymization and de-identification—core to obfuscation—are often recommended or required. Obfuscation helps companies stay compliant and avoid fines by ensuring personal data isn’t exposed unnecessarily.

Enabling Safe Data Sharing

Many teams, from developers to analysts, need access to data but not the sensitive parts. Obfuscation makes it possible to share realistic datasets internally or with partners, while keeping personal details private. This supports testing, analytics, and collaboration without compromising privacy.

Maintaining Customer Trust

Customers expect their data to be protected. Obfuscation shows a clear commitment to privacy and helps preserve trust—even in the event of a breach. Anonymized data is less risky, and being able to say exposed data was masked can protect your reputation.

Supporting Data Governance and Quality

Obfuscation helps identify and classify sensitive data, a key step in data governance. It also enables teams to run quality checks without exposing private information. This balance of protection and usability supports better data oversight and aligns with privacy-by-design principles.

In short, data obfuscation protects sensitive data, supports compliance, enables safe sharing, reassures customers, and strengthens governance. It’s a foundational practice in today’s data-driven world.

Methods of Data Obfuscation

There are several ways to obscure sensitive information so it’s unreadable or unusable without proper access. Each method has its own strengths and is best suited for different situations. Here’s a breakdown of the most common techniques used to obfuscate data:

1. Data Encryption

Encryption is one of the strongest and most commonly used obfuscation techniques. It works by converting readable information, known as plaintext, into an unreadable format called ciphertext, using a mathematical algorithm and a key. For instance, a name like “Alice” might be transformed into something completely unintelligible like “Qx7$1Bz…”. Without the decryption key, recovering the original value is computationally infeasible, provided a strong, modern algorithm is used.

It’s used extensively to protect sensitive data both while it’s being transmitted (like during online banking or messaging) and while it’s stored. Even if someone manages to steal encrypted data, it’s essentially useless unless they also have the key.

That said, encryption isn’t always practical for every use case. Since encrypted data can’t usually be searched or analyzed without first decrypting it, it may not work well for things like analytics or reporting. It also comes with key management challenges—if the key is lost, so is access to the data; if the key is compromised, the data is too.

Still, encryption is a cornerstone of secure data handling—think HTTPS in your browser or encrypted files on your laptop. It’s especially important when maximum protection is required.

2. Data Masking

Masking replaces real data with fake but believable values. The structure stays the same, but the sensitive parts are swapped out. For example, a real credit card number like 4539 4512 7894 6521 might be replaced with something like 5103 2945 1234 9876. It still looks like a credit card number, and masking tools can generate values that also pass validation checks such as the Luhn checksum, but it doesn’t belong to anyone.

Data masking is widely used for generating test or demo data. It allows teams to work with data that behaves like the real thing—same formats, same lengths—but doesn’t expose private information.

There are a few different types of masking. Static masking creates a new, masked copy of a dataset for use in non-production environments. Dynamic masking applies rules in real time, hiding sensitive parts when someone without the right permissions tries to access the data.

It’s also worth noting that masking is typically one-way. Once the original values are replaced, they can’t be retrieved. That’s by design—it ensures privacy even if the data gets out. Common techniques include substitution, shuffling, character scrambling, and partial redaction (like showing just the last four digits of an ID). Done well, masking strikes a balance between privacy and usability.
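Two of the masking techniques mentioned above, substitution and partial redaction, can be sketched in a few lines of Python. This is an illustrative sketch rather than a production masking tool; the function names are made up for this example, and the Luhn checksum is used as one example of a validation check that a substituted card number may need to pass.

```python
import random


def luhn_check_digit(partial: str) -> str:
    """Compute the Luhn check digit for a digit string that lacks its check digit."""
    total = 0
    # Walk right to left; the rightmost digit of `partial` is doubled first.
    for i, ch in enumerate(reversed(partial)):
        d = int(ch)
        if i % 2 == 0:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return str((10 - total % 10) % 10)


def luhn_valid(number: str) -> bool:
    """Return True if the number (spaces allowed) passes the Luhn check."""
    digits = number.replace(" ", "")
    return luhn_check_digit(digits[:-1]) == digits[-1]


def substitute_card(rng: random.Random) -> str:
    """Substitution: generate a random 16-digit number that passes the Luhn check."""
    body = "".join(str(rng.randrange(10)) for _ in range(15))
    digits = body + luhn_check_digit(body)
    return " ".join(digits[i:i + 4] for i in range(0, 16, 4))


def partial_redact(card: str) -> str:
    """Partial redaction: keep the last four digits, replace the rest with X."""
    digits_seen = 0
    out = []
    for ch in reversed(card):
        if ch.isdigit():
            digits_seen += 1
            out.append(ch if digits_seen <= 4 else "X")
        else:
            out.append(ch)  # keep spaces so the format is preserved
    return "".join(reversed(out))
```

For example, `partial_redact("4539 4512 7894 6521")` returns `"XXXX XXXX XXXX 6521"`, and `substitute_card(random.Random())` yields a differently valued number in the same four-by-four layout. Neither function keeps a mapping back to the original, which is what makes this style of masking one-way.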

3. Data Tokenization

Tokenization replaces sensitive values with randomly generated stand-ins—tokens—that hold no meaning on their own. These tokens are stored alongside a secure mapping system (a token vault), so systems that need access to the real values can retrieve them as needed.

Let’s say a customer’s credit card number is replaced with a token like AZXQ-1135-XY89-77DK. The token doesn’t reveal anything about the original number and can’t be reverse-engineered. But when a payment needs to be processed, the system can use the token to look up the original card in the secure vault.

Tokenization is common in industries like finance and healthcare, where sensitive data is handled regularly but doesn’t always need to be exposed. It reduces the number of systems that come in contact with real data, lowering the risk surface dramatically.

Unlike encryption, vault-based tokenization doesn’t derive the token mathematically from the original value; its security depends on keeping the mapping database secure. If implemented correctly, it’s nearly impossible to reverse-engineer a token without access to the vault. It can also be faster in many real-time use cases, making it a good fit for things like checkout flows or API responses where performance matters.
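The vault idea can be illustrated with a small in-memory Python sketch. The `TokenVault` class is hypothetical and stands in for what would, in practice, be a hardened and access-controlled service; tokens here are random hex strings from the standard `secrets` module, so they carry no information about the original value.

```python
import secrets


class TokenVault:
    """In-memory stand-in for a token vault (illustrative only)."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        """Return a random token for `value`, reusing an existing token if present."""
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = secrets.token_hex(8)
        while token in self._token_to_value:  # collision is extremely unlikely
            token = secrets.token_hex(8)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        """Look up the original value; only code with vault access can do this."""
        return self._token_to_value[token]
```

Downstream systems would store and pass around only the token, and only the payment step would call `detokenize`. Reusing the same token for a repeated value is a design choice made here so that records stay linkable; some deployments may prefer a fresh token each time.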

4. Data Anonymization and Pseudonymization

Anonymization is about stripping away anything that could identify an individual. This means removing or generalizing personal identifiers—names, IDs, birth dates, and sometimes even specific combinations of data points that could be used to trace back to someone.

Instead of a birthdate, a dataset might include just an age bracket. Names might be removed altogether, or replaced with codes. Exact addresses could be turned into general regions. The idea is to make it impossible (or extremely difficult) to tie the data back to a specific person.

There’s also pseudonymization, where identifiers are replaced with artificial ones (pseudonyms), and the mapping is stored separately. Under regulations like GDPR, pseudonymized data is still considered personal data (since re-identification is technically possible), but it’s much safer to handle.

True anonymization, on the other hand, is irreversible. Once identifiers are scrubbed out, there’s no way back. That makes it especially useful in research or product development, where data is needed for analysis but individual identities don’t need to be known. Regulations like HIPAA outline specific de-identification methods for healthcare data (Safe Harbor, which removes a defined list of identifiers, and Expert Determination), making it possible to share valuable insights without compromising privacy.
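As a rough illustration of the generalization step described above (birthdate to age bracket, address to region, names dropped), consider the following Python sketch. The record layout and field names are assumptions made for this example, and generalization alone does not guarantee anonymity, since rare combinations of the remaining fields can still single someone out.

```python
from datetime import date


def age_bracket(birthdate: date, today: date, width: int = 10) -> str:
    """Replace an exact birthdate with an age range such as '30-39'."""
    age = today.year - birthdate.year - (
        (today.month, today.day) < (birthdate.month, birthdate.day)
    )
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def generalize_record(record: dict, today: date) -> dict:
    """Drop direct identifiers (name, street) and coarsen quasi-identifiers."""
    return {
        "age_bracket": age_bracket(record["birthdate"], today),
        "region": record["state"],  # keep only the state, not the street address
        "diagnosis": record["diagnosis"],
    }
```

The output record contains no name or street and no exact birthdate, yet it still supports analysis by age group and region.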

5. Data Shuffling and Swapping

Shuffling is a straightforward method: you rearrange data values within a column so that while each value remains valid, it’s now linked to a different individual. For example, you might randomly reassign salaries among employees. The overall salary distribution stays intact, but nobody’s salary is accurately represented.

This kind of obfuscation is useful when you want to preserve the realism of the data without exposing actual relationships. It can be applied to various fields—birthdates, locations, customer IDs—especially when the values are interchangeable.

Swapping is often combined with other techniques like masking or redaction as part of a layered approach to obfuscation. While simple, it can be surprisingly effective at breaking associations that could otherwise lead to re-identification.
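A minimal Python sketch of column shuffling, using the salary example above, might look like this. The row format (a list of dictionaries) and the function name are assumptions for illustration.

```python
import random


def shuffle_column(rows, column, seed=None):
    """Return new rows with the values of `column` randomly reassigned across rows."""
    rng = random.Random(seed)
    values = [row[column] for row in rows]
    rng.shuffle(values)
    # Build new dictionaries so the original rows are left unmodified.
    return [{**row, column: value} for row, value in zip(rows, values)]
```

The set of salaries, and therefore the overall distribution, is unchanged; only the link between a salary and a person is broken. Note that a random shuffle can leave some values in their original position, especially in small datasets, so shuffling is usually not relied on alone.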

6. Data Redaction and Nulling Out

Redaction is a blunt but effective technique—you simply remove or blank out sensitive data. In datasets, this could mean deleting a field entirely (like Social Security Numbers) or replacing values with nulls or placeholders like “[REDACTED].” Since the data is gone, there’s no risk of exposure—but also no way to use it.

This method works well when certain data is too sensitive or not needed. For example, you might redact email or IP addresses in log files shared with engineering teams, or remove customer names from datasets sent to vendors. Redaction is irreversible and supports policies like “data minimization”—if a field isn’t needed, why keep it?

The tradeoff is that too much redaction can limit data utility. That’s why many teams combine redaction with other techniques—like removing direct identifiers and masking the rest. Redaction is also common in free-text fields, using pattern matching to strip out things like credit card numbers from support tickets. It’s simple, effective, and a smart way to reduce risk without much overhead.
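Pattern-based redaction of free text can be sketched with Python’s `re` module. The two regular expressions below are deliberately simple and illustrative; they will miss some formats and may flag other long digit sequences, so a real deployment would need a broader and better-tested set of detectors.

```python
import re

# Deliberately simple, illustrative patterns; real detectors need broader coverage.
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")  # 13 to 19 digits, optional separators
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def redact_text(text: str) -> str:
    """Replace card-like numbers and email addresses with placeholders."""
    text = CARD_RE.sub("[REDACTED CARD]", text)
    return EMAIL_RE.sub("[REDACTED EMAIL]", text)
```

Applied to a support ticket such as "My card 4539 4512 7894 6521 was declined, reach me at jane@example.com.", this leaves the sentence readable while replacing both sensitive values with placeholders.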

These methods are not mutually exclusive. In fact, a strong data obfuscation strategy often layers multiple techniques. For example, an approach to protecting a database might involve encrypting the entire database at rest (so if someone gets the files, they’re gibberish), masking or tokenizing particularly sensitive columns within the database (so even if a user query accesses them, they see no real data), and redacting or anonymizing data in any extracts or reports that leave the database. By combining methods, you cover for each method’s limitations and maximize security. The key is to choose the right methods for the right scenario: consider the sensitivity of the data, how it’s going to be used after obfuscation, and who (if anyone) might need to retrieve the original values.

Challenges of Data Obfuscation

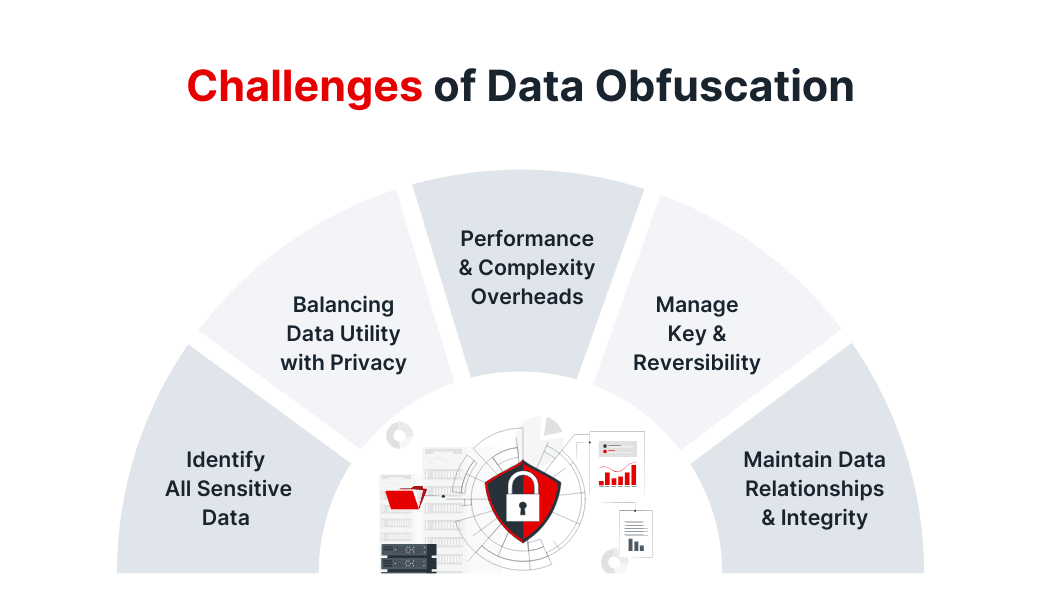

Implementing data obfuscation isn’t as easy as flipping a switch—it brings its own set of challenges and trade-offs. Below are some of the most common hurdles organizations run into, along with ways to manage them.

1. Identifying All Sensitive Data

The first roadblock is often just figuring out what needs to be obfuscated and where it’s hiding. In most organizations, data is scattered—across databases, files, applications, and cloud platforms. And sensitive information doesn’t always sit in neatly labeled fields. You might find personal details tucked away in places like free-text “Comments” fields or miscellaneous metadata. Overlooking one of these can create a privacy gap.

To get this right, you need thorough data discovery and classification. That often starts as a manual process, but AI-powered tools like DQLabs can help automate the grunt work. By analyzing metadata and content, they flag fields that likely contain PII or PHI. Even with automation, though, maintaining a current inventory of sensitive data is something that requires ongoing attention.

2. Balancing Data Utility with Privacy

There’s a delicate balancing act between protecting sensitive data and keeping it usable. Mask too much, and you risk breaking downstream processes or producing test data that’s too artificial to be useful. Mask too little, and privacy exposure creeps back in.

Getting this right usually depends on the context. You might encrypt certain fields—say, bank account numbers—because they’re not needed in most use cases. For less sensitive data, partial masking or format-preserving obfuscation might be enough. The point is, obfuscation isn’t one-size-fits-all. It requires collaboration between governance teams and end users to make sure the data still supports its intended purpose. Some experimentation is often part of the process.

3. Performance and Complexity Overheads

Obfuscation doesn’t come free—it adds overhead, both technically and operationally. Encryption slows down read/write operations. Dynamic data masking introduces latency. Tokenization might need extra infrastructure like a token server. All of this can affect system performance, especially in environments where data flows constantly and at scale.

There’s also the complexity of integrating obfuscation into existing tools and workflows. Compatibility issues can pop up. Encryption keys need to be stored and rotated securely. Masking rules must evolve as schemas change. None of it is trivial. The good news is that many modern databases and platforms now support native obfuscation features, such as transparent data encryption or column-level dynamic masking, which can ease some of the burden if used wisely.

4. Reversibility and Key Management

Reversible methods like encryption and tokenization come with a critical dependency: secure key management. If the keys aren’t protected, the entire obfuscation effort can fall apart. Unfortunately, it’s not uncommon to see keys hardcoded into scripts or config files—a big no-no.

Good practices here include using dedicated key management systems, rotating keys regularly, and enforcing strict access controls. You also need to decide whether reversibility is even necessary. In some cases, it’s better to use irreversible techniques like hashing or redaction to eliminate that risk entirely. But if reversibility is required—say, for support workflows—then key management becomes non-negotiable.
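As a small illustration of keeping keys out of scripts and config files, the Python sketch below reads a key from an environment variable and fails loudly if it is missing. The variable name `OBFUSCATION_KEY` and the hex encoding are assumptions for this example; in practice the value would typically be injected by a dedicated key management system rather than set by hand.

```python
import os


def load_key(env_var: str = "OBFUSCATION_KEY") -> bytes:
    """Read a hex-encoded secret key from the environment instead of hardcoding it."""
    value = os.environ.get(env_var)
    if not value:
        # Refuse to continue rather than silently falling back to a default key.
        raise RuntimeError(f"{env_var} is not set")
    return bytes.fromhex(value)
```

Failing when the key is absent is a deliberate choice: a silent fallback to a built-in default would reintroduce the hardcoded-key problem.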

5. Maintaining Data Relationships and Integrity

Obfuscating data while preserving its integrity is harder than it looks. Let’s say you mask a customer ID in one table but forget to do it the same way in another—now your join logic is broken. Consistency is key, especially when working with relational data or pipelines that rely on linked records.

Format matters too. If an application expects a social security number in a certain pattern, your obfuscation method needs to output something similar. Otherwise, you risk system errors or rejected inputs. Even statistical properties might need preserving—for example, keeping outlier distributions intact for testing logic.

This is where data validation steps in. Running profiling and quality checks after obfuscation helps ensure you didn’t break anything. Done right, obfuscation not only protects sensitive data but supports data quality by enforcing rigor and structure in how it’s handled.
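One common way to keep joins intact is deterministic masking, where the same input always maps to the same output. The Python sketch below uses a keyed hash (HMAC-SHA256 from the standard library) for this; the table layout, the `C` prefix, and the 12-character truncation are assumptions for illustration, and a truncated digest can collide on very large datasets.

```python
import hashlib
import hmac


def pseudonymize_id(customer_id: str, key: bytes) -> str:
    """Map an ID to a stable pseudonym using a keyed hash (HMAC-SHA256)."""
    digest = hmac.new(key, customer_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return "C" + digest[:12]


def mask_tables(customers, orders, key):
    """Apply the same mapping to both tables so joins on customer_id still work."""
    masked_customers = [
        {**c, "customer_id": pseudonymize_id(c["customer_id"], key)} for c in customers
    ]
    masked_orders = [
        {**o, "customer_id": pseudonymize_id(o["customer_id"], key)} for o in orders
    ]
    return masked_customers, masked_orders
```

Because both tables pass through the same function with the same key, an order still joins to its customer after masking. The key must be protected like any other secret, since anyone holding it can recompute the mapping for guessed IDs.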

Best Practices for Implementing Data Obfuscation

Successfully implementing data obfuscation takes more than picking a technique or tool—it needs to be part of your broader data security and governance efforts. Here are some best practices to guide the process:

1. Know Your Data and Privacy Requirements

Start by understanding what data you have, where it’s stored, and its sensitivity. Use data discovery and classification to identify personal or confidential information, and review applicable regulations like GDPR, HIPAA, or PCI DSS. These rules may influence how you obfuscate: GDPR, for example, favors pseudonymization, while PCI DSS requires masking card numbers when they are displayed.

If you don’t already have a data classification policy, create one. Label data as public, internal, or sensitive, and define corresponding obfuscation rules. The more clearly you understand your data and obligations, the more effective your obfuscation efforts will be.

2. Choose the Right Techniques for the Right Data

Obfuscation isn’t one-size-fits-all. Techniques should match the context and sensitivity of the data. Highly sensitive data like Social Security numbers or health records may require irreversible methods like masking or anonymization. If you need data to retain some realism (e.g., email formats for testing), consider using dummy data or controlled domains.

If analytics is the goal, obfuscation should preserve data’s statistical integrity—shuffling or format-preserving masking can help. For data that needs to be reversible later, use encryption or tokenization with tight access controls. Ultimately, tailor your methods field by field, and document your choices in a policy so teams stay consistent.

3. Favor Irreversible Methods When Possible

Where feasible, use one-way obfuscation like masking, hashing, or anonymization. If the data can’t be re-identified, the security risk drops dramatically. Regulators also tend to favor irreversible methods—under GDPR, anonymized data is no longer considered personal data, easing compliance.

Of course, sometimes reversibility is needed for business reasons. But ask yourself: do we really need the original data in this environment? If not, mask and move on. If you do use reversible methods, make sure keys and access controls are tightly managed. When in doubt, default to irreversible.

4. Implement Consistency and Scalability

Obfuscation should be consistent and repeatable. If the same value is masked today and again tomorrow, the result should be the same; this is especially important across environments or systems. Use centralized services or consistent algorithms to avoid mismatches that can break downstream processes.

Scalability matters too. As your data grows and systems evolve, obfuscation methods should adapt without requiring complete overhauls. Automate where possible—build obfuscation into ETL pipelines, use scheduling, and support new data sources. Treat obfuscation as a standard operational step in your data workflows.

5. Leverage Automation and Modern Tools

Manual obfuscation doesn’t scale. Use tools and platforms that support automated masking, tokenization, and encryption. Many database systems let you define masking policies that apply automatically during queries. There are also purpose-built tools that apply complex rules across sources on a schedule.

AI-based platforms can help too. DQLabs, for instance, supports semantic discovery of sensitive data and integrates with broader data governance workflows. Pairing this with your obfuscation tool gives you an end-to-end setup—from discovery to masking—with minimal manual effort. Automation ensures consistency, reduces exposure time, and supports real-time or on-demand use cases.

6. Monitor, Audit, and Refine

Obfuscation isn’t “set it and forget it.” Regularly audit your processes to ensure no sensitive data is slipping through. Scan masked datasets to validate effectiveness, and monitor who accesses any reversible data or keys. This kind of oversight ties directly into data observability.
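Scanning a masked dataset for leftovers can be as simple as running a few detectors over every value. The Python sketch below is a minimal, assumption-laden example: the two patterns (email and US SSN format) and the row format are illustrative, and a real audit would use a much fuller detector set.

```python
import re

# Illustrative patterns only; a real scan would use a fuller detector set.
LEAK_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def scan_for_leaks(rows):
    """Return (row_index, column, pattern_name) for each value that still looks sensitive."""
    findings = []
    for i, row in enumerate(rows):
        for column, value in row.items():
            for name, pattern in LEAK_PATTERNS.items():
                if pattern.search(str(value)):
                    findings.append((i, column, name))
    return findings
```

A scan like this tends to catch the cases that column-level masking rules miss, such as an SSN typed into a free-text notes field. An empty result is a useful signal but not proof that the dataset is clean.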

It’s also smart to gather feedback from users—developers, analysts, QA teams. If masked data is breaking things or isn’t usable, adjust your methods. You might find you’re masking more (or less) than needed, or that certain formats need tweaking.

Stay current on emerging techniques like differential privacy or advanced tokenization approaches. Even if you don’t adopt them right away, being aware keeps your strategy future-ready. And if you’re in a regulated industry, be sure to document everything—from methods to audit trails—so you can show compliance when needed.

Conclusion

Data obfuscation has evolved from a niche practice to a vital element of modern data management. As organizations collect and use more data, the need to protect sensitive information becomes critical. Techniques like masking, encryption, and tokenization help de-identify and secure data—supporting security, compliance, and governance efforts.

By applying obfuscation, teams can safely use real-world data for development, testing, and analysis without risking privacy. It supports compliance with regulations such as GDPR and HIPAA, helps prevent costly breaches, and builds trust with customers. But obfuscation shouldn’t stand alone—it works best when integrated into a broader data strategy that includes automation, quality checks, and continuous improvement.

For data professionals, the takeaway is clear: obfuscation isn’t just an IT task—it’s a smart business move. By following best practices and leveraging the right tools (possibly with AI-driven discovery from platforms like DQLabs to guide the way), any organization can implement obfuscation effectively.

As you adopt or enhance obfuscation in your environment, remember the goal: protect what’s sensitive, preserve what’s useful. Striking that balance ensures your data remains valuable, safe, and compliant—ready to support innovation and meet evolving regulatory demands.

FAQs

What’s the difference between data obfuscation and encryption?

Encryption is a type of data obfuscation—specifically, a reversible one. It scrambles data using an algorithm and key, making it unreadable unless decrypted. It’s highly secure when the key is safe, but the data must be decrypted before use. Obfuscation, on the other hand, is a broader term that includes both reversible (like tokenization) and irreversible (like masking or hashing) techniques. While encryption focuses on secrecy, obfuscation balances privacy with usability. Often, both are used together—encryption for data at rest or in transit, and other obfuscation methods for data in use or shared environments.

Can data obfuscation be automated?

Yes—and at scale, it must be. Manual masking isn’t practical for enterprise data. Most major platforms (like SQL Server, Oracle, or Snowflake) offer built-in masking or encryption. Dedicated tools let you create masking rules and apply them with a click or schedule. Scripting in Python or integrating with ETL pipelines also enables automation. AI-driven discovery tools like DQLabs can identify sensitive fields and trigger automated data obfuscation workflows. In DevOps and DataOps, these processes are often embedded in CI/CD or orchestration pipelines. Automation ensures speed, consistency, and security—keeping humans out of the loop and sensitive data protected.

How does data obfuscation help with GDPR compliance?

Data obfuscation supports GDPR in four major ways:

- Pseudonymization: GDPR encourages replacing identifiers with artificial ones. If re-identification keys are kept separately, the data is still personal data, but pseudonymization is a recognized safeguard that lowers risk and can ease some obligations.

- Privacy by Design: Techniques like masking help enforce data minimization by hiding PII when it’s not required, such as sharing analytics data with vendors.

- Breach Mitigation: If encrypted or masked data is leaked, the impact (and liability) is lower. In some cases, such as when the leaked data was encrypted and the key was not compromised, you may not have to notify the affected individuals under GDPR.

- International Use: Anonymizing data removes it from GDPR’s scope, enabling safer global sharing or repurposing without additional compliance hurdles.

Data obfuscation isn’t a silver bullet—it doesn’t replace the need for access controls or data subject rights—but it’s a core privacy safeguard. Regulators value technical measures like encryption and masking as proof of responsible data stewardship.
