Data drift refers to unexpected changes in data over time – whether in its content, structure, or meaning – that can throw off pipelines and machine learning models. Imagine carefully building a data pipeline or an ML model based on certain assumptions about your data, only to find those assumptions no longer hold a few months later. Without proactive monitoring, these shifts can lead to broken ETL jobs, inaccurate analytics, or decaying model performance.

This blog provides a deep dive into what data drift is, the various forms it takes (schema changes, statistical shifts, concept drift), why it happens, and how to detect and handle it using modern data observability practices. The goal is to equip data and AI engineers and teams with the knowledge to recognize drift early and maintain reliable, high-quality data pipelines and models.

What Is Data Drift?

Data drift is a broad term describing any significant change in data that occurs over time. These changes can be in the data’s structure, statistical properties, or the underlying relationship that the data represents.

In simpler terms, data that used to look or behave one way is now different in a notable way. Data drift isn’t just an academic concept for ML researchers – it’s something that data engineers, analysts, and ML practitioners all need to watch for in production data systems.

Data drift encompasses a few specific phenomena, including changes in schema (structure), changes in data distribution, and changes in concept/meaning. We’ll break these down in the next section. The common theme is that drift happens without explicit notification – it’s the “silent” change in your data that, if left unchecked, can lead to downstream problems. Recognizing what data drift is and how it manifests is the first step for data teams to mitigate its effects.

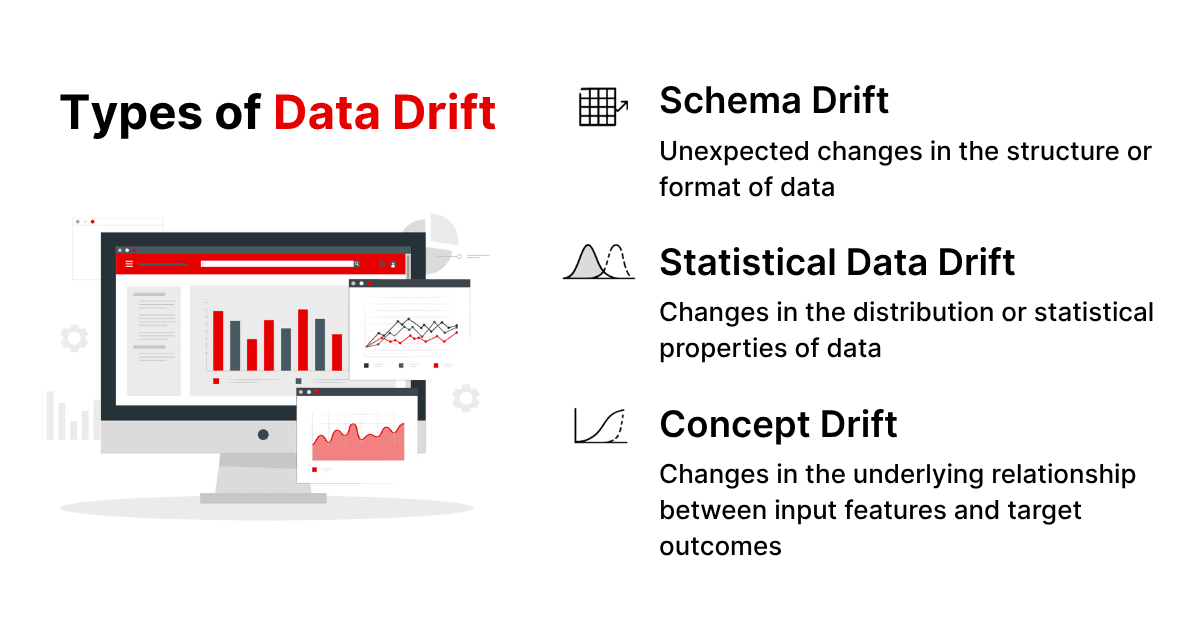

Types of Data Drift

Not all data drift is created equal. Broadly, data drift can be categorized into a few types based on what exactly is changing. Understanding these types helps in diagnosing issues and applying the right detection methods. The main forms of drift include schema drift, statistical data drift, and concept drift. Let’s explore each:

Schema Drift (Structural Changes)

Schema drift refers to unexpected changes in the structure or format of data. This could mean a database table’s schema has changed, a new column appears (or an existing one disappears), data types shift, or JSON/CSV fields change order or naming. For data engineers, schema drift is a common headache, because these structural changes can break ETL jobs, cause schema validation checks to fail, or lead to subtle errors if not detected.

Real-world example: Imagine your pipeline expects a CSV file with columns Date, ProductID, and Sales. One day, the source system adds a new column, Region, in the middle. Your parser might misalign columns, or your SQL transformations may fail due to the schema mismatch. Data observability tools can catch this by monitoring schema metadata – flagging if the number of columns changed or if a field’s data type no longer matches the expected schema. Proactively handling schema drift often involves schema versioning, flexible data ingestion that can adapt to evolving schemas, or using a schema registry for contracts, especially in event streams. The key is to detect these structural changes as soon as they happen, so that data engineers can update pipelines or mappings before data quality suffers.
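
As a rough illustration of the CSV example above, here is a minimal sketch of a header check that compares an incoming file against the expected column list. The `EXPECTED_COLUMNS` contract, the `diff_schema` helper, and the sample file contents are assumptions made up for this example; an observability platform would read the same information from warehouse or registry metadata.

```python
import csv
import io

# Assumed contract, taken from the Date / ProductID / Sales example above
EXPECTED_COLUMNS = ["Date", "ProductID", "Sales"]


def read_header(csv_text):
    """Return the header row of a CSV document as a list of column names."""
    return next(csv.reader(io.StringIO(csv_text)))


def diff_schema(expected, actual):
    """Describe how the actual column list differs from the expected one."""
    added = [c for c in actual if c not in expected]
    removed = [c for c in expected if c not in actual]
    # Columns that still exist but now sit at a different position
    moved = [
        c for c in expected
        if c in actual and expected.index(c) != actual.index(c)
    ]
    return {"added": added, "removed": removed, "moved": moved}


# Hypothetical file in which the source system inserted Region in the middle
drifted_file = "Date,ProductID,Region,Sales\n2024-01-01,42,EU,19.99\n"

report = diff_schema(EXPECTED_COLUMNS, read_header(drifted_file))
print(report)

if any(report.values()):
    print("Schema drift detected: review the mapping before loading this file")
```

Checking the `moved` list matters for positional parsers: here Sales has shifted one position to the right, which is exactly the misalignment risk described above.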

Statistical Data Drift (Data Distribution Shift)

Statistical data drift refers to changes in the distribution or statistical properties of data over time. It’s often what people simply call “data drift” in the context of machine learning – i.e., the input data’s patterns shift away from what was seen historically or during model training. This can include changes in mean, variance, frequency of certain values or categories, or the introduction of new patterns not seen before. This is also known as feature drift or covariate shift in ML terminology.

Real-world example: A data team at an e-commerce company might observe that average order value or product category distribution has shifted from Q3 to Q4. During the holiday season, for example, electronics may spike in sales, or new product lines may alter usual patterns. In Snowflake or BigQuery, such changes don’t break pipelines (schema remains the same) but can mislead analytics or degrade ML model performance if not addressed. A model trained on Q3 data may underperform in Q4 because input distributions—like product category mix or spend—have shifted. Statistical drift can also appear as KPI anomalies or unusual dashboard spikes. Monitoring for such drift means tracking metrics like mean, count, or category frequencies over time. Modern observability platforms apply anomaly detection on these trends, alerting teams when values deviate significantly from historical baselines, so they can investigate before issues escalate.
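
To make the monitoring idea concrete, here is a small sketch that tracks two of the signals mentioned above: a numeric metric checked against its history, and the category mix compared between two periods. All numbers are made up, and the thresholds (3 standard deviations, a 10-point share change) are illustrative assumptions rather than recommended values.

```python
from collections import Counter
from statistics import mean, stdev


def zscore_alert(history, latest, threshold=3.0):
    """Flag `latest` if it is more than `threshold` standard deviations from the history mean."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > threshold


def category_shares(values):
    """Return each category's share of the total number of records."""
    counts = Counter(values)
    total = sum(counts.values())
    return {category: n / total for category, n in counts.items()}


def share_shifts(baseline, current, min_delta=0.10):
    """Return categories whose share changed by at least `min_delta` (absolute)."""
    base, cur = category_shares(baseline), category_shares(current)
    shifts = {}
    for category in set(base) | set(cur):
        delta = cur.get(category, 0.0) - base.get(category, 0.0)
        if abs(delta) >= min_delta:
            shifts[category] = round(delta, 2)
    return shifts


# Made-up daily average order values for the baseline period
q3_daily_aov = [52.0, 49.5, 51.2, 50.3, 48.9, 50.8, 51.5]
normal_day_flagged = zscore_alert(q3_daily_aov, 50.9)
spike_day_flagged = zscore_alert(q3_daily_aov, 74.0)
print(normal_day_flagged, spike_day_flagged)

# Made-up product category mix for two quarters
q3_orders = ["apparel"] * 50 + ["electronics"] * 20 + ["home"] * 30
q4_orders = ["apparel"] * 30 + ["electronics"] * 45 + ["home"] * 25
shifts = share_shifts(q3_orders, q4_orders)
print(shifts)
```

In this toy data the schema never changes, yet the electronics share more than doubles, which is the kind of shift that leaves pipelines green while a model trained on Q3 quietly degrades.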

Concept Drift (Underlying Relationship Change)

Concept drift is a bit different – it occurs when the underlying meaning or relationship in the data changes, even if the raw data distribution might not obviously show a shift. This term is often used in the context of machine learning models, referring to a change in the relationship between input features and the target outcome. In essence, the “concept” that the model is trying to learn has evolved. The model’s performance degrades not necessarily because the input data looks drastically different, but because the world has changed in how inputs map to outputs.

Real-world example: Think of a credit scoring model that predicts loan default risk. If economic conditions shift (say, a recession hits), the relationship between a person’s financial attributes and their likelihood to default might change (perhaps even people with good credit scores are defaulting more due to external conditions). That’s concept drift – the model’s assumptions about how inputs relate to default risk no longer hold true.

Concept drift is crucial for data teams to understand because it directly impacts business metrics and model accuracy. It often requires retraining models or revising logic. Detecting concept drift usually involves monitoring model performance indicators (like error rates or prediction accuracy over time) and checking if they degrade. It can also involve monitoring target variable distributions – for example, the proportion of “yes” vs “no” outcomes in your prediction might shift (sometimes called prior probability shift). Data observability for ML models, sometimes termed ML monitoring, can catch concept drift by keeping an eye on metrics like prediction distributions vs actual outcomes, and alerting if there’s a significant divergence.
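
One simple way to monitor model performance over time is a rolling accuracy window that is updated whenever ground truth arrives. The sketch below assumes a classification model with a known baseline accuracy; the `RollingAccuracyMonitor` class, the window size, and the allowed drop are hypothetical choices for illustration.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Track accuracy over the most recent labelled predictions."""

    def __init__(self, baseline_accuracy, window=50, max_drop=0.10):
        self.baseline = baseline_accuracy
        self.max_drop = max_drop
        self.outcomes = deque(maxlen=window)  # True where the prediction was correct

    def record(self, predicted, actual):
        self.outcomes.append(predicted == actual)

    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def drifted(self):
        # Only judge once the window is full, to avoid alerts on tiny samples
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.baseline - self.accuracy() > self.max_drop


monitor = RollingAccuracyMonitor(baseline_accuracy=0.9, window=50)

# Simulated period 1: the model is right 45 times out of 50
for i in range(50):
    monitor.record(predicted=1, actual=1 if i % 10 else 0)
before = (monitor.accuracy(), monitor.drifted())

# Simulated period 2: the world changes and only 30 of 50 predictions hold up
for i in range(50):
    monitor.record(predicted=1, actual=1 if i % 5 < 3 else 0)
after = (monitor.accuracy(), monitor.drifted())

print(before, after)
```

Note that the predictions themselves look identical in both periods; only the comparison against actual outcomes reveals the change, which is why concept drift is hard to see from input data alone.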

Why Does Data Drift Happen?

Data drift can seem mysterious, but it usually has logical causes. Understanding why drift happens can help data engineers both predict potential drifts and design systems robust to change. Here are some common causes of data drift:

- Upstream Data Changes: One of the most frequent causes is changes introduced in upstream systems. This could be a software update that changes how data is logged, a new version of an app, or a migration to a different database. For instance, a source team might start tracking a new feature (adding a column) or apply a different encoding or business rule that alters the data values. These changes can lead to both schema drift (structure changes) and data drift (different values or distributions).

- Evolving User Behavior and Seasonality: Human-driven data is never static. Customer preferences, behavior patterns, and market trends evolve continuously. Seasonal effects or external trends can cause data to shift. For example, social media usage patterns may drift as a platform’s user demographics change. A sudden viral trend can spike certain metrics. Over a longer term, gradual changes like an aging user base or shifting consumer interests will reflect in the data. These fall under statistical drift – the data distribution changes as the world generating that data changes.

- External Events and Shocks: Major external events (think of a global pandemic, economic downturn, new regulations, or even a viral news event) can cause abrupt concept and data drifts. COVID-19, for instance, dramatically shifted consumer behavior in many industries – models trained on pre-2020 data often became inaccurate in the post-2020 reality. Regulatory changes can redefine categories (e.g., a new law might redefine what counts as sensitive data, changing how data is labeled or collected). These are often sources of concept drift because the fundamental ground truth has changed.

- Changes in Data Collection or Instrumentation: Sometimes the tools and processes we use to gather data change. A classic scenario is a logging pipeline update: say your web app’s analytics SDK gets upgraded and now captures events slightly differently. Perhaps the definition of an “active user” event changes, or a sensor’s firmware update increases its precision. Such changes can introduce drifts – maybe you start seeing more events (not necessarily because users increased, but because the instrument is logging more aggressively), or sensor readings shift range due to calibration. Data preprocessing steps can also cause drift: if an ETL job’s logic is tweaked (like new data cleaning rules or filtering criteria), the output data distribution might change from before.

- Addition of New Data Sources or Features: In modern data stacks, it’s common to continually integrate new data sources. Merging a new source dataset or feature into an existing pipeline can introduce drift if the new source has different characteristics. For example, a company expanding into a new region might start adding data from that region – the overall dataset now has new categories (e.g., new countries or languages) and different distributions that can drift from the previous state. Likewise, combining two customer databases or enabling a new user event will change the overall data profile.

- Data Quality Issues and Gradual Decay: Not all drift comes from intentional changes; some come from entropy in data quality. Over time, data can accumulate noise, errors, or missing values. Perhaps a slowly degrading sensor starts reporting higher error readings, gradually shifting the average of a measurement. Or user input data becomes messier as new types of entries appear that weren’t previously validated (imagine free-form text fields getting new kinds of responses). These issues can cause subtle data drift. If outliers or null values start increasing due to a hidden pipeline bug, your data’s statistical properties drift from the ideal clean state.

The Impact of Data Drift on Data Engineering and ML

Data drift isn’t just a theoretical annoyance; it has tangible impacts on both the technical health of data pipelines and the accuracy of analytics or machine learning models. Let’s break down the consequences:

Data Drift in Data Pipelines (Engineering Impact)

For data engineers, drift can directly translate to pipeline pain. Schema drift often causes pipelines or ETL jobs to fail outright – for example, a scheduled job might throw an error because a column it expected is missing or has a different type. In the worst case, a pipeline might not fail obviously but produce corrupted or misaligned data (which is even scarier, as bad data silently enters your warehouse). This leads to what some call “data downtime” – periods when data is not reliable or available for use. Every change not accounted for is a potential incident: missing data in dashboards, reports that don’t run, or alerts going off because of unexpected nulls. Data engineers end up firefighting these issues, doing ad-hoc investigations into “what changed?”. This reactive mode can drain productivity and erode trust in data.

Even statistical drift (without schema changes) can hurt pipelines. Suppose a key metric’s value doubles overnight due to a genuine change in the business – if you have downstream systems expecting values in a certain range (say, a transformation that caps values or a report that highlights “above threshold” values), those systems might behave erratically. A sudden spike or drop in data volume (volume drift) can overwhelm a pipeline or trigger false alarms in monitoring systems if thresholds aren’t adjusted. Essentially, when data characteristics shift, data quality rules and pipeline logic that were tuned for the old state might no longer be valid. This requires retuning or making pipeline logic more dynamic.

Another impact is on data governance and compliance: if sensitive data fields drift (e.g., a field that was mostly anonymized now suddenly contains some raw personal data because upstream changed), you could inadvertently violate compliance rules. Or if referential integrity drifts (say, new category values appear that don’t match the reference data set), the completeness and consistency of your data is affected. Data drift can thus degrade overall data quality, leading to inaccurate analyses if not caught.

Data Drift in Machine Learning Models (Model & Business Impact)

From a machine learning perspective, data drift is often cited as a primary cause of model decay. Models in production start to perform worse when the data they see begins to differ from the data they were trained on. If you ignore drift, you might find that your once-accurate predictions are now way off, which can be dangerous in a business setting. For instance, if a fraud detection model doesn’t adapt to new fraud tactics (concept drift), it could miss fraudulent transactions, causing financial loss. If a recommendation engine’s user behavior data drifts, it may start showing irrelevant recommendations, leading to poor user experience and lost revenue. In marketing or customer analytics, data drift might mean your segmentation or forecasting models are off-target, resulting in misallocated budget or missed opportunities.

The business impact can be huge: poor decisions based on stale or drifted data can cost money and reputation. For example, consider a retail company hit by concept drift when the COVID pandemic began: its ML demand forecasting model started severely underestimating demand for home office furniture, because pre-2020 data did not include the sudden work-from-home effect. Left unnoticed, this drift would mean stocking out of popular items (lost sales) or misallocating inventory. With data observability in place, the team could catch unusual patterns early (like a surge in certain product category sales against the forecast) and realize the model needs retraining with recent data. This kind of agility can turn a potential loss into an opportunity to recalibrate strategy quickly.

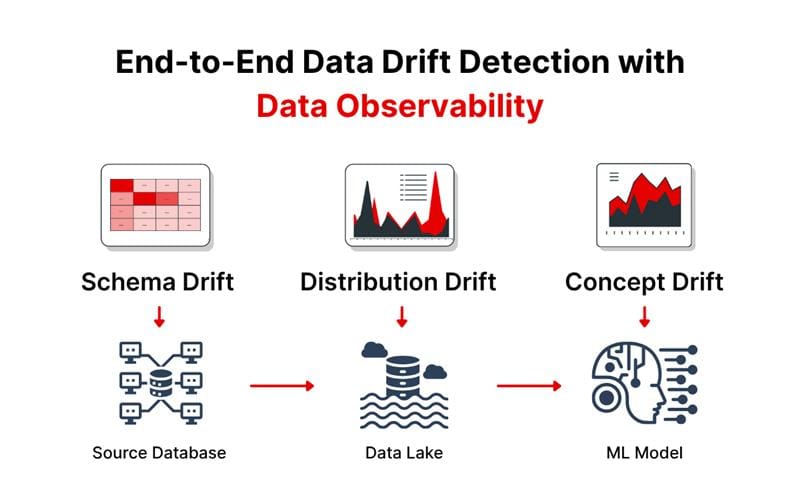

Detecting Data Drift with Data Observability

Given the risks, detecting data drift as early as possible is critical. This is where data observability comes in. Data observability is the practice of continuously monitoring the health and behavior of your data pipelines and data itself by tracking various signals and metrics.

Here’s how data observability helps detect different kinds of drift:

- Schema Change Detection: A good observability platform automatically detects schema drift by monitoring metadata changes—like a new column added to a table or an API response format shift. Instead of relying on tribal knowledge or manual checks, the system notifies data owners when a schema deviates from its previous version. Techniques include integration with schema registries or warehouse queries to track changes. With observability integrated into workflows (like an Airflow job or a dbt test), teams can catch breaking changes early. Many tools support schema drift detection out-of-the-box and send alerts when unexpected changes occur.

- Data Volume and Freshness Anomalies: Monitoring record counts and data arrival times helps flag volume and freshness drift. A 30% drop in daily customer records might indicate a stuck pipeline or upstream filtering. A sudden spike could mean duplicate records or an increase in events. If data that usually arrives at 8 a.m. shows consistent delays, that signals potential upstream changes. Observability dashboards track these metrics over time, triggering alerts if thresholds are breached. Advanced tools use ML to set dynamic thresholds that adjust for patterns like weekend slowdowns or monthly spikes.

- Statistical Distributions Monitoring: Statistical drift is detected by profiling data over time, tracking values like means, medians, or category frequencies. Platforms like DQLabs use anomaly detection on these time-series metrics to spot shifts. For instance, if the null rate in CustomerAge suddenly doubles, or if “PayPal” usage drops from 20% to 5%, the system flags it. Some teams also apply statistical measures such as the Population Stability Index (PSI), Kullback-Leibler (KL) divergence, or the Kolmogorov-Smirnov (KS) test to quantify differences between time windows (e.g., today vs last month). These can be automated to catch subtler drifts that simple anomaly rules might miss.

- Data Quality Rule Breakage: Observability tools also track adherence to predefined data quality rules—like value ranges or null limits. A rule failure often signals drift, but observability goes further by catching “unknown unknowns.” If a value outside any previously seen range appears, ML-driven anomaly detection can still flag it. Pairing rule-based checks (e.g., with dbt) with observability ensures both expected and unexpected issues are caught.

- Model Performance Monitoring: For concept drift in ML models, observability extends to model monitoring. This means tracking metrics like accuracy, error rates, or business KPIs tied to the model’s output. If there is a consistent decline in model accuracy on recent data, that’s a red flag for drift. Another approach is to compare the model’s predictions distribution to the actual outcomes distribution (when you have ground truth later) – any significant divergence might indicate concept drift. Specialized ML observability solutions can monitor feature importance and data drift on features feeding the model as well, highlighting which specific feature might be drifting the most and contributing to model issues.

- End-to-End Pipeline Visibility: A hallmark of good observability is not just detecting an anomaly, but helping trace its root cause. When a drift alert fires, engineers need to quickly pinpoint where and why. Observability systems that integrate metadata, lineage, and logs can help answer: Did a specific upstream job failure lead to missing data (volume drift)? Did a particular deployment or code change coincide with the drift? For example, if an alert says “Schema drift detected in Table Orders (new column added)”, the platform could immediately show that this table is fed by a Spark job that had a new version deployed last night by the X team – providing a lead on the cause. Similarly, lineage can show which downstream dashboards or models might be impacted by that drift, so you can proactively inform those users.
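
Of the statistical tests mentioned in the list above, PSI is one of the easiest to compute by hand. The sketch below is a minimal stdlib-only version that bins a baseline sample into equal-width buckets and compares the bucket shares of a newer sample. The bin count, the `eps` floor for empty buckets, and the sample data are assumptions for illustration.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline sample and a newer sample.

    Buckets are equal-width over the baseline's range; values outside that
    range are counted in the first or last bucket.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # degenerate baseline where every value is identical

    def shares(sample):
        counts = [0] * bins
        for x in sample:
            idx = int((x - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # Floor empty buckets at eps so the logarithm stays defined
        return [max(c / len(sample), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Made-up feature values: last month versus a shifted sample from today
last_month = list(range(100))
today = [x + 40 for x in last_month]

stable_score = psi(last_month, last_month)
shifted_score = psi(last_month, today)
print(stable_score, shifted_score)
```

A common rule of thumb treats PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as a significant shift, though the right cutoffs depend on the feature and should be validated against your own data.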

For best practices on implementing pipeline observability, check out our blog on Data Pipeline Observability.

How DQLabs Helps: As an example of a modern data observability platform, DQLabs uses ML-driven observability signals to catch drift automatically. It provides out-of-the-box adaptive rules that learn your data’s normal behavior, so you don’t have to manually set static thresholds for every metric. DQLabs continuously tracks metrics like row counts, schema changes, data freshness, duplicates, and column value profiles. If something goes out of the ordinary – say a schema change or a spike in missing values – it generates an alert with context. The platform’s time-series analysis on both numeric and categorical data means it can even factor in seasonality (so it knows the difference between an expected yearly seasonal drift versus a truly anomalous shift). By deploying such a tool, data teams gain a real-time radar for data drift, freeing them from relying purely on reactive troubleshooting or periodic manual checks.
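
To show what a seasonality-aware threshold means in practice, here is a deliberately simple sketch that learns a separate expected range of row counts for each day of the week. This is not how DQLabs or any particular platform implements adaptive rules; the `weekday_bounds` helper, the band of 3 standard deviations, and the sample history are all assumptions for illustration.

```python
from collections import defaultdict
from statistics import mean, stdev


def weekday_bounds(history, k=3.0):
    """Learn a (low, high) band of row counts per weekday.

    `history` is a list of (weekday, row_count) pairs, with weekday
    0 = Monday through 6 = Sunday. Bands are mean +/- k standard deviations.
    """
    by_day = defaultdict(list)
    for weekday, count in history:
        by_day[weekday].append(count)

    bounds = {}
    for weekday, counts in by_day.items():
        if len(counts) < 2:
            continue  # not enough history to estimate spread
        mu, sigma = mean(counts), stdev(counts)
        bounds[weekday] = (mu - k * sigma, mu + k * sigma)
    return bounds


def is_anomalous(bounds, weekday, count):
    """Return True if the count falls outside the learned band for that weekday."""
    if weekday not in bounds:
        return False
    low, high = bounds[weekday]
    return not (low <= count <= high)


# Made-up history: four weeks, busy weekdays and quiet weekends
history = []
for week in range(4):
    for weekday in range(7):
        base = 10000 if weekday < 5 else 3000
        history.append((weekday, base + 100 * week))

bounds = weekday_bounds(history)
saturday_ok = is_anomalous(bounds, weekday=5, count=3100)
monday_low = is_anomalous(bounds, weekday=0, count=3100)
print(saturday_ok, monday_low)
```

The same count of 3,100 rows is normal on a Saturday and alarming on a Monday, which a single static threshold cannot express.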

Strategies for Handling and Preventing Data Drift

Detecting drift is half the battle; the other half is responding to it and preventing future issues. Here are strategies data teams can employ to mitigate data drift and its impact:

- Establish a Retraining Schedule and Triggers: For machine learning models, expect concept drift to occur eventually. Avoid a “set it and forget it” approach—schedule regular retraining cycles (monthly or quarterly) using the latest data to keep models updated. More importantly, use drift detection as a trigger for off-cycle retraining: if your observability system flags significant input drift or a drop in model performance, this should prompt immediate investigation or retraining. Advanced MLOps setups can automate this process, kicking off retraining and redeployment when drift crosses a threshold.

- Build Robust Pipelines with Flexibility: To handle schema drift, design data pipelines that accommodate change. If ingesting JSON or Avro data, use schema evolution features that tolerate new or unknown fields. In Spark, options like mergeSchema for Parquet or using flexible struct columns can help. Avoid using SELECT * blindly in SQL or ETL scripts, but also avoid rigid column mappings if upstream changes are expected. Strike a balance and ensure there’s a quick update process for schema mappings when changes are detected. Having a schema registry or contract tests can also help manage expected schema changes in a controlled way (especially for event streams, where a schema registry for Kafka helps producers and consumers manage schema evolution compatibly). The key is to reduce the chances that a schema change flat-out breaks your pipeline: either accommodate the change, or make the pipeline fail fast with a clear error so you catch it (rather than silently producing wrong data).

- Versioning and Data Lineage: Track data versions and maintain lineage metadata. Keeping snapshots or samples of both training and recent data enables you to measure drift explicitly and trace its impact. If a source changes, lineage helps quickly identify affected downstream datasets and reports, so stakeholders can be alerted and outputs double-checked. Many observability and cataloging tools automate lineage tracking.

- Data Quality Controls and Alerts: Embed rule-based validations across your pipelines. Checks like “no nulls in primary key” or “order date must be in the past” help catch obvious errors and early signs of drift. While observability handles broader pattern anomalies, quality rules act as a first line of defense—especially for issues like data entry errors or invalid values. Configure DQ checks both upstream (to reject bad data early) and downstream (before data hits dashboards or models). When a check fails, treat it as you would a failing unit test in software – investigate, and only allow data through once resolved or consciously accepted.

- Collaborative Communication: Often, the knowledge of an upcoming change that could cause drift exists somewhere in the organization (for example, the product team knows they will launch a new feature that adds a new event type to the logs). Encourage a culture where data producers (upstream system owners) and data consumers (downstream analysts, data scientists) communicate about changes. This might be through change request processes, subscriber lists for data schema changes, or just Slack channels where announcements are made. While automation is great, human communication can sometimes pre-empt a drift incident entirely (“Oh, you’re adding a new category value next month? Let me make sure our analytics can handle that.”). Catalog tools with owner tags or discussion threads, and agile DataOps processes, can facilitate this awareness.

- Governance and Policy Enforcement: Strong data governance policies often provide a safety net against drift. For example, mandating that schema changes in critical datasets go through reviews, or requiring that new sources be profiled and approved before use. Governance also includes monitoring compliance—if a field suddenly starts containing PII data, observability alerts can surface it quickly. These controls don’t prevent drift entirely, but they ensure it’s handled in a structured, auditable way.

- Continuous Improvement and Feedback Loops: Treat each instance of data drift as a learning opportunity. When you detect and resolve a drift issue, perform a post-incident analysis. Ask: Could we have caught this sooner or prevented it? If a certain type of drift keeps recurring, perhaps you need a new monitoring metric or a more frequent data review with stakeholders. Over time, your team can build a playbook for common drift scenarios. For example, “If an upstream team changes a data field format, our playbook says: update schema in ingestion script, backfill the historical data in new format if needed, communicate the change to affected analysts, add a test to ensure format remains consistent going forward.” This turns reactive firefighting into proactive management.

- Leverage AI and Automated Remediation: Newer data observability platforms (again, like DQLabs) are increasingly embedding AI not just to detect but also to suggest fixes for data issues. While human oversight is vital, don’t shy away from using automation for routine responses. For example, if a known drift happens (like the typical end-of-quarter surge in data volume that always triggers false alarms), you can automate an adjustment to thresholds during that period or automate scaling of infrastructure to handle the volume. In cases of model drift, some systems can auto-retrain a model and run champion/challenger evaluations. Automated remediation needs careful governance, but when well-configured, it can dramatically reduce downtime.
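
The rule-based checks described in the list above can be sketched as a small gate that either passes a batch through or fails fast with a clear error. The `order_id` and `order_date` field names, the `DataQualityError` class, and the sample rows are hypothetical; in practice the same rules are often expressed as dbt tests or checks in a validation framework.

```python
from datetime import date


class DataQualityError(Exception):
    """Raised when a batch violates one or more data quality rules."""


def check_orders(rows, today):
    """Return a list of human-readable rule violations for a batch of order rows."""
    failures = []
    for i, row in enumerate(rows):
        # Rule 1: no nulls in the primary key
        if row.get("order_id") is None:
            failures.append(f"row {i}: order_id is null")

        # Rule 2: order date must not be in the future
        order_date = row.get("order_date")
        if order_date is None:
            failures.append(f"row {i}: order_date is null")
        elif order_date > today:
            failures.append(f"row {i}: order_date {order_date} is in the future")
    return failures


def gate(rows, today):
    """Pass the batch through unchanged, or fail fast with every violation listed."""
    failures = check_orders(rows, today)
    if failures:
        raise DataQualityError("; ".join(failures))
    return rows


batch = [
    {"order_id": 1, "order_date": date(2024, 5, 1)},
    {"order_id": None, "order_date": date(2024, 5, 2)},
    {"order_id": 3, "order_date": date(2024, 6, 30)},
]

failures = check_orders(batch, today=date(2024, 5, 10))
print(failures)

try:
    gate(batch, today=date(2024, 5, 10))
except DataQualityError as err:
    print(f"Batch rejected: {err}")
```

Passing `today` in explicitly keeps the check deterministic and easy to test, which fits the idea of treating a failed check like a failing unit test.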

By employing these strategies, data teams can minimize the disruption caused by data drift. Ultimately, you want to reach a state where your systems are resilient to change: when data evolves, it’s detected quickly and handled gracefully, without panicked late-night issues.
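
As one concrete example of handling drift gracefully, the retraining schedule and triggers described earlier can be reduced to a small decision function. The sketch below assumes you already compute an input drift score (PSI here) and an accuracy drop for the model; the function name and every threshold are illustrative assumptions, not recommended values.

```python
from datetime import date, timedelta


def should_retrain(last_trained, today, input_psi, accuracy_drop,
                   max_age_days=90, psi_limit=0.25, drop_limit=0.05):
    """Return the list of reasons to retrain; an empty list means no action."""
    reasons = []
    # Scheduled cycle: retrain once the model reaches the maximum age
    if today - last_trained >= timedelta(days=max_age_days):
        reasons.append("scheduled")
    # Off-cycle trigger: significant drift in the model's input features
    if input_psi > psi_limit:
        reasons.append("input_drift")
    # Off-cycle trigger: measured performance fell too far below baseline
    if accuracy_drop > drop_limit:
        reasons.append("performance_drop")
    return reasons


fresh_model = should_retrain(date(2024, 1, 1), date(2024, 2, 1),
                             input_psi=0.40, accuracy_drop=0.01)
stale_model = should_retrain(date(2024, 1, 1), date(2024, 6, 1),
                             input_psi=0.05, accuracy_drop=0.08)
print(fresh_model, stale_model)
```

Returning the reasons rather than a bare boolean makes it easier to route the outcome: a scheduled retrain can run automatically, while a performance drop may warrant human investigation first.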

Conclusion

Data drift is inevitable in any evolving data system. Whether it’s a subtle shift in statistical distributions, a structural schema change, or a more complex shift in how input features relate to outcomes in machine learning models, drift has real consequences. Left unchecked, it can lead to broken pipelines, inaccurate dashboards, and decaying model performance—ultimately eroding trust in your data.

Addressing drift effectively requires more than ad-hoc checks. With data observability, teams gain continuous visibility into their pipelines and datasets. By monitoring schema changes, data volumes, freshness, and distribution anomalies in real-time, observability platforms help detect drift early—before it causes downstream damage.

But detection is just the beginning. Building resilient data systems means combining observability with flexible pipeline design, proactive data quality checks, clear governance, and feedback loops. When done well, managing drift becomes a predictable part of your data operations—not a recurring crisis.

By embracing observability and proactive strategies, your team can maintain reliable data pipelines, preserve model accuracy, and ensure the insights your business depends on remain trustworthy—even as your data and the world around it continue to change.

FAQs

What is the difference between data drift and concept drift?

Data drift refers to changes in the distribution or content of data over time, such as shifts in demographics or transaction patterns. Concept drift, by contrast, involves changes in the relationship between inputs and outputs: the logic behind a model’s predictions no longer reflects reality. For instance, if what defines a “fraudulent transaction” changes due to new tactics, the model’s understanding becomes outdated. In short, data drift affects the input (X), while concept drift alters how X relates to the output (Y). Mitigation differs too: data drift may require updating datasets, while concept drift often demands revising model logic or retraining with new assumptions.

How can data teams detect data drift early?

Continuous monitoring is key. Teams should use data observability tools to track metrics like row counts, schema changes, and value distributions in real time. Alerts should trigger when today’s data deviates from historical patterns. Embedding data checks into pipelines—via dbt tests or custom scripts—can catch drift early during ingestion or transformation. Visual trend analysis helps spot gradual shifts. The goal is to move from manual, reactive checks to proactive, automated surveillance of data health.

Why is data drift important to manage in production ML models?

In production ML, unmanaged data drift leads to model decay – the model’s predictions become less accurate and potentially misleading. This can have real business costs: for example, a recommendation system may start suggesting irrelevant products (hurting sales and user trust) if user behavior data drifts from what the model was trained on. In high-stakes fields like healthcare or finance, drift could even mean the difference between identifying a risk or missing it. Managing drift through regular model updates, monitoring, and alerts ensures the model stays aligned with the current reality. It protects the investment made in developing the model and safeguards the decisions or user experiences driven by that model.

Can data drift occur even if our data is not used for machine learning?

Definitely. Data drift affects all data pipelines—not just ML models. For example, dashboards may display unexpected trends because the data source changed, not the calculations. In warehouses, category definitions might shift, breaking reports or causing incorrect joins. Even in simple SQL-based reporting, drift can impact accuracy. Managing drift is essential for maintaining trust in any data-driven system.
