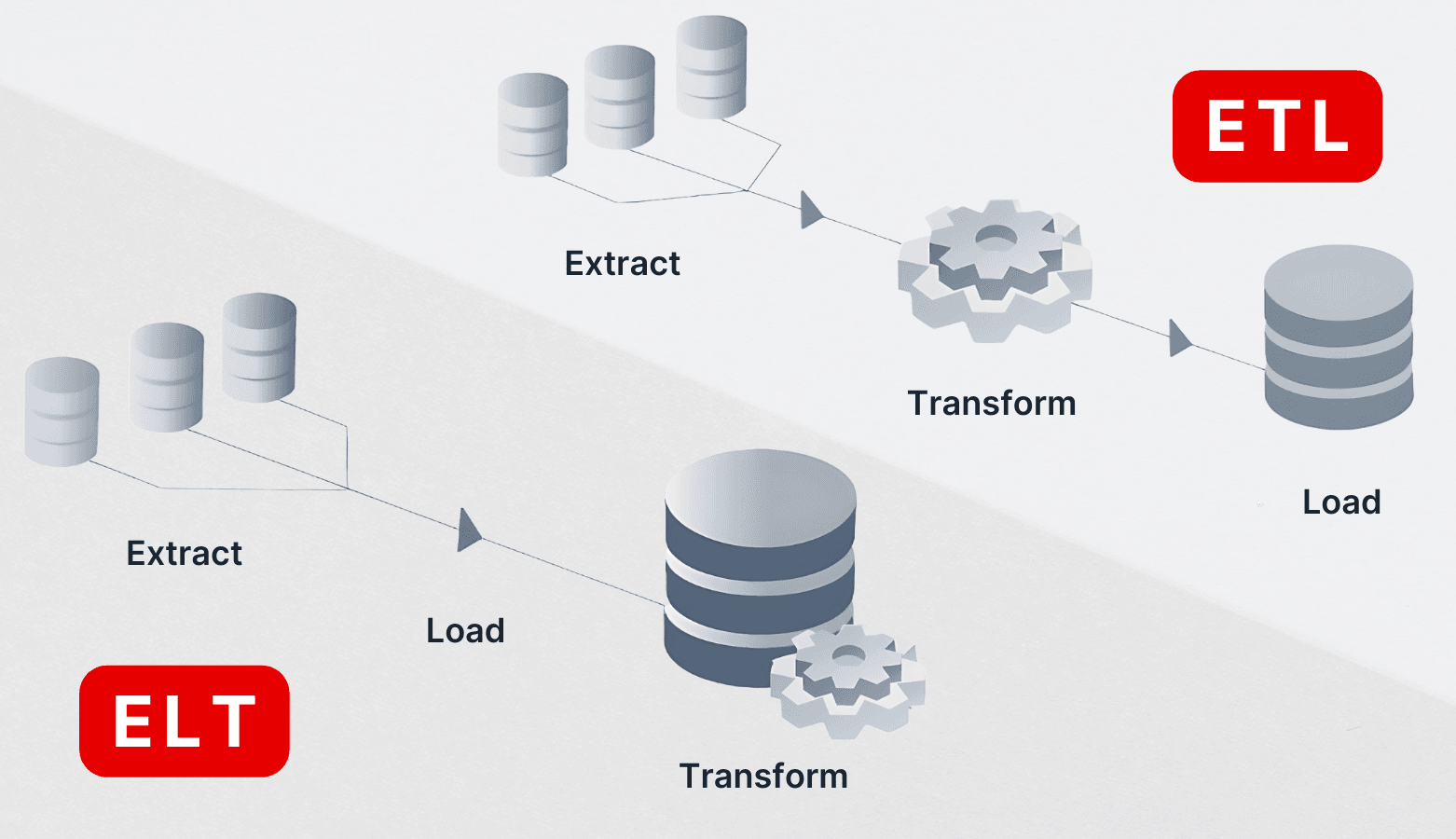

Modern data teams often debate ETL vs ELT when building their data pipelines. Both approaches help you move and prepare data for analysis, but the difference between ETL and ELT comes down to when and where data is transformed. ETL (Extract, Transform, Load) transforms data before loading it into a target system, while ELT (Extract, Load, Transform) loads data first and transforms it later. This one change in order has big implications for speed, scalability, and flexibility. In this comprehensive guide, we’ll break down ETL vs ELT in depth – covering what each is, key differences (with a handy comparison table), pros and cons, use cases, and how to choose the right approach. Let’s dive in and see which data integration strategy best fits your needs, and how ensuring data quality and observability (with platforms like DQLabs) keeps your data pipeline reliable.

What is ETL?

ETL (Extract, Transform, Load) is a classic data integration approach used to move and prepare data for analytics. It follows a linear process: extract data from sources, transform it to match business and technical requirements, and load the cleaned data into a target system like a data warehouse.

ETL stands for:

- Extract – Pull data from various sources like databases, files, or apps.

- Transform – Clean, standardize, and apply business rules to the data in a staging area.

- Load – Move the transformed data into the warehouse, ready for reporting and analysis.
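The three steps can be sketched in a few lines of Python. This is a minimal illustration, not a production design. The source records, the `orders` table, and the rule that drops rows without an amount are all hypothetical, and an in-memory SQLite database stands in for the data warehouse. All cleaning happens in Python before any row reaches the target.

```python
import sqlite3

# Hypothetical source records, standing in for rows pulled from a database, file, or app.
SOURCE_ROWS = [
    {"order_id": "1001", "customer": " alice ", "amount": "19.99", "country": "us"},
    {"order_id": "1002", "customer": "Bob", "amount": "", "country": "DE"},
    {"order_id": "1003", "customer": "carol", "amount": "5.00", "country": "de"},
]


def extract():
    """Extract: return raw records from the (hypothetical) source."""
    return list(SOURCE_ROWS)


def transform(rows):
    """Transform: clean, standardize, and validate before anything is loaded."""
    clean = []
    for row in rows:
        if not row["amount"]:  # illustrative business rule: drop orders without an amount
            continue
        clean.append((
            int(row["order_id"]),
            row["customer"].strip().title(),
            round(float(row["amount"]), 2),
            row["country"].upper(),
        ))
    return clean


def load(conn, rows):
    """Load: write only the curated rows into the warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, customer TEXT NOT NULL, "
        "amount REAL NOT NULL, country TEXT NOT NULL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()


warehouse = sqlite3.connect(":memory:")  # in-memory SQLite stands in for the warehouse
load(warehouse, transform(extract()))
print(warehouse.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

The order without an amount never reaches the warehouse. That is the curated-data guarantee ETL provides, and it is also why the dropped record cannot be analyzed later.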

ETL ensures only curated, high-quality data reaches the warehouse, making it ideal for compliance-heavy environments or when upfront data validation is critical.

What is ELT?

ELT (Extract, Load, Transform) takes a modern approach by loading raw data directly into the warehouse first, then transforming it inside the destination using SQL or platform-native tools. It supports large-scale, agile analytics by enabling faster ingestion and flexible reprocessing. ELT is well-suited for cloud-native platforms and evolving use cases—but requires strong governance to avoid quality and access issues.
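For contrast, here is the same hypothetical data handled ELT-style. In-memory SQLite again stands in for a cloud warehouse, and the table names and cleaning rules are illustrative assumptions. The raw rows are loaded as-is, and the cleanup runs afterward as SQL inside the target. The untouched `raw_orders` table therefore stays available for later reprocessing.

```python
import sqlite3

# Hypothetical raw records, loaded exactly as extracted (all values kept as text).
RAW_ROWS = [
    ("1001", " alice ", "19.99", "us"),
    ("1002", "Bob", "", "DE"),
    ("1003", "carol", "5.00", "de"),
]

warehouse = sqlite3.connect(":memory:")  # in-memory SQLite stands in for a cloud warehouse

# Extract + Load: land the data untouched in a raw table.
warehouse.execute(
    "CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT, country TEXT)"
)
warehouse.executemany("INSERT INTO raw_orders VALUES (?, ?, ?, ?)", RAW_ROWS)

# Transform: run SQL inside the warehouse to build a cleaned, typed table.
warehouse.execute("""
    CREATE TABLE orders AS
    SELECT CAST(order_id AS INTEGER) AS order_id,
           UPPER(SUBSTR(TRIM(customer), 1, 1)) || LOWER(SUBSTR(TRIM(customer), 2)) AS customer,
           CAST(amount AS REAL) AS amount,
           UPPER(country) AS country
    FROM raw_orders
    WHERE amount <> ''
""")

print(warehouse.execute("SELECT COUNT(*) FROM raw_orders").fetchone())  # raw rows are kept
print(warehouse.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```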

ETL vs. ELT: Key Differences

ETL and ELT are two common methods for integrating data, but they go about it in different ways. Both aim to make data ready for analysis. What sets them apart is when and where transformations happen. Let’s take a closer look at how each one works:

Transformation Timing and Location:

The biggest difference between ETL and ELT is when and where the transformation occurs. In ETL, data gets transformed on a separate server before it’s loaded into the target system. With ELT, the data is first loaded into the target system (like a data warehouse) and only transformed afterward. This means ETL relies on an extra step and possibly additional infrastructure for transformation, while ELT uses the target system to do the heavy lifting.

Scalability and Performance:

ETL can struggle with large datasets because every record must pass through a separate transformation engine before it is loaded. As volumes grow, that engine’s capacity becomes the bottleneck. ELT instead relies on modern data warehouses, which can process large amounts of data quickly by distributing the work across multiple nodes. ETL is still a solid choice for smaller datasets. For big data, ELT shines by loading quickly and running transformations in parallel inside the warehouse.

Data Flexibility and Schema:

ETL forces you to decide on a structure before transforming and loading the data, which is called schema-on-write. This gives you a clean, consistent model but lacks flexibility. ELT uses schema-on-read, so you load the raw data first and decide how to use or transform it later. This approach is more flexible, especially when your data is diverse or constantly changing. With ELT, you have the raw data available for analysis, which is perfect for exploring new insights down the line.
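A small Python sketch makes the schema-on-write versus schema-on-read distinction concrete. The two-field schema and the event record are hypothetical. When a schema is enforced on write, the extra `coupon` field is discarded. When the schema is applied at read time, that field is still there.

```python
import json

# Hypothetical fixed schema: field name -> required Python type.
ORDER_SCHEMA = {"order_id": int, "amount": float}


def write_with_schema(table, record):
    """Schema-on-write: validate and coerce at load time; reject non-conforming records."""
    row = {}
    for field, typ in ORDER_SCHEMA.items():
        if field not in record:
            raise ValueError(f"missing field {field!r}")
        row[field] = typ(record[field])
    table.append(row)  # any fields not in the schema are discarded here


def write_raw(store, record):
    """Schema-on-read: store the record as-is (here, as a JSON string)."""
    store.append(json.dumps(record))


def read_with_schema(store, fields):
    """Apply a schema only when reading, choosing the fields this use case needs."""
    for line in store:
        record = json.loads(line)
        yield {f: record.get(f) for f in fields}


curated, raw = [], []
event = {"order_id": "7", "amount": "12.5", "coupon": "SPRING"}
write_with_schema(curated, event)
write_raw(raw, event)

print(curated)                                              # coupon was dropped on write
print(list(read_with_schema(raw, ["order_id", "coupon"])))  # coupon still available on read
```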

Data Quality & Governance:

ETL ensures data quality during the transformation step, so by the time it’s loaded into the system, it’s already cleaned and validated. ELT loads everything raw, meaning the responsibility for ensuring quality falls on the team later on. Tools like DQLabs help here by monitoring the data after it’s loaded, identifying any anomalies or quality issues before they become a problem.
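Post-load checks of this kind often come down to SQL queries run against the loaded tables. The sketch below is a generic illustration and does not show how DQLabs or any other platform works. The `raw_customers` table and the two checks (null emails, duplicate IDs) are assumptions, and in-memory SQLite stands in for the warehouse.

```python
import sqlite3

# In-memory SQLite stands in for the warehouse; rows were loaded raw, ELT-style.
warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE raw_customers (customer_id INTEGER, email TEXT)")
warehouse.executemany(
    "INSERT INTO raw_customers VALUES (?, ?)",
    [(1, "a@example.com"), (2, None), (2, "b@example.com"), (3, "c@example.com")],
)

# Each check is a SQL query returning a count of problems (0 = pass).
# These checks are illustrative, not an exhaustive rule set.
CHECKS = {
    "null_email": "SELECT COUNT(*) FROM raw_customers WHERE email IS NULL",
    "duplicate_customer_id": """
        SELECT COUNT(*) FROM (
            SELECT customer_id FROM raw_customers
            GROUP BY customer_id HAVING COUNT(*) > 1
        )""",
}


def run_checks(conn, checks):
    """Run every check and return {check_name: problem_count} for the failing ones."""
    failures = {}
    for name, sql in checks.items():
        (count,) = conn.execute(sql).fetchone()
        if count:
            failures[name] = count
    return failures


print(run_checks(warehouse, CHECKS))
```

Scheduling checks like these after every load, and alerting on a non-empty result, is the basic idea behind monitoring raw data once it has landed.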

Infrastructure & Cost:

ETL usually requires separate servers or tools to handle the transformations, which adds cost. ELT reduces the need for extra infrastructure by running transformations in the target system. However, those transformations consume warehouse compute, so frequent large-scale jobs can raise cloud bills. For organizations already on the cloud, ELT is often simpler to manage. Companies on older, on-premises systems may stick with ETL to avoid overloading their databases with transformation work.

Latency (Data Freshness):

ETL typically runs in batch processes, so data becomes available only after each batch’s transformations finish. ELT loads data quickly, which lets you query the raw data almost immediately and apply transformations as needed. If near-real-time access matters, ELT usually gets fresh data to analysts sooner.

To summarize these differences at a high level, here is a comparison table outlining ETL vs ELT across key dimensions:

| Aspect | ETL (Extract, Transform, Load) | ELT (Extract, Load, Transform) |
|---|---|---|
| Data Transformation | Happens before loading into target. Data is transformed in a staging area or ETL tool, then loaded into the data warehouse. | Happens after loading into target. Raw data is loaded into the data warehouse or lake, then transformed in place (inside the target system). |
| Processing Performance | Can be slower for large volumes, since transformation must complete before loading. Often uses batch processing. | Typically faster initial loading. Leverages the scalable power of the target system for transformation, handling large datasets efficiently (parallel processing). |
| Scalability | May require significant ETL server resources to handle very large data. Scaling up can be costly and complex (limited by ETL tool capacity). | Highly scalable with cloud data platforms – can handle large volumes of structured or unstructured data by using cloud compute on demand. Easier to scale storage/compute as data grows. |
| Data Volume & Variety | Best suited for smaller to medium, structured datasets where upfront schema is known. Struggles with very large or highly varied data because all data must fit a predefined schema before loading. | Ideal for big data and a mix of data types. Can load structured, semi-structured, and unstructured data. Schema can be applied later, so it adapts well to diverse or evolving datasets. |
| Schema Approach | Schema-on-write: schema is defined upfront; data is molded to that schema during transformation. The warehouse only contains curated, schema-compliant data. | Schema-on-read: data is stored in raw form. Schema is applied when reading/using the data. This preserves all original data and allows multiple schemas for different use cases. |
| Data Quality Control | Data quality is enforced before load – cleansing, validation, and business rules in the ETL stage ensure only high-quality data enters the warehouse. Less risk of bad data in target. | Data quality is handled after load – raw data (good or bad) is in the warehouse. Requires strong monitoring and cleaning processes in the warehouse. (All original data is available, so issues can be fixed with new transform queries.) |
| Use of Storage | Typically loads only processed data, so storage contains just the final dataset (which may be smaller than raw). Does not retain all source data (anything filtered out or not required is dropped). | Stores all extracted data, including historical or “extra” attributes that might not be immediately used. This requires more storage, but allows retrospective analysis and re-transformation if needed since nothing is thrown away. |
| Tooling & Infrastructure | Often uses dedicated ETL tools (Informatica, Talend, etc.) or custom ETL scripts. Involves managing ETL servers and pipelines. Integration is managed by IT/engineering teams. | Often implemented with ELT services or simple loaders plus using the data warehouse’s capabilities (e.g. SQL transformations). Fewer moving parts – mainly the data warehouse and orchestration scripts. Can enable more self-service for analysts (they can write transformation queries). |
| Security & Compliance | Allows filtering or masking sensitive data before it enters the target system. Useful for compliance since only approved data lands in the warehouse. | Needs controls in place because raw data (potentially sensitive) is loaded into the target. Requires robust security on the data lake/warehouse (access controls, encryption) and policies to handle sensitive fields after loading (e.g. transform to mask or segregate them). |
| Maintenance & Flexibility | Changes to source or schema require updating the ETL pipeline. If you need new insights, you might have to re-extract and re-transform data (since raw data wasn’t retained). ETL pipelines can be rigid but are well-understood with lots of documentation (mature technology). | More flexible to change. If you need a new report or discover an issue in transformation, you already have the raw data loaded – just write a new transformation in SQL and you’re done (no re-extract needed). Easier to iterate and evolve. However, ELT as a practice is newer, and teams must establish good governance to manage the ever-growing raw data. |
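The Security & Compliance row is easiest to see in code. In this ETL-style sketch, sensitive fields are masked during the transform step, so the warehouse never stores the full card number or the plain email. The masking policy, the field names, and `SECRET_KEY` are hypothetical. A keyed hash (HMAC) is used instead of a plain hash so that common emails can’t be recovered simply by hashing guesses. In practice the key would come from a secrets manager.

```python
import hashlib
import hmac
import sqlite3

# Hypothetical key for illustration only; a real pipeline would load it from a secrets store.
SECRET_KEY = b"example-only-key"


def mask_record(record):
    """Mask sensitive fields in the transform step, before loading (ETL-style).

    Illustrative policy: keep only the last four digits of the card number and
    replace the email with a keyed SHA-256 hash so rows can still be joined on it.
    """
    email = record["email"].strip().lower().encode("utf-8")
    return (
        record["customer_id"],
        "*" * 12 + record["card_number"][-4:],
        hmac.new(SECRET_KEY, email, hashlib.sha256).hexdigest(),
    )


source = [{"customer_id": 1, "card_number": "4111111111111111", "email": "Ann@Example.com"}]

warehouse = sqlite3.connect(":memory:")  # stands in for the target warehouse
warehouse.execute("CREATE TABLE customers (customer_id INTEGER, card TEXT, email_hash TEXT)")
warehouse.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)", [mask_record(r) for r in source]
)

print(warehouse.execute("SELECT customer_id, card FROM customers").fetchall())
```

In an ELT setup, the same masking would typically run as a SQL transformation after load. The raw table would then need its own access controls, because the unmasked values do land in the warehouse.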

As shown above, ETL and ELT differ in everything from where the work happens to how they handle data growth. Next, we’ll explore the pros and cons of each approach in detail, and later, we’ll discuss real-world use cases and how to decide which approach is right for you.

ETL vs. ELT: Pros and Cons

Every technology choice has advantages and drawbacks. Depending on your needs, you may favor the reliability of ETL or the flexibility of ELT. Let’s break down the specific pros and cons of each:

ETL Pros

- Delivers high-quality, ready-to-use data by cleaning, validating, and formatting it before it reaches the warehouse.

- Enforces a consistent schema-on-write, helping ensure standardized data structures for easier reporting and compliance.

- Offloads heavy transformation workloads from the destination system, preserving warehouse performance and query speed.

- Supports early compliance and privacy needs by masking or removing sensitive data before storage.

- Backed by a mature ecosystem of tools, offering robust scheduling, logging, and support for enterprise-grade pipelines.

ETL Cons

- Struggles to scale efficiently with high-volume, fast-changing data without investing in more compute or re-architecting.

- Introduces delays in data availability, as transformation must complete before data is accessible for analysis.

- Limits flexibility for new business questions, since only pre-selected data reaches the warehouse.

- Requires upfront effort in modeling and tooling, adding cost and complexity to pipeline design and maintenance.

- Carries a risk of accidental data loss, especially if transformation logic filters out rows or fields prematurely.

ELT Pros

- Enables fast data ingestion by loading raw data first, allowing near real-time access and quicker iteration.

- Takes advantage of cloud-native scalability, running large-scale transformations directly within the warehouse.

- Offers greater flexibility and agility, as raw data can be re-queried and transformed without revisiting the source.

- Simplifies pipeline design by removing the need for a separate transformation layer outside the warehouse.

- Retains detailed, granular data that supports deeper analysis, audit trails, and improved visibility.

- Reduces rework when logic changes, allowing teams to rerun transformations without re-pulling data.
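Because ELT keeps the raw data, a change in business logic can be handled by redefining the transformation instead of re-extracting. Here is a minimal sketch, using a hypothetical `raw_events` table, a made-up refund rule, and in-memory SQLite in place of a warehouse. The view is dropped and recreated, and the same retained rows produce the new numbers.

```python
import sqlite3

warehouse = sqlite3.connect(":memory:")  # stands in for the warehouse
# Raw events were loaded once and are retained in full.
warehouse.execute("CREATE TABLE raw_events (user_id INTEGER, event TEXT, amount REAL)")
warehouse.executemany(
    "INSERT INTO raw_events VALUES (?, ?, ?)",
    [(1, "purchase", 20.0), (1, "refund", -5.0), (2, "purchase", 8.0)],
)

# Version 1 of the (hypothetical) business logic: revenue counts purchases only.
warehouse.execute("""
    CREATE VIEW revenue_by_user AS
    SELECT user_id, SUM(amount) AS revenue
    FROM raw_events WHERE event = 'purchase' GROUP BY user_id
""")
v1 = warehouse.execute("SELECT * FROM revenue_by_user ORDER BY user_id").fetchall()

# The rule changes: refunds should now net against revenue.
# Only the SQL is redefined; nothing is re-extracted from the source system.
warehouse.execute("DROP VIEW revenue_by_user")
warehouse.execute("""
    CREATE VIEW revenue_by_user AS
    SELECT user_id, SUM(amount) AS revenue
    FROM raw_events WHERE event IN ('purchase', 'refund') GROUP BY user_id
""")
v2 = warehouse.execute("SELECT * FROM revenue_by_user ORDER BY user_id").fetchall()

print(v1)
print(v2)
```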

ELT Cons

- Requires strong governance practices, or raw data can turn into a messy, hard-to-manage data swamp.

- Exposes unclean data to end users, risking confusion or misinterpretation if raw layers aren’t clearly separated.

- Puts computational strain on the warehouse, potentially increasing costs and affecting performance.

- Historically lacked mature tools, relying on manual SQL or custom scripts to manage transformations.

- Raises security and compliance risks, as sensitive raw data lands in the warehouse before transformation-stage safeguards such as masking are applied.

ETL vs. ELT: Use Cases

How do ETL and ELT play out in real-world scenarios? Below are some common use cases that illustrate when each approach is typically used:

ETL in a Traditional Enterprise Data Warehouse

A global retail company collects transactional data from POS systems, inventory, and finance applications. Each night, it uses ETL to extract this data, apply business rules (e.g., currency conversion, data filtering), and load the result into a relational data warehouse. The output is clean, unified tables ready for reporting, so executives see consistent, accurate dashboards each morning. ETL works well when data volumes are manageable, schemas are known, and quality is critical. These conditions are common in industries like banking and healthcare, where sensitive data must be scrubbed or masked before loading.

ELT for Cloud Analytics & Big Data Pipelines

A media streaming service collects millions of semi-structured logs per hour. Using ELT, raw event data is streamed directly into a cloud lake or warehouse (e.g., S3, Snowflake). Transformations—such as parsing JSON and generating metrics like “views_per_movie_per_day”—are performed inside the warehouse. This allows near real-time insights and adaptability as new metrics evolve. ELT is ideal here, where high volume, speed, and machine learning use cases make pre-load transformation impractical.
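The JSON-parsing step can be sketched with SQL that runs inside the store holding the raw events. In this sketch, in-memory SQLite stands in for the warehouse, and the event fields (`type`, `movie_id`, `ts`) are assumptions. The query relies on SQLite’s `json_extract` function, which is built in since SQLite 3.38; earlier builds need the JSON1 extension. Warehouses such as Snowflake have their own JSON syntax, so treat this as an outline of the transformation rather than portable code.

```python
import json
import sqlite3

warehouse = sqlite3.connect(":memory:")  # stands in for the cloud warehouse
# Raw, semi-structured playback events land untouched as JSON text.
warehouse.execute("CREATE TABLE raw_events (payload TEXT)")
events = [  # hypothetical event shape
    {"type": "view", "movie_id": "m1", "ts": "2024-05-01T20:15:00"},
    {"type": "view", "movie_id": "m1", "ts": "2024-05-01T21:40:00"},
    {"type": "pause", "movie_id": "m1", "ts": "2024-05-01T21:41:00"},
    {"type": "view", "movie_id": "m2", "ts": "2024-05-02T09:05:00"},
]
warehouse.executemany("INSERT INTO raw_events VALUES (?)", [(json.dumps(e),) for e in events])

# Transform inside the warehouse: parse the JSON with SQL, then aggregate.
# Assumes SQLite's JSON functions are available (built in since SQLite 3.38).
warehouse.execute("""
    CREATE VIEW views_per_movie_per_day AS
    SELECT json_extract(payload, '$.movie_id') AS movie_id,
           DATE(json_extract(payload, '$.ts')) AS view_date,
           COUNT(*) AS views
    FROM raw_events
    WHERE json_extract(payload, '$.type') = 'view'
    GROUP BY movie_id, view_date
""")
print(warehouse.execute(
    "SELECT * FROM views_per_movie_per_day ORDER BY movie_id, view_date"
).fetchall())
```

When a new metric is needed, another view is added over the same `raw_events` table, and the ingestion path stays unchanged.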

ETL for Data Migration and Integration

When consolidating older systems, a company performs a one-time ETL process—extracting customer data from two platforms, aligning formats (e.g., addresses), and loading it into a new database. ETL ensures data consistency before it enters the new environment. This is common during system upgrades, mergers, or CRM/ERP implementations where legacy data needs to be cleaned and standardized.
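A one-time migration like this often comes down to per-source mapping functions that reshape each legacy export into a single target format before loading. The two export layouts, the suffix rules, and the de-duplication rule (the later source wins when both platforms share an email) are hypothetical. In-memory SQLite stands in for the new database.

```python
import sqlite3

# Hypothetical exports from two legacy platforms with different address layouts.
crm_a = [{"email": "ann@example.com", "street": "12 main street", "city": "springfield", "zip": "62701"}]
crm_b = [{"Email": "BOB@EXAMPLE.COM", "Address": "9 oak avenue|peoria|61602"}]

# Illustrative suffix rules; a real migration would use a fuller address standard.
SUFFIXES = {"street": "St", "st": "St", "avenue": "Ave", "ave": "Ave"}


def normalize_street(street):
    """Title-case each word and standardize common street suffixes."""
    return " ".join(SUFFIXES.get(w.lower(), w.title()) for w in street.split())


def from_a(rec):
    """Map platform A's separate fields to the target layout."""
    return (rec["email"].lower(), normalize_street(rec["street"]), rec["city"].title(), rec["zip"])


def from_b(rec):
    """Split platform B's pipe-delimited address, then map it to the target layout."""
    street, city, zip_code = rec["Address"].split("|")
    return (rec["Email"].lower(), normalize_street(street), city.title(), zip_code)


rows = [from_a(r) for r in crm_a] + [from_b(r) for r in crm_b]
# De-duplicate on email; if both platforms hold a customer, the later source wins (assumed rule).
merged = {row[0]: row for row in rows}

target = sqlite3.connect(":memory:")  # stands in for the new database
target.execute("CREATE TABLE customers (email TEXT PRIMARY KEY, street TEXT, city TEXT, zip TEXT)")
target.executemany("INSERT INTO customers VALUES (?, ?, ?, ?)", list(merged.values()))
print(target.execute("SELECT * FROM customers ORDER BY email").fetchall())
```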

ELT for Data Science and Sandbox Environments

A data science team explores raw datasets like sales, support tickets, and social media in a data lake. With ELT, the team transforms and joins data on demand using SQL or notebooks—creating custom features such as “tickets per customer in the last month.” Unlike ETL, this approach offers agility, enabling experimentation without waiting for new pipelines. ELT supports open-ended analysis where questions evolve over time.
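In an ELT setup, a feature like “tickets per customer in the last month” can be a single on-demand query over raw data. The `raw_tickets` table and the fixed `AS_OF` date are illustrative assumptions; the fixed date keeps the example reproducible. In-memory SQLite stands in for the lake or warehouse.

```python
import sqlite3

lake = sqlite3.connect(":memory:")  # stands in for the lake/warehouse holding raw data
lake.execute("CREATE TABLE raw_tickets (ticket_id INTEGER, customer_id INTEGER, opened_at TEXT)")
lake.executemany(
    "INSERT INTO raw_tickets VALUES (?, ?, ?)",
    [(1, 10, "2024-05-20"), (2, 10, "2024-05-28"), (3, 11, "2024-03-02"), (4, 11, "2024-05-30")],
)

AS_OF = "2024-06-01"  # fixed reference date so the example is reproducible

# Feature built on demand with SQL; no new pipeline is needed.
feature = lake.execute(
    """
    SELECT customer_id, COUNT(*) AS tickets_last_month
    FROM raw_tickets
    WHERE opened_at >= DATE(?, '-1 month') AND opened_at < ?
    GROUP BY customer_id
    ORDER BY customer_id
    """,
    (AS_OF, AS_OF),
).fetchall()
print(feature)
```

Customers with no tickets in the window simply don’t appear. A real feature table would usually left-join from a customer list to fill in zeros.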

Hybrid Approach – Using Both

Many organizations adopt both ETL and ELT. For example, an e-commerce company uses ELT for raw clickstream data and ETL for structured product catalogs. Inside the warehouse, both datasets are joined for analysis. This hybrid strategy balances the speed and scale of ELT with the control and quality of ETL—proving that both methods can complement each other based on the use case.

When Should You Use ELT or ETL?

Choosing between ETL and ELT depends on your data architecture, scale, and business goals. Here’s a simplified guide:

Choose ETL if your pipelines need strict cleaning or compliance upfront, or if you’re working with structured, well-understood data at moderate scale. It’s ideal when schemas and business rules must be applied before storage—like in financial reporting or regulated industries where raw data can’t be landed. ETL is also useful in on-prem environments with limited compute, letting you offload transformation outside the warehouse. If you’re doing nightly CRM loads or batch reporting, ETL ensures data is shaped and validated before it hits the dashboard. And if your team already uses ETL tools, sticking with them can offer stability and leverage advanced transformation logic not easily done in SQL.

Choose ELT if you’re handling high volumes, diverse data, or need faster access. ELT thrives in cloud-first setups and modern data stacks. If you’re streaming logs, parsing JSON, or collecting data from many sources into a central warehouse or lake, ELT offers better scalability. It’s also a fit when you need to preserve raw data for data science or on-demand analytics. Real-time use cases benefit too—you load immediately and transform later. ELT also simplifies architecture: fewer tools, more agility, and greater self-service for business users. Just be sure to layer in governance and observability to manage quality post-ingestion.

Considerations for Transition or Hybrid:

If you’re moving from ETL to ELT, start gradually—replicate raw data into your warehouse while continuing ETL in parallel. Transition transformations over time, ensuring cloud security, SQL skills, and validation checks are in place. A hybrid model works well: use ETL for high-trust master data that needs cleaning, and ELT for fast-moving or exploratory datasets. There’s no one-size-fits-all. The best approach aligns with the use case and team capability, ensuring reliable, timely data delivery.
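One common way to decide when a parallel run is stable is to reconcile the outputs of the old and new pipelines. The helper below is a hypothetical sketch. It compares row counts and totals per key between a legacy ETL table and a new ELT table, then returns only the keys that disagree. Table and column names are interpolated into the SQL, so they must be trusted identifiers, not user input.

```python
import sqlite3


def reconcile(conn, legacy_table, new_table, key, measure):
    """Return (key, legacy_rows, new_rows, legacy_total, new_total) for keys that disagree.

    Identifiers are interpolated into the SQL, so they must be trusted names.
    """
    sql = f"""
        SELECT k,
               SUM(src = 'legacy') AS legacy_rows,
               SUM(src = 'new') AS new_rows,
               SUM(CASE WHEN src = 'legacy' THEN m ELSE 0 END) AS legacy_total,
               SUM(CASE WHEN src = 'new' THEN m ELSE 0 END) AS new_total
        FROM (
            SELECT {key} AS k, {measure} AS m, 'legacy' AS src FROM {legacy_table}
            UNION ALL
            SELECT {key}, {measure}, 'new' FROM {new_table}
        )
        GROUP BY k
        HAVING SUM(src = 'legacy') <> SUM(src = 'new')
            OR ABS(SUM(CASE WHEN src = 'legacy' THEN m ELSE 0 END)
                   - SUM(CASE WHEN src = 'new' THEN m ELSE 0 END)) > 1e-9
        ORDER BY k
    """
    return conn.execute(sql).fetchall()


warehouse = sqlite3.connect(":memory:")  # stands in for the warehouse
warehouse.execute("CREATE TABLE legacy_sales (region TEXT, amount REAL)")  # old ETL output
warehouse.execute("CREATE TABLE elt_sales (region TEXT, amount REAL)")     # new ELT output
warehouse.executemany("INSERT INTO legacy_sales VALUES (?, ?)", [("east", 100.0), ("west", 50.0)])
warehouse.executemany("INSERT INTO elt_sales VALUES (?, ?)", [("east", 100.0), ("west", 45.0)])

print(reconcile(warehouse, "legacy_sales", "elt_sales", "region", "amount"))
```

An empty result across several consecutive cycles is one reasonable signal that the ELT version is ready to take over that dataset.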

Whichever path you choose, invest in documentation, monitor quality closely, and evolve your strategy as your data needs grow.

Conclusion

ETL and ELT are both powerful strategies for moving and preparing data, but the right choice depends on your organization’s infrastructure, data volume, and business needs. ETL excels when data quality, compliance, and governance are top priorities, especially in regulated industries or when working with structured datasets. In contrast, ELT leverages modern cloud platforms to deliver scalability, speed, and flexibility, making it a natural fit for big data and dynamic analytical use cases.

As more organizations shift to cloud-native architectures, ELT has gained popularity for its agility and ability to handle raw data at scale. However, this flexibility also increases the need for strong data observability and quality controls post-load.

Ultimately, the best strategy may not be a strict ETL vs. ELT decision — many teams successfully combine both based on the data type, latency needs, and downstream consumers. Understanding the strengths and trade-offs of each method helps ensure your data pipeline remains efficient, scalable, and ready for the future.

FAQs

What is the difference between ETL and ELT?

- ETL extracts data, transforms it before loading into the warehouse, requiring a fixed schema and upfront cleaning.

- ELT extracts and loads raw data first, then transforms it inside the warehouse, offering more flexibility but relying on the warehouse for processing and quality checks.

- ETL suits batch jobs and regulated environments; ELT is preferred for cloud-based, large-scale data.

When should you use ELT instead of ETL?

Use ELT when you need scalability, speed, and flexibility—especially with large or streaming data in cloud warehouses like Snowflake or BigQuery. ELT allows fast data loading and multiple transformations later, making it ideal for data science and real-time analytics. ETL works better for on-premises systems or when strict upfront data validation is required.

What are the key considerations for transitioning from ETL to ELT?

Key points to consider:

- Technology: Ensure your warehouse can handle raw data and transformations.

- Data Governance: Organize data into layers (raw, staging, production).

- Skills: Train teams on SQL and cloud tools.

- Data Quality: Implement quality checks inside the warehouse.

- Security: Enforce access controls and compliance.

- Migration: Move gradually, running ETL and ELT in parallel until stable.

With careful planning, ELT can simplify maintenance and speed up the development of data pipelines and analytics workflows.