Data observability is rapidly becoming a critical discipline for modern organizations striving to maintain data reliability, quality, and trust across increasingly complex and distributed ecosystems. It ensures that data teams have continuous visibility into the state of data, pipelines, infrastructure, usage, and costs—helping them detect issues early and resolve them before they impact business outcomes. Yet, as data environments grow larger and more intricate, traditional observability methods struggle to keep pace.

Agentic AI is emerging as a transformative force that extends data observability beyond passive monitoring into autonomous, intelligent management of data trust and reliability. AI agents perceive the health of the ecosystem, reason about issues, adapt to changes, and take proactive remediation steps across all data observability pillars. Powered by advances in large language models and multi-agent orchestration, agentic AI gives organizations real-time, context-rich insights and self-healing capabilities, enabling resilient, efficient, and trustworthy data operations that meet the demands of modern analytics and AI applications.

This post explores what agentic AI means in the context of data observability, shows how it transforms the five key pillars of observability, and examines its impact on different data team roles, highlighting how this technology is shaping the future of autonomous, intelligent data management.

What Is Agentic AI in the Context of Data Observability?

Agentic AI in the context of data observability refers to intelligent AI systems that go beyond detecting issues: they act autonomously to analyze, adapt to, and resolve problems within complex data ecosystems. Unlike traditional AI or automation tools that follow fixed rules or generate alerts, AI agents can plan multi-step actions, collaborate with other agents, and learn continuously from outcomes to optimize the entire data workflow.

Traditional observability systems focus on monitoring metrics, logs, and anomalies to inform data teams; agentic AI turns observability into an active, self-managing process. Agents continuously monitor data quality, pipeline health, infrastructure, usage patterns, and cost, and take proactive, context-aware actions to maintain system reliability and business alignment. This shift matters now because multi-agent frameworks have matured while modern data environments have grown in both complexity and scale.

In essence, agentic AI acts as a network of autonomous caretakers for your data ecosystem, moving from passive detection to proactive data trust management. This enables organizations to achieve resilient, trustworthy, and efficient data operations capable of supporting the fast-paced demands of AI and analytics-driven enterprises.
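To make the idea of agents that "learn continuously from outcomes" more concrete, here is a minimal Python sketch of how an agent might choose a remediation, run it, and update its preferences based on whether it worked. Everything in it is hypothetical: the issue types, the action names, and the simple success-rate bookkeeping are illustrative assumptions, not any product's API or a prescribed algorithm.

```python
from collections import defaultdict
from collections.abc import Callable
from dataclasses import dataclass, field


@dataclass
class RemediationAgent:
    """Hypothetical agent that picks remediations and learns from their outcomes."""

    # Assumed playbook: candidate actions per issue type, in default preference order.
    playbook: dict[str, list[str]]
    # Running [successes, attempts] per (issue, action) pair.
    stats: defaultdict = field(default_factory=lambda: defaultdict(lambda: [0, 0]))

    def success_rate(self, issue: str, action: str) -> float:
        successes, attempts = self.stats[(issue, action)]
        # Untried actions get an optimistic rate of 1.0 so each is tried at least once.
        return successes / attempts if attempts else 1.0

    def choose_action(self, issue: str) -> str:
        # Best observed success rate wins; max() keeps playbook order on ties.
        return max(self.playbook[issue], key=lambda a: self.success_rate(issue, a))

    def record_outcome(self, issue: str, action: str, succeeded: bool) -> None:
        entry = self.stats[(issue, action)]
        entry[0] += int(succeeded)
        entry[1] += 1

    def handle(self, issue: str, execute: Callable[[str], bool]) -> str:
        """Choose an action, run it via the caller-supplied `execute`, and learn from the result."""
        action = self.choose_action(issue)
        self.record_outcome(issue, action, execute(action))
        return action
```

For example, with a playbook of `{"pipeline_failure": ["rerun_job", "reprocess_partition"]}`, a failed `rerun_job` drops its success rate to 0.0, so the next `pipeline_failure` is handled with `reprocess_partition` instead. A real agent would also need guardrails on which actions it may run unattended.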

Impact of Agentic AI on Data Observability

Today’s AI-powered observability tools are great at spotting anomalies and surfacing insights. But they’re still reactive—someone has to step in to investigate and fix the issue. Agentic AI takes observability a step further. It brings autonomy, context-awareness, and continuous learning to every layer of the stack. Instead of just alerting you, it learns patterns, adapts in real time, and even initiates corrective actions.

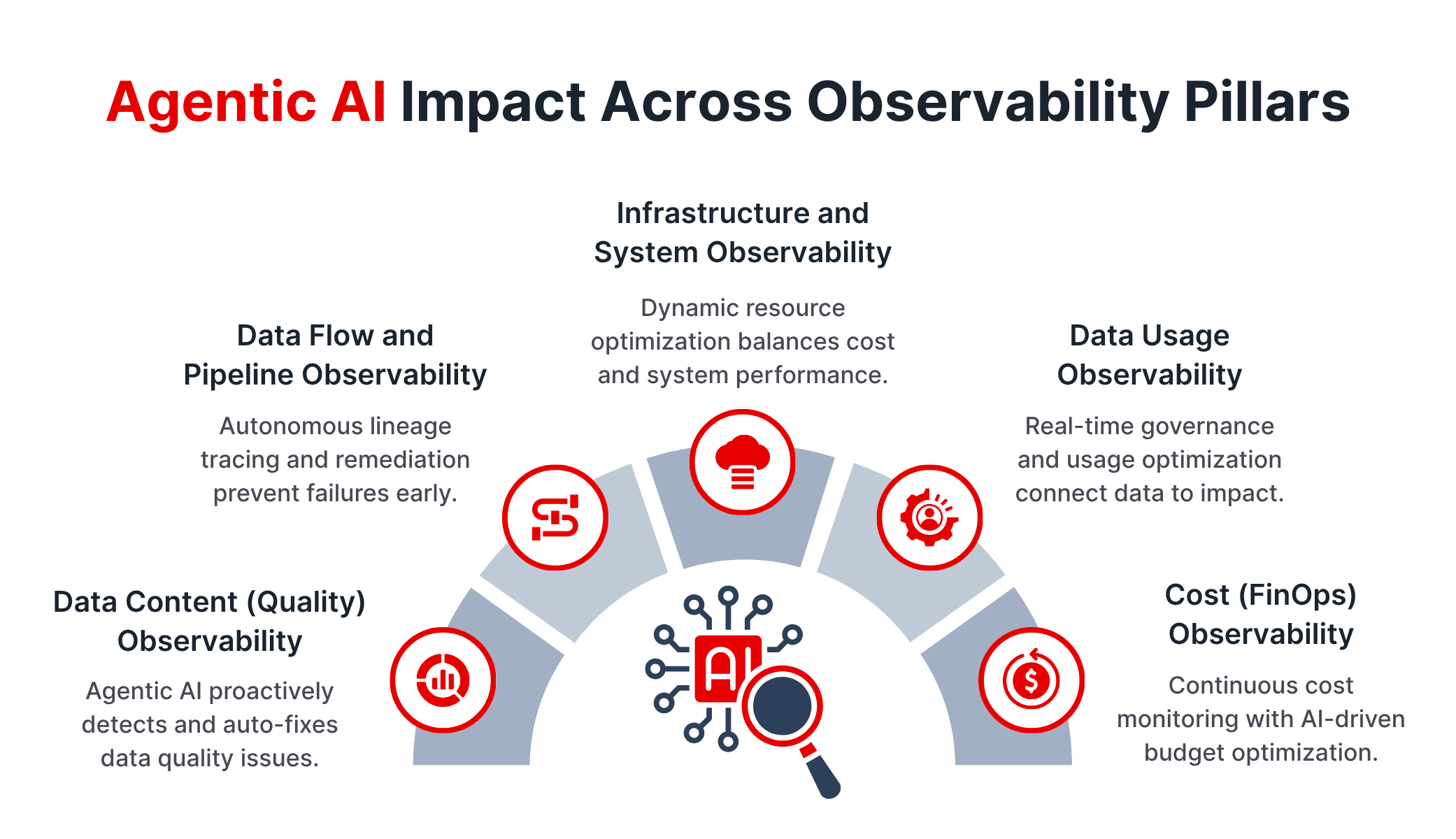

Let’s walk through the five key pillars of observability and see how agentic AI reshapes each one.

1. Data Content (Quality) Observability

Traditional Approach:

Data content checks have traditionally relied on predefined rules and scheduled tests. Teams monitor for completeness (no missing values), validity (correct formats), uniqueness, and consistency. Schema changes and outliers are flagged through anomaly detection, and business rules, such as “order_date can’t be in the future”, trigger alerts. From there, investigation is manual. These methods work, but they are reactive and put most of the effort on people.
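A traditional rule-based check of this kind might look like the minimal Python sketch below, covering completeness, a format check, uniqueness, and the order_date business rule mentioned above. The column names other than `order_date` and the loose email pattern are hypothetical assumptions chosen for illustration.

```python
import re
from datetime import date

# Deliberately loose format check; real validation rules would be stricter.
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")
REQUIRED = ("order_id", "email", "order_date")  # hypothetical required columns


def run_quality_checks(rows: list[dict], today: date) -> dict[str, list[int]]:
    """Return, for each rule, the indices of the rows that violate it."""
    failures: dict[str, list[int]] = {
        "completeness": [], "validity": [], "uniqueness": [], "business_rule": []
    }
    seen_ids = set()
    for i, row in enumerate(rows):
        # Completeness: every required column is present and non-null.
        if any(row.get(col) is None for col in REQUIRED):
            failures["completeness"].append(i)
        # Validity: email, when present, matches the expected format.
        email = row.get("email")
        if email is not None and not EMAIL_RE.fullmatch(email):
            failures["validity"].append(i)
        # Uniqueness: order_id must not repeat across rows.
        order_id = row.get("order_id")
        if order_id is not None:
            if order_id in seen_ids:
                failures["uniqueness"].append(i)
            seen_ids.add(order_id)
        # Business rule from the text: order_date can't be in the future.
        order_date = row.get("order_date")
        if order_date is not None and order_date > today:
            failures["business_rule"].append(i)
    return failures
```

The output only tells a person which rows to look at; deciding why they failed and what to do about them is still manual in this model.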

With Agentic AI:

Agentic AI moves quality checks from reactive to proactive. Agents learn evolving patterns in the data, tune validation checks on the fly, and pick up subtle degradations before they spread. When they catch an issue, they can launch fixes automatically—cleansing bad rows, reconciling schemas, or triggering upstream adjustments. By reducing manual work and adapting in real time, agentic AI keeps data trustworthy and cuts down on downstream risk.
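Reconciling schemas starts with knowing exactly what changed. The minimal sketch below assumes schemas are available as hypothetical column-to-type mappings. The policy in `reconciliation_plan` (apply additive changes automatically, escalate removals and type changes) is an illustrative assumption about what an agent might be allowed to do on its own, not a standard.

```python
from dataclasses import dataclass


@dataclass
class SchemaDiff:
    added: list[str]
    removed: list[str]
    retyped: dict[str, tuple[str, str]]  # column -> (expected type, observed type)


def diff_schema(expected: dict[str, str], observed: dict[str, str]) -> SchemaDiff:
    """Compare column -> type mappings for an expected and an observed schema."""
    common = sorted(expected.keys() & observed.keys())
    return SchemaDiff(
        added=sorted(observed.keys() - expected.keys()),
        removed=sorted(expected.keys() - observed.keys()),
        retyped={c: (expected[c], observed[c]) for c in common if expected[c] != observed[c]},
    )


def reconciliation_plan(diff: SchemaDiff) -> str:
    """Hypothetical policy: additive changes are safe to apply, breaking ones escalate."""
    if diff.removed or diff.retyped:
        return "escalate"  # may break downstream consumers
    if diff.added:
        return "auto_evolve"  # extend the target schema with the new columns
    return "no_change"
```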

2. Data Flow and Pipeline Observability

Traditional Approach:

Observability across data pipelines involves monitoring job schedules, success/failure statuses, and performance metrics like latency and throughput. Teams rely on alerts for pipeline failures or backlogs, manually investigating lineage and dependencies to find root causes. Pipeline observability is often siloed, making it hard to correlate cascading failures or predict downstream impacts. Data volume monitoring detects drops or spikes, which can signal data loss or duplication, but remediating these issues still depends largely on human response.
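Volume monitoring of this kind is often a statistical comparison against recent history. A minimal sketch, assuming daily row counts are already collected and that a z-score over a trailing window is an acceptable baseline; the threshold of 3.0 is an illustrative default, and a real deployment would likely also need to account for weekly seasonality.

```python
from statistics import mean, stdev
from typing import Optional


def volume_anomaly(history: list[int], today: int, z_threshold: float = 3.0) -> Optional[str]:
    """Classify today's row count as 'spike', 'drop', or None against recent history."""
    if len(history) < 2:
        return None  # stdev needs at least two points
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        # Perfectly flat history: any change at all is unusual.
        if today == mu:
            return None
        return "spike" if today > mu else "drop"
    z = (today - mu) / sigma
    if z >= z_threshold:
        return "spike"  # possible duplication or replayed loads
    if z <= -z_threshold:
        return "drop"  # possible data loss or a partial load
    return None
```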

With Agentic AI:

Agentic AI transforms pipeline observability through autonomous, end-to-end lineage tracing and causal analysis. AI agents collaborate to detect pipeline degradations or failures early and isolate failure points precisely across complex, distributed workflows. They recommend multi-step remediations, such as job reruns, data reprocessing, or priority adjustments, and, where policy allows, execute them without waiting for manual approval. This proactive orchestration keeps pipelines running by resolving issues quickly, reducing downtime and operational burden while delivering reliable, timely data.
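Lineage tracing and a basic form of causal analysis can be approximated with two graph walks. A minimal sketch, assuming lineage is available as a hypothetical adjacency map from each asset to its direct consumers, and that earlier checks have already marked some assets as unhealthy. The root-cause rule used here (an unhealthy asset whose direct parents are all healthy) is a simple heuristic, not a full causal model.

```python
from collections import deque


def downstream_impact(lineage: dict[str, list[str]], failed: str) -> list[str]:
    """Breadth-first walk listing every asset that consumes `failed`, directly or transitively."""
    seen, impacted, queue = {failed}, [], deque([failed])
    while queue:
        for child in lineage.get(queue.popleft(), []):
            if child not in seen:
                seen.add(child)
                impacted.append(child)
                queue.append(child)
    return impacted


def root_cause_candidates(lineage: dict[str, list[str]], unhealthy: set[str], symptom: str) -> list[str]:
    """Unhealthy ancestors of `symptom` (or the symptom itself) whose direct parents are all healthy."""
    parents: dict[str, list[str]] = {}
    for src, consumers in lineage.items():
        for dst in consumers:
            parents.setdefault(dst, []).append(src)
    # Collect the symptom plus all of its upstream ancestors.
    ancestry, queue = {symptom}, deque([symptom])
    while queue:
        for parent in parents.get(queue.popleft(), []):
            if parent not in ancestry:
                ancestry.add(parent)
                queue.append(parent)
    # The most upstream unhealthy nodes are where the problem most likely starts.
    return sorted(
        node for node in ancestry
        if node in unhealthy and not any(p in unhealthy for p in parents.get(node, []))
    )
```

For instance, if a dashboard, the mart feeding it, and one staging table are all unhealthy while the raw sources are fine, `root_cause_candidates` points at the staging table, and `downstream_impact` on that table lists everything a rerun would need to refresh.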

3. Infrastructure and System Observability

Traditional Approach:

Infrastructure observability monitors system resources like CPU, memory, disk I/O, and network throughput, typically through static threshold-based alerts. Teams analyze logs and service uptime metrics to detect hardware or network issues that could degrade data pipeline performance. Scalability is monitored manually through trend analysis to plan capacity expansions. These metrics usually reflect isolated environments, making it hard to get cohesive views across multi-cloud or hybrid platforms.

With Agentic AI:

Agentic AI makes infrastructure observability adaptive. Agents continuously correlate resource utilization with pipeline health and business outcomes, detecting bottlenecks or failures in real time. They autonomously optimize resource allocation, for example by scaling compute clusters, redistributing workloads, or decommissioning idle resources, balancing cost against performance as conditions change. Unified, agent-led visibility and automated tuning make multi-cloud and hybrid environments manageable, reducing operational waste and improving system reliability.
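The scaling decision itself can be as simple as a proportional rule. The sketch below applies the formula the Kubernetes Horizontal Pod Autoscaler documents, desired = ceil(current × currentMetric / targetMetric), to a hypothetical worker pool. The 70% utilization target, the tolerance band, and the pool bounds are illustrative assumptions.

```python
import math


def desired_workers(
    current: int,
    avg_utilization: float,
    target_utilization: float = 0.7,
    min_workers: int = 1,
    max_workers: int = 50,
    tolerance: float = 0.1,
) -> int:
    """Proportional scaling: size the pool so utilization moves toward the target."""
    ratio = avg_utilization / target_utilization
    # Ignore small deviations so the pool doesn't flap between sizes.
    if abs(ratio - 1.0) <= tolerance:
        return current
    desired = math.ceil(current * ratio)
    # Clamp to the allowed range (this also enforces a floor when demand drops to zero).
    return max(min_workers, min(max_workers, desired))
```

An agent would add context on top of this, for example holding capacity ahead of a known batch window, but the core arithmetic stays this simple.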

4. Data Usage Observability

Traditional Approach:

Usage observability focuses on understanding who is accessing data, how they are using it, and the impact of this usage across the organization. This includes access logs, query performance, and workload analytics—plus auditing and compliance checks. But the process is fragmented across tools, making insights siloed. Detecting unusual usage trends, such as a sudden spike or drop in dashboard usage, often relies on retrospective analysis, delaying response and remediation. In practice, usage observability has mostly supported compliance and audits rather than proactive optimization.

With Agentic AI:

Agentic AI makes usage monitoring far more strategic. Agents analyze user behavior alongside data changes and business context, enforcing governance policies in real time. They can block unauthorized access as it happens, tune slow queries, or recommend data model adjustments to improve performance. Beyond compliance, they connect usage patterns to business impact, highlighting who or what will be affected by data problems. That turns usage observability into a tool for governance, optimization, and smarter decision-making.
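Highlighting who or what will be affected by a data problem can start as a join between access logs and the set of assets the problem touches (for example, the output of a lineage walk). A minimal sketch; the log format of `(consumer, asset)` pairs is a hypothetical simplification, and ranking by query count is just one reasonable proxy for impact.

```python
from collections import Counter


def affected_consumers(access_log: list[tuple[str, str]], affected_assets: set[str]) -> list[tuple[str, int]]:
    """Rank users or dashboards by how often they queried the affected assets."""
    counts = Counter(consumer for consumer, asset in access_log if asset in affected_assets)
    # Most active consumers first; ties broken alphabetically for stable output.
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
```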

5. Cost (FinOps) Observability

Traditional Approach:

Cost observability has traditionally relied on aggregated cloud spend reports and manual cost allocation. Organizations track the usage and expense of cloud resources such as compute clusters, storage, and data processing jobs, and attribute them to teams or projects through retrospective analysis. Cost anomalies such as sudden spikes can be detected, but the process is typically reactive, so budget overruns often come to light only after the fact. Manually correlating financial spend with operational metrics is time-consuming and error-prone, limiting an organization’s ability to optimize resources proactively.

Furthermore, idle or underutilized resources may go unnoticed, causing unnecessary expenditure. Without tight integration between cost data and system performance, organizations struggle to balance stringent SLAs with cost-efficiency, hindering mature FinOps practices.

With Agentic AI:

Agentic AI brings real-time cost visibility tied directly to workloads and pipelines. Agents watch cloud spend as it happens, link it to specific jobs or queries, and flag inefficiencies like duplicate runs or oversized scans. They can suggest or trigger optimizations, such as resizing clusters, throttling non-critical jobs, or fine-tuning queries, to keep expenses aligned with SLAs and budgets. They also make chargebacks and budgeting more accurate by providing granular, team-level visibility. The payoff: tighter cost control, better ROI on data, and far fewer “hidden” expenses.
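Linking spend to jobs makes both team-level chargeback and duplicate-run detection straightforward. A minimal sketch, assuming each run record carries a hypothetical `input_fingerprint` (for example, a hash of the input partitions it read) and an already-computed cost; how those costs are obtained from a cloud bill is out of scope here.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class JobRun:
    job: str
    team: str
    input_fingerprint: str  # hypothetical: identifies the exact input the run processed
    cost_usd: float


def cost_by_team(runs: list[JobRun]) -> dict[str, float]:
    """Chargeback view: total spend attributed to each team."""
    totals: dict[str, float] = defaultdict(float)
    for run in runs:
        totals[run.team] += run.cost_usd
    return dict(totals)


def duplicate_runs(runs: list[JobRun]) -> list[JobRun]:
    """Runs that repeat an earlier run of the same job over identical input."""
    seen: set[tuple[str, str]] = set()
    dupes = []
    for run in runs:
        key = (run.job, run.input_fingerprint)
        if key in seen:
            dupes.append(run)
        seen.add(key)
    return dupes
```

Summing `cost_usd` over `duplicate_runs(...)` gives a direct estimate of spend that produced no new data.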

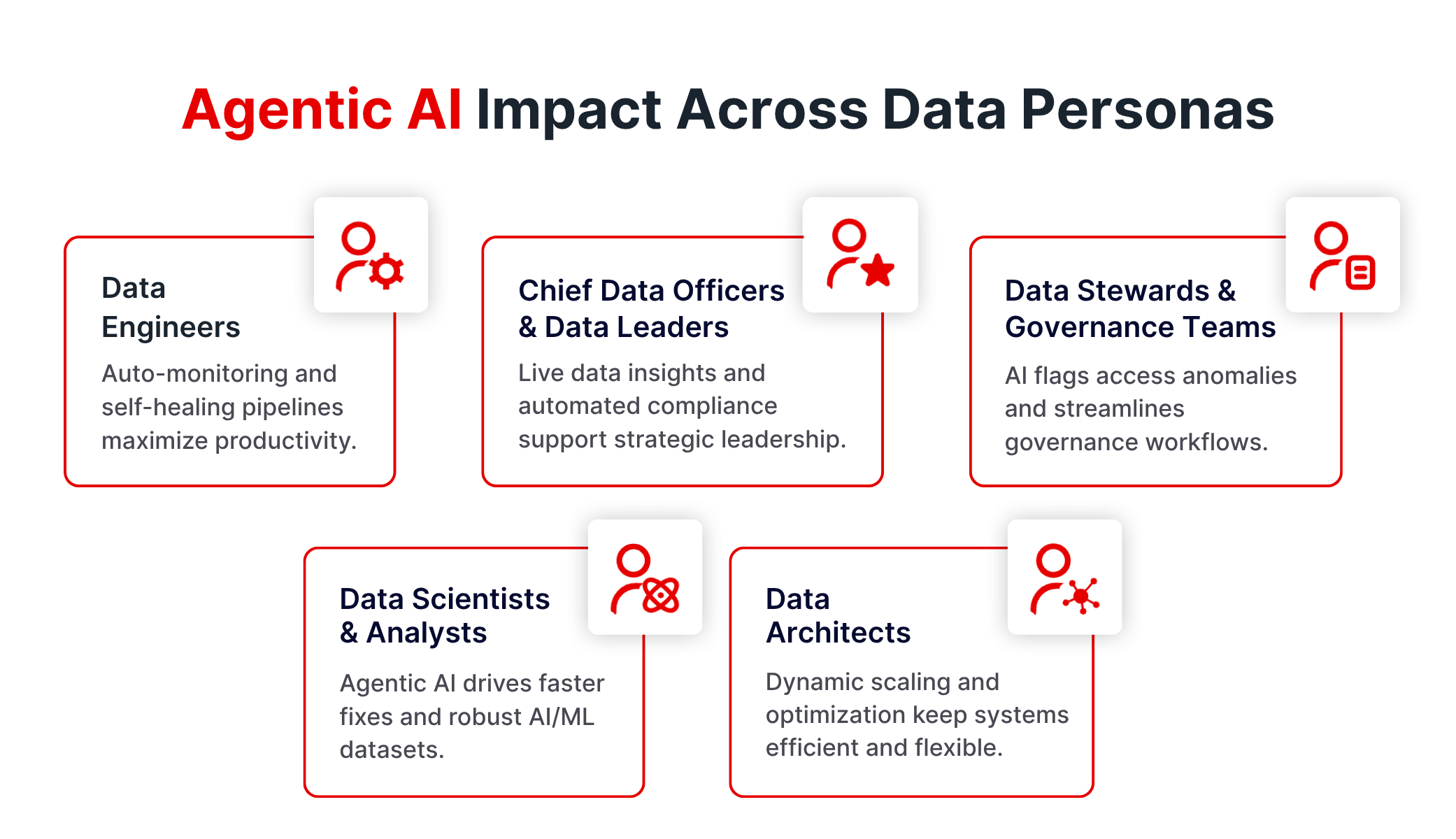

Impact of Agentic AI on Data Teams: Empowering Personas Across the Data Landscape

Agentic AI is reshaping the way data teams work. Routine workflows get automated, complex issues are handled faster, and teams get to spend their time on work that actually moves the needle—like strategy, innovation, and delivering insights.

Here’s how different data roles benefit when agentic AI steps in:

Data Engineers

Traditionally, engineers spend hours building and maintaining pipelines, fixing broken jobs, and handling schema changes. On top of that comes constant monitoring, debugging, and incident response.

With agentic AI, agents take over the grunt work. They watch pipelines in real time, spot failures, identify root causes, and even self-heal by rerunning jobs or adapting to schema shifts. They also tune queries and optimize resources automatically. The result? Engineers spend less time firefighting and more time designing new data products and scalable architectures.

Chief Data Officers (CDOs) and Data Leaders

For CDOs, the job usually means managing compliance, governance, and data strategy—often relying on static reports or slow manual audits to understand data health.

Agentic AI changes that. Intelligent agents continuously monitor data quality, pipeline performance, usage, and cost in real time. They flag risks before they escalate and automate compliance tasks, giving leaders a live view of business impact. That means faster decisions, fewer surprises, and a stronger foundation of trust—turning the data office into a truly strategic function.

Data Stewards and Governance Teams

Data stewards often act as policy enforcers—reviewing access controls, auditing usage, and chasing down misuse or violations. Most of it is manual, repetitive, and reactive.

With agentic AI, oversight becomes continuous. Agents watch access in real time, flag anomalies, and kick off compliance workflows automatically. Risks are mitigated as they arise, and stewards can shift their energy to refining governance frameworks and driving long-term strategy.

Data Scientists & Analysts

Analysts and scientists spend much of their time cleaning data, validating model inputs, and manually investigating data quality or pipeline issues that affect the accuracy of their analysis. It slows everything down.

Agentic AI flips the balance. Agents handle validation, catch quality problems early, and provide contextual root cause analysis. They can even auto-generate insights and summaries. Instead of firefighting, analysts focus on building models and delivering insights faster—speeding up experimentation and decision-making.

Data Architects

Architects design data platforms and infrastructure and typically plan capacity and scaling manually, based on historical trends and business forecasts.

Agentic AI draws on real-time infrastructure and usage observability to recommend, or autonomously implement, elastic scaling, cost optimizations, and architecture adjustments. This lets architects maintain performant, cost-efficient data environments that adapt fluidly to evolving workloads.

Conclusion

Agentic AI is reshaping data observability, moving it beyond reactive monitoring into a more autonomous and intelligent ecosystem. By embedding proactive, context-aware AI agents across every layer—data quality, pipelines, infrastructure, usage, and cost—organizations gain a new level of reliability, agility, and efficiency in their data operations. This shift allows data teams to spend less time firefighting and more time driving innovation, all while ensuring the trust in data that modern analytics and AI strategies depend on.

The real opportunity lies in how agentic AI reshapes the daily reality of data teams. Data engineers spend less time firefighting pipelines. Analysts and scientists work with cleaner inputs and reach insights faster. Leaders gain confidence through real-time, business-aligned metrics, while governance teams enforce compliance with far less manual overhead. By elevating every role, agentic AI makes observability not just a technical safeguard but a strategic enabler, turning data into a dependable, high-value asset that fuels innovation and competitive advantage.
