Data-driven organizations cannot afford “data blind spots.” When data pipelines break or data quality issues go unnoticed, the business suffers from incorrect decisions and lost trust. This is where data observability tools come in – they monitor the health of your data and pipelines, helping data teams detect and resolve problems before they impact stakeholders. However, not all data observability platforms are created equal. How do you choose the right one for your needs? The key is to evaluate tools against a comprehensive set of criteria that align with your technical requirements and future goals.

In this guide, we’ll break down the essential evaluation criteria for data observability tools. From integration with your data stack and anomaly detection capabilities to alerting mechanisms and scalability, we’ll cover what features and qualities to look for. We’ll also provide example use cases (like monitoring a Snowflake pipeline or catching anomalies in a dbt transformation) to illustrate these criteria in action. By the end, you’ll have a clear framework – and even a handy checklist – to compare data observability solutions and identify which platform (for example, DQLabs) can best ensure reliable, trustworthy data in your organization.

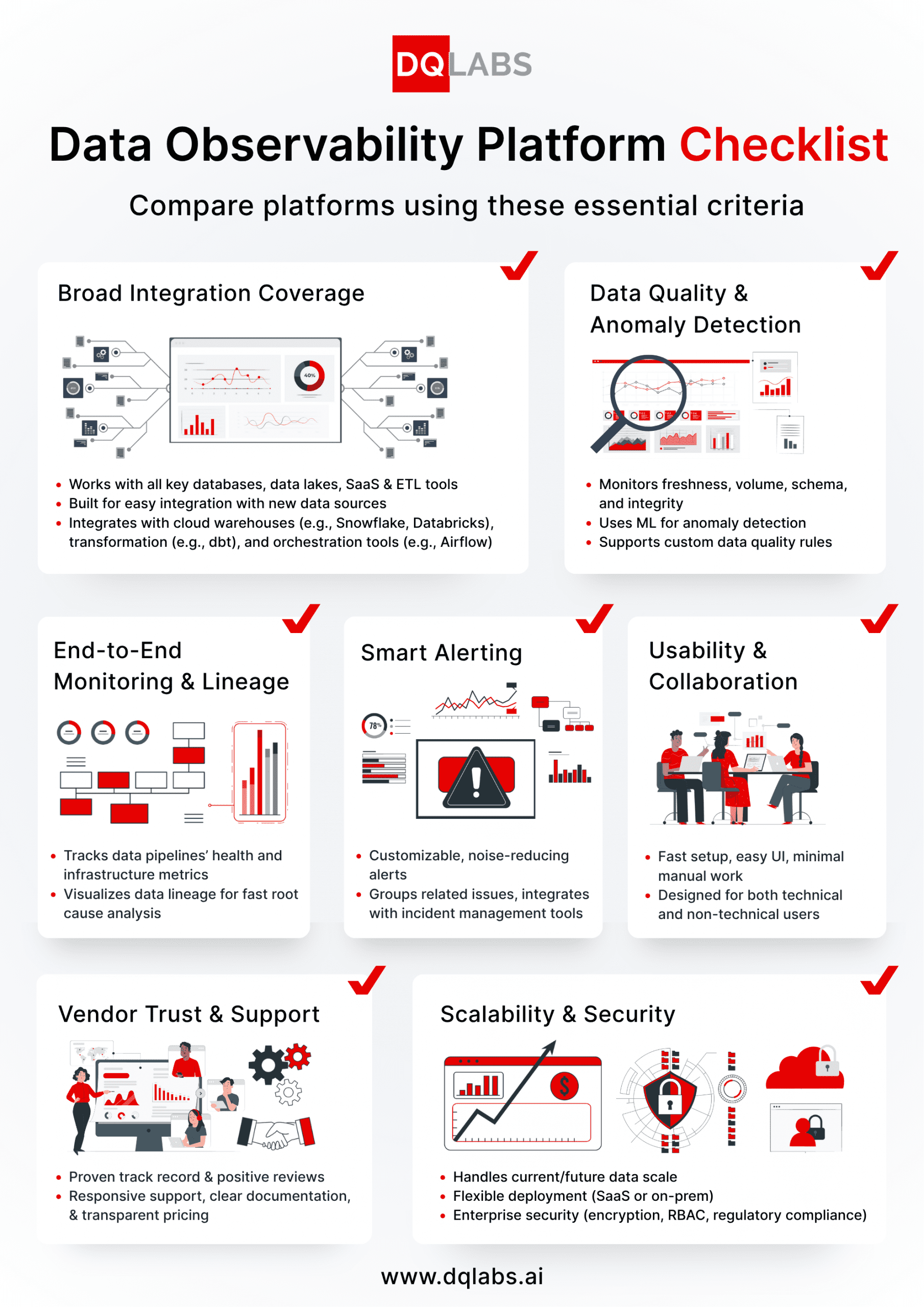

1. Seamless Integration with Your Data Stack

Breadth of Connectors: A top consideration is whether the observability tool can connect to all the data sources and pipelines in your ecosystem. Modern data stacks are diverse – you might have cloud data warehouses (e.g., Snowflake, BigQuery, Redshift), data lakes (S3, ADLS, GCS), relational databases, as well as SaaS applications and third-party APIs. Ensure the tool supports a wide range of data source connectors out-of-the-box, so it can monitor everything from your transactional databases to your SaaS app data exports. The tool should also plug into data integration and ETL/ELT tools (like Fivetran or AWS Glue) and read common file formats (JSON, Parquet, etc.) if needed.

Pipeline and Tool Integrations: Beyond data storage, evaluate how well the tool integrates with your data pipelines and workflow tools. For example, can it hook into your orchestration systems like Apache Airflow to monitor job runtimes, delays, or failures? Does it integrate with transformation frameworks such as dbt to augment your existing tests or detect anomalies in transformation outputs? A good observability platform will seamlessly connect to these systems, ingesting metadata (logs, job statuses, metrics) from them. This integration enables end-to-end visibility – from data ingestion through transformation to BI consumption – all within the observability tool.

2. Comprehensive Data Quality Monitoring and Anomaly Detection

At the heart of data observability is the ability to monitor and ensure data quality across multiple dimensions. Evaluate how the tool approaches detecting data issues:

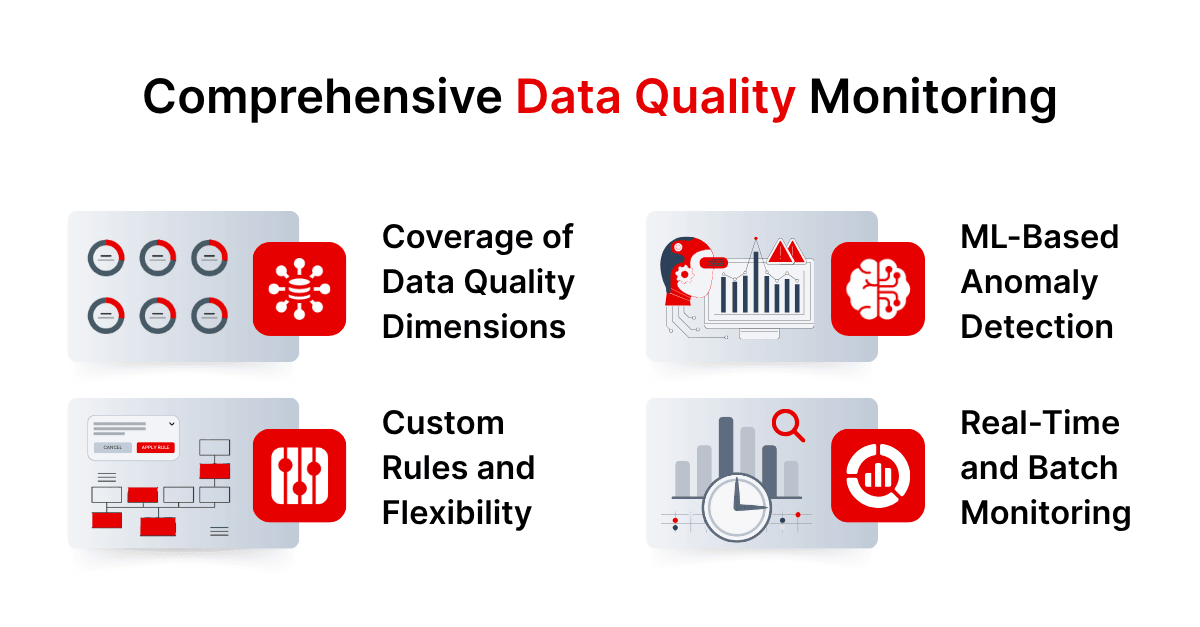

- Coverage of Data Quality Dimensions: Does the platform monitor key data health metrics such as freshness (timeliness of data updates), volume/completeness (e.g., row counts, missing records), schema changes, validity (format or business rule compliance), and distribution of values? The best tools cover all these “pillars” of data observability. For example, if a scheduled data load into a Snowflake table is late or smaller than usual, the tool should flag a freshness or volume anomaly. If a column’s values suddenly fall outside expected ranges (e.g., a spike in nulls or outliers), it should catch that as a potential data quality issue.

- Machine Learning-Based Anomaly Detection: Rigid threshold-based rules can only detect known issues, while modern data observability platforms use machine learning to surface issues nobody thought to write a rule for. Look for tools that automatically establish baselines from historical data and use statistical or ML models to identify abnormal deviations. This is crucial for catching subtle issues that wouldn’t be covered by simple rules. For instance, a good tool will learn the typical daily row count in a fact table or the normal distribution of transaction amounts, and alert you when current data drifts significantly from the norm. Automated anomaly detection also reduces the manual effort of writing and maintaining a separate rule for every table and column. (DQLabs excels here by using AI/ML to profile data and automatically surface anomalies in freshness, volume, and data distributions without requiring hard-coded thresholds.)

- Custom Rules and Flexibility: While automated detection is vital, you also want the flexibility to define custom data quality rules as needed. Evaluate if the tool allows custom validations – for example, the ability to specify business logic (like “Column X should never decrease day-over-day by more than 5%”) or to integrate existing tests. Many data engineers use dbt for writing tests; a capable observability tool might integrate with dbt to import or enhance those tests. Check if you can write custom checks via an AI wizard, SQL queries, or configuration files (YAML/JSON), and whether these can be version-controlled (e.g., stored in Git) for proper DevOps practices.

- Real-Time and Batch Monitoring: Determine whether the tool performs checks in real-time or only in batch windows. If you have streaming data or frequently updated data (say, IoT feeds or event streams), real-time anomaly detection might be important. Some platforms evaluate data continuously or on micro-batches to catch issues as soon as they occur, while others might run checks on a schedule. Align this with your use cases – e.g., if you need to know within minutes that an important KPI dashboard is receiving bad data, real-time monitoring is key.
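
To make the difference between a learned baseline and a custom rule concrete, here is a minimal sketch in Python. It is not how any particular vendor implements detection: the z-score approach, the function names, the threshold of 3.0, and the sample row counts are all assumptions chosen for illustration. Real platforms typically use richer models that account for seasonality and trends.

```python
from statistics import mean, stdev


def volume_anomaly(history, today, z_threshold=3.0):
    """Flag today's row count if it deviates strongly from the historical baseline.

    history: recent daily row counts (hypothetical input, e.g. the last 7 loads).
    Returns (is_anomaly, z_score).
    """
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        # Perfectly flat history: any change at all counts as a deviation.
        return today != baseline, 0.0
    z = (today - baseline) / spread
    return abs(z) > z_threshold, z


def day_over_day_drop(previous, current, max_drop=0.05):
    """Custom rule: a value must not decrease by more than max_drop day-over-day."""
    if previous <= 0:
        return False  # no meaningful baseline to compare against
    return (previous - current) / previous > max_drop


# Illustrative daily row counts for a fact table (made-up numbers).
row_counts = [1000, 1020, 980, 1010, 990, 1005, 995]

small_load = volume_anomaly(row_counts, 400)    # far below the learned baseline
normal_load = volume_anomaly(row_counts, 1003)  # within normal variation

print("small load anomalous:", small_load[0])
print("normal load anomalous:", normal_load[0])
print("6% drop violates rule:", day_over_day_drop(100, 94))
```

The first function needs no hand-written threshold per table, only history, while the second encodes explicit business logic. During a trial it is worth checking that a candidate tool supports both styles.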

3. End-to-End Pipeline & Performance Monitoring

Data observability isn’t just about data values – it’s also about the pipelines and infrastructure that move and transform data. When evaluating tools, investigate their capabilities in monitoring the operational aspects of your data ecosystem:

- Pipeline Health and Job Monitoring: Can the tool monitor scheduled jobs, ETL/ELT processes, and data workflows for issues like failures, runtime anomalies, or delays? For example, if you have a nightly batch job in Airflow that loads data into Databricks or Snowflake, the observability platform should detect if that job fails, gets delayed, or takes significantly longer than usual. During your evaluation, simulate pipeline problems (pause a DAG in Airflow to create a missed run, or inject a failure in a dbt run) and observe whether the platform detects and alerts on them. It should provide contextual information such as which job failed, the affected table, and where in the pipeline the issue occurred. In a real-world scenario, this could be detecting that “Table X in Snowflake has not been updated in 24 hours” due to a missed upstream job. These hands-on validations demonstrate whether the tool can maintain pipeline reliability across your stack.

- Resource and Performance Metrics: Modern data stacks can be complex, involving warehouses, distributed processing engines, etc. A good observability platform will also monitor system performance metrics like query execution times, CPU/memory usage of data processing jobs, or throughput of streaming pipelines. This helps catch infrastructure-related issues (e.g., a Spark job running out of memory or an unusual spike in warehouse credit consumption on Snowflake) that could affect data delivery. Some advanced tools even correlate these metrics with data events – for instance, noticing that a slow pipeline run coincides with an unusual data volume spike.

- Schema and Metadata Changes: The tool should automatically detect schema changes or other metadata modifications in your data systems. If a column is dropped or a data type is changed in a source table, the platform must flag it—especially if that change could break downstream models. Test this by simulating schema edits in a frequently used table and observing how the tool responds. Some platforms also integrate with data catalogs like Alation or Atlan, propagating schema change alerts to governance teams. The best tools track schema versions and highlight changes that may impact data quality, helping teams stay ahead of breakages.

- Data Lineage and Impact Analysis: When something goes wrong, you need to quickly understand the blast radius. Evaluate whether the tool provides data lineage mapping – showing how data flows from sources through transformations to outputs (dashboards, ML models, etc.). Robust lineage helps with root cause analysis: if a BI dashboard is showing incorrect data due to a broken upstream job or a bad source file, lineage visualization will point you to the root cause faster. It’s also useful for impact analysis when a problem is detected – e.g., if a particular table has bad data, lineage can reveal which downstream reports or datasets might be impacted. Look for interactive lineage graphs or detailed dependency lists in the tool. (DQLabs, for example, integrates data lineage into its observability, so users can trace anomalies from a faulty source all the way to affected business reports, simplifying troubleshooting.)
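
The schema-change and impact-analysis ideas above can be sketched in a few lines of Python. This is an illustrative model only: the table names, column types, and the dictionary-based lineage graph are hypothetical, and a real platform would derive both the schema snapshots and the lineage edges from warehouse metadata and query logs.

```python
from collections import deque


def schema_diff(old, new):
    """Compare two {column: type} snapshots of the same table."""
    return {
        "dropped": sorted(set(old) - set(new)),
        "added": sorted(set(new) - set(old)),
        "retyped": sorted(c for c in set(old) & set(new) if old[c] != new[c]),
    }


def downstream_assets(lineage, start):
    """Breadth-first walk over lineage edges to list everything fed by `start`."""
    seen, queue, order = {start}, deque([start]), []
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


# Hypothetical lineage: each key feeds the assets in its list.
lineage = {
    "raw.orders": ["stg.orders"],
    "stg.orders": ["mart.revenue", "mart.customer_ltv"],
    "mart.revenue": ["dashboard.finance"],
}

# Hypothetical schema snapshots taken before and after a source change.
before = {"order_id": "NUMBER", "amount": "NUMBER", "coupon": "VARCHAR"}
after = {"order_id": "NUMBER", "amount": "VARCHAR"}

changes = schema_diff(before, after)
impacted = downstream_assets(lineage, "raw.orders")

print("schema changes:", changes)
print("blast radius:", impacted)
```

Combining the two results is what turns a bare "column dropped" event into an actionable message: which change happened, and which models and dashboards sit downstream of it.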

4. Intelligent Alerting and Incident Management

Catching an anomaly or failure is only half the battle; the next step is making sure the right people are notified and can act. Poor alerting can overwhelm teams with noise or, conversely, leave critical issues unnoticed. When comparing data observability tools, pay close attention to their alerting and incident response features:

- Customizable Alerts: The platform should allow you to configure when and how alerts are triggered and delivered. This includes setting alert thresholds or sensitivity (especially if using ML models – you might adjust the sensitivity for different datasets), choosing alert frequency, and grouping related alerts. For instance, if a nightly ETL job fails, you might want one high-priority alert, not a flood of repetitive messages for every downstream table that didn’t update. Tools that support alert aggregation and deduplication help reduce alert fatigue. Check if you can set different alert levels (e.g., warning vs critical) and routes based on severity.

- Multi-Channel Notifications: Data engineers and analysts live in tools like email, Slack, Teams, and PagerDuty. Ensure the observability tool can send alerts via your preferred communication channels. Slack integration is particularly popular for real-time, ChatOps-style notifications. Integration with ticketing and incident management systems like Jira or ServiceNow is also valuable – the tool might automatically create a ticket when a critical data incident occurs. During evaluation, test an alert: e.g., have the tool send a Slack message or open a Jira ticket for a simulated issue and see if it contains useful information (like error details, impacted systems, links to lineage or dashboards).

- Avoiding Alert Fatigue: Alert fatigue is a real risk if the tool flags too many minor issues or false positives. Modern observability platforms tackle this by prioritizing alerts and allowing tuning. Ask whether the tool employs logic to avoid redundant alerts (for example, if one root cause triggers multiple symptoms, does it intelligently consolidate them?). Also, check if you can easily adjust a monitor’s sensitivity or temporarily mute certain alerts when needed (say, during known maintenance periods). The goal is actionable alerts – every alert should correspond to something truly worth the team’s attention.

- Incident Workflow and Collaboration: Once alerted, how does the tool support the resolution process? Look for features like incident dashboards or runbooks, where team members can see all current data issues and their status. Some tools let you annotate anomalies (e.g., “we investigated this and found the source system outage – fix in progress”) and share notes among the team. Integration with collaboration tools (like linking an issue to your Slack channel discussion or to a Confluence page) can be a big plus. Additionally, role-based access control is important so the right team members can access and update incident status. Ultimately, the observability tool should fit into your DataOps incident management workflow, reducing the time to detect, diagnose, and fix data issues.
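
As a rough illustration of alert consolidation and severity-based routing, the sketch below groups raw alerts by root cause and emits one notification per group. Everything here is assumed for the example: the alert fields, the `ROUTES` table, and the channel names are hypothetical, and the root cause is taken as already known (in practice a tool would infer it, for example from lineage).

```python
from collections import defaultdict

# Hypothetical routing table: severity -> notification channel.
ROUTES = {"critical": "pagerduty", "warning": "slack"}
SEVERITY_RANK = {"warning": 1, "critical": 2}


def consolidate(alerts):
    """Group raw alerts by root cause and emit one routed notification per group."""
    groups = defaultdict(list)
    for alert in alerts:
        groups[alert["root_cause"]].append(alert)

    notifications = []
    for root_cause, members in groups.items():
        # The group inherits the highest severity among its members.
        severity = max((a["severity"] for a in members), key=SEVERITY_RANK.get)
        notifications.append({
            "root_cause": root_cause,
            "severity": severity,
            "channel": ROUTES[severity],
            "affected": sorted(a["asset"] for a in members),
        })
    return notifications


# Made-up alerts: one failed job causes three symptoms, plus one unrelated issue.
raw_alerts = [
    {"asset": "mart.revenue", "root_cause": "job:nightly_etl", "severity": "warning"},
    {"asset": "stg.orders", "root_cause": "job:nightly_etl", "severity": "critical"},
    {"asset": "mart.customer_ltv", "root_cause": "job:nightly_etl", "severity": "warning"},
    {"asset": "raw.events", "root_cause": "schema:raw.events", "severity": "warning"},
]

notifications = consolidate(raw_alerts)
for n in notifications:
    print(n)
```

Four raw alerts collapse into two notifications here. When testing a real tool, a failed upstream job with several stale downstream tables is a good scenario for checking whether it consolidates in a similar way.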

5. Ease of Use and Adoption

A data observability tool will only deliver value if it’s actually used. Ease of use is paramount to drive adoption across your data team (and even with stakeholders like analysts or data scientists). Key things to evaluate include:

- User Interface and Experience: Is the platform interface intuitive and well-designed? A clean, easy-to-navigate dashboard that highlights important metrics (like an overall data health score or number of incidents) can make a big difference. During a demo or trial, note how easy it is to set up a monitor, browse through detected issues, and drill into details. Non-engineering users (like an analytics manager) should be able to understand the health of the data at a glance. If everything requires coding or complex configuration, some team members might shy away from using the tool regularly.

- Setup and Automation: How much effort is required to get value from the tool? The best solutions offer out-of-the-box intelligence – for example, automatically discovering your datasets and suggesting or auto-creating monitoring rules. If a tool requires weeks of configuration and manual rule-writing for each table, it could delay ROI and burden your team. Look for features like one-click onboarding of a data source, auto-profiling of data, and pre-built monitors for common scenarios. DQLabs, for instance, is known for its AI-driven auto-discovery of data quality issues, which reduces the manual work needed by engineers to configure monitors.

- Support for Multiple User Personas: Consider who will interact with the observability platform. Data engineers will set it up and investigate issues, but data analysts or data stewards might use it to get visibility into data health as well. The tool should cater to different skill levels – perhaps a no-code or low-code interface for analysts to view and acknowledge issues, and more advanced options (like SQL-based tests or API access) for engineers. A collaborative feature (like the ability for users to comment on an issue or certify data as valid) can foster a data quality culture across teams.

- Learning Curve and Documentation: Even technical tools should minimize the learning curve. Evaluate the quality of documentation, in-app guidance, and vendor support during trial. If you can’t easily figure out how to create a custom check or integrate a new data source, that’s a red flag. Many vendors provide tutorials or even built-in “tours” of the product – take advantage of those to gauge how quickly your team can become proficient. Also, consider the vendor’s customer success resources: will they assist with onboarding and best practices? Good training and support can greatly ease adoption.

6. Scalability, Performance, and Security

Data observability tools need to handle the scale of your data operations without becoming a bottleneck themselves. They also need to adhere to enterprise security and compliance standards. Key technical considerations include:

- Scalability and Performance: As data volumes and processing demands grow, the platform should handle more assets, higher query loads, and frequent pipeline runs. Assess the architecture—does it use distributed processing and scale easily in cloud environments? During evaluation, monitor whether the tool adds system overhead. A well-designed platform will minimize performance impact, possibly by streaming metadata asynchronously. If your roadmap includes big data or real-time analytics, ensure the solution remains stable under heavy workloads.

- Deployment Flexibility: Evaluate whether the tool is offered as SaaS, on-premises, or hybrid. SaaS may offer faster deployment and automatic updates, but some enterprises require on-prem or private cloud for compliance. If running it in your cloud, confirm support for AWS, Azure, or GCP and ease of setup and maintenance. SaaS users should check data residency options. The ability to support hybrid multi-cloud environments is a plus if your data is spread out.

- Data Privacy and Security: Since observability tools connect to sensitive systems, strong security is non-negotiable. Look for encryption (in transit and at rest), integration with SSO/LDAP, and role-based access control (RBAC) for granular visibility. Ask about certifications and attestations such as SOC 2 and ISO 27001, and about how the vendor supports compliance with regulations like GDPR or HIPAA. Even if accessing only metadata, the platform should not introduce vulnerabilities.

- Reliability and Redundancy: Much like any monitoring system, the observability platform should itself be reliable. Check if the vendor describes their uptime or high-availability features. Is there any single point of failure? In an on-prem deployment, can it run in a clustered mode? If the tool goes down, will you miss critical alerts? Ideally, the architecture should be robust and fault-tolerant, so it remains up and running when you need it most (such as during high-load periods or a major data event).

7. Vendor Stability and Support

Since data observability is a relatively new product category, it’s important to assess the vendor behind the tool as part of your evaluation. You want a solution that will not only fit your needs today but also be supported and evolve as your needs grow. Consider the following:

- Company Track Record: Research the vendor’s background. How long have they been in the market, and do they have a credible team (for instance, founders or engineers with data industry experience)? A company founded by experienced data engineers or ex-industry leaders is more likely to understand the problems deeply and continue innovating in the right direction. Also, check if they have significant funding or a growing customer base – signs that they’ll be around for the long term. While newer startups can still be great, you want confidence that the product won’t be abandoned. (DQLabs, for example, has established itself as a leader by consistently enhancing its platform and delivering successful outcomes for various enterprises, indicating strong vendor reliability.)

- Customer References and Use Cases: Ask the vendor for customer success stories or references. How have other data teams used the tool? Look for use cases like reducing BI dashboard downtime or monitoring large-scale data lakes. Talking directly with a customer (if possible) gives you practical insight into how well the tool performs. Also, check whether the vendor has experience with companies similar in size or industry—this often impacts how well their solution aligns with your needs.

- Support and Services: Evaluate the support experience. Do they offer dedicated help, defined SLAs, and multiple support channels like chat or email? If your team spans time zones, confirm global coverage. Some vendors offer premium support or on-site training—valuable if your team requires more assistance. During evaluation, pay attention to how responsive and helpful the vendor is; it often reflects the support culture post-purchase.

- Pricing Model: Finally, ensure you understand the pricing structure and that it aligns with your usage patterns. Data observability tools may charge based on various metrics – number of data assets monitored, volume of data, number of users, or even compute resources used. Try to get an estimate of what your costs would be as you scale usage. A tool might seem affordable for a small deployment, but could become expensive as you onboard your entire data platform. Look for pricing transparency and the ability to start with a smaller package or trial. It’s also worth considering if the vendor’s model encourages broader adoption (for instance, unlimited users, which encourages more teams to use the tool, versus charging per user, which might limit who gets access).
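
One way to pressure-test a pricing model is to project costs across your expected growth stages. The sketch below compares an asset-based model with a seat-based model. All prices, fees, and growth figures are invented placeholders, not real vendor pricing; substitute the numbers from actual quotes.

```python
def annual_cost_per_asset(assets, price_per_asset):
    """Asset-based pricing: cost grows with the number of monitored tables."""
    return assets * price_per_asset


def annual_cost_per_user(users, price_per_user, platform_fee=0):
    """Seat-based pricing: cost grows with the number of people given access."""
    return platform_fee + users * price_per_user


# Placeholder growth scenarios: (monitored assets, users). Not real data.
scenarios = {"pilot": (50, 5), "year_1": (500, 20), "full_rollout": (3000, 60)}

# Placeholder list prices, invented purely for illustration.
PRICE_PER_ASSET = 20
PRICE_PER_USER = 600
PLATFORM_FEE = 5000

projection = {
    name: {
        "per_asset": annual_cost_per_asset(assets, PRICE_PER_ASSET),
        "per_user": annual_cost_per_user(users, PRICE_PER_USER, PLATFORM_FEE),
    }
    for name, (assets, users) in scenarios.items()
}

for name, costs in projection.items():
    cheaper = min(costs, key=costs.get)
    print(f"{name}: {costs} -> cheaper model: {cheaper}")
```

With these placeholder numbers the asset-based model is cheaper for the pilot but more expensive at full rollout, which is the kind of crossover that a small-deployment quote can hide.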

Evaluation Checklist: Selecting the Right Data Observability Tool

Conclusion

Implementing a data observability platform is a strategic move toward trusted, reliable data. The right solution enables your data and analytics teams to focus less on fixing issues and more on delivering value. When evaluating tools, look beyond flashy features—assess how well the platform fits into your ecosystem, identifies issues intelligently, and supports daily workflows.

This guide covered key evaluation criteria—from integration and anomaly detection to alerting, scalability, and vendor support. We also shared technical scenarios to test, such as detecting a delayed Snowflake load or catching anomalies in dbt outputs. Use the checklist to guide demos and proofs-of-concept.

Ultimately, you want a tool that acts as a constant monitor—always watching and alerting when something goes wrong. With a thoughtful evaluation, you’ll find a solution that meets current needs and scales with your data journey—building trust and enabling consistent delivery of quality data.

DQLabs – as an example of a modern observability platform – addresses many of these criteria by combining automated data quality checks, seamless integration across popular data stacks, and user-friendly workflows. While evaluating, consider how such capabilities align with your needs. With the right choice, you’ll turn data observability from a challenge into a competitive advantage for your organization.
